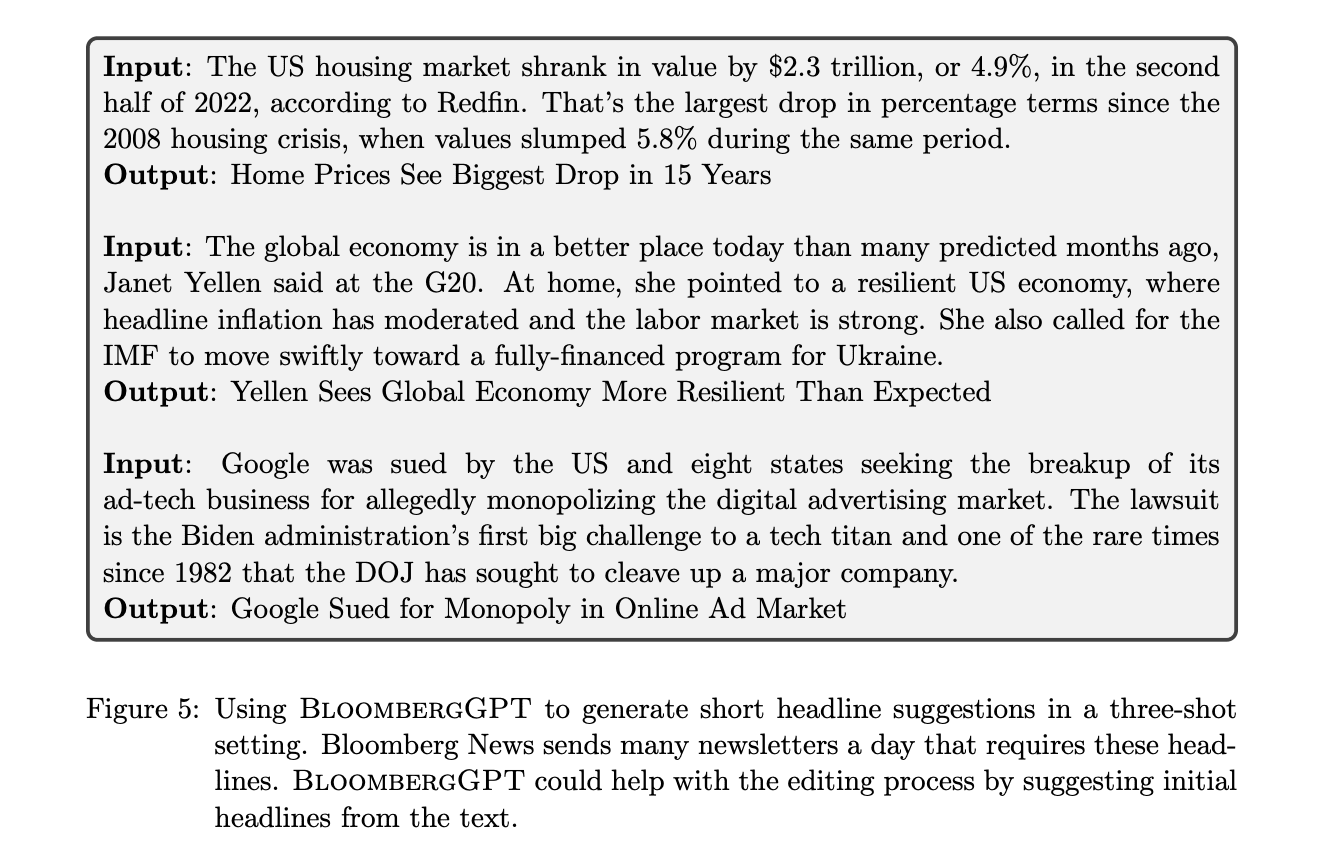

Financial technology companies are increasingly turning to artificial intelligence to help analyze data, automate processes, and improve decision-making. Bloomberg recently unveiled a new AI system called BloombergGPT that is specifically designed to understand financial and business language.

BloombergGPT is what's known as a large language model (LLM).

LLMs are AI systems trained on massive amounts of text so that they can generate human-like text and perform tasks such as question answering and summarization. They have become very popular in recent years thanks to advances in computing power and AI techniques. Well-known examples include GPT-4 from OpenAI and BLOOM from the BigScience research collaboration.

What makes BloombergGPT different is that it was explicitly trained on financial data sources that Bloomberg has curated over decades. This includes 363 billion tokens (the word and sub-word pieces a model reads) from sources such as financial news, earnings reports, regulatory filings, and press releases. The goal was to create an AI system optimized for understanding nuanced financial language.

To supplement the financial data, BloombergGPT was also trained on 345 billion tokens of more general text from publicly available sources like Wikipedia, books, academic papers, and web content. The combination of financial and general data was intended to make the system adept at both financial tasks and general natural language processing abilities.
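To make the balance between the two data sources concrete, the short sketch below computes each source's share of the combined corpus. It assumes the token counts given in the BloombergGPT paper's abstract (363 billion financial, 345 billion general); the function and constant names are illustrative only.

```python
# Token counts in billions, taken from the BloombergGPT paper's abstract.
FINANCIAL_TOKENS_B = 363
GENERAL_TOKENS_B = 345


def corpus_mix(financial_b, general_b):
    """Return (total, financial share, general share) for a two-part corpus."""
    total = financial_b + general_b
    return total, financial_b / total, general_b / total


total, fin_share, gen_share = corpus_mix(FINANCIAL_TOKENS_B, GENERAL_TOKENS_B)
print(f"total: {total}B tokens")
print(f"financial: {fin_share:.1%}, general: {gen_share:.1%}")
```

Under these figures the corpus is split roughly in half, which fits the stated aim of a model that handles both financial and general language.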

In terms of its technical details, BloombergGPT contains 50 billion parameters. Parameters are the adjustable weights inside the model that are tuned during training. More parameters generally allow a model to learn more complex patterns and relationships, at the cost of more memory and compute. For comparison, GPT-3 has 175 billion parameters.
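A rough way to get a feel for these parameter counts is to ask how much memory the weights alone would occupy. The sketch below is back-of-the-envelope arithmetic, assuming 4 bytes per parameter for 32-bit storage and 2 bytes for 16-bit storage; it ignores optimizer state, activations, and everything else a real training run needs.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Approximate parameter counts quoted in the article.
MODELS = {"BloombergGPT": 50e9, "GPT-3": 175e9}

for name, num_params in MODELS.items():
    full = weight_memory_gb(num_params, 4)  # 32-bit floats
    half = weight_memory_gb(num_params, 2)  # 16-bit floats
    print(f"{name}: {full:.0f} GB at 32-bit, {half:.0f} GB at 16-bit")
```

Even at 16 bits per weight, a 50 billion parameter model needs on the order of 100 GB for its weights, which is why models of this size are spread across many accelerators.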

BloombergGPT uses an architecture based on the transformer, which is the most common framework used today for large language models. Rather than stepping through text one word at a time, as earlier recurrent networks did, a transformer uses an attention mechanism to relate each token to the other tokens in its context in parallel. This lets the model draw on distant parts of the text when interpreting any given word.
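The core operation inside a transformer is attention, in which each position builds its output as a weighted mix of the positions it is allowed to see. The sketch below is a minimal, pure-Python version of scaled dot-product attention with a causal mask (each position sees only itself and earlier positions), as is typical for text-generating language models. It is a teaching toy under simplifying assumptions: a single head, no learned projections, and made-up two-dimensional inputs. It is not BloombergGPT's actual implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i attends to positions 0..i."""
    dim = len(queries[0])
    outputs, all_weights = [], []
    for i, query in enumerate(queries):
        # Score the current position against itself and every earlier position.
        scores = [
            sum(q * k for q, k in zip(query, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Output is the weighted average of the visible value vectors.
        output = [
            sum(weights[j] * values[j][d] for j in range(i + 1))
            for d in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Toy input: three token vectors (hypothetical values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(tokens, tokens, tokens)
for i, row in enumerate(weights):
    print(f"position {i} attends over {len(row)} position(s)")
```

The first position can only attend to itself, while the last one mixes information from all three, which is how context accumulates as the text grows.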

During training, the researchers used techniques such as activation checkpointing and mixed-precision arithmetic to lower memory usage and increase speed. With these optimizations they report an average training throughput of 102 teraFLOPS, that is, 102 trillion floating-point operations per second. This figure describes how fast the training hardware ran, not a property of the finished model; sustained compute at this level was needed to train the 50 billion parameter model in a practical amount of time.
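Mixed precision means doing most arithmetic in a compact 16-bit format while keeping a higher-precision copy of the weights for the updates. The sketch below illustrates why that higher-precision copy matters: a small update that is repeatedly added to a 16-bit weight can vanish through rounding. It assumes IEEE half precision, simply because Python's standard `struct` module supports it; the 16-bit format used in the actual training run may differ, and the update size and step count are invented for illustration.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def apply_updates(weight, update, steps, half_precision):
    """Add the same small update repeatedly, optionally rounding to 16 bits."""
    for _ in range(steps):
        weight = weight + update
        if half_precision:
            weight = to_fp16(weight)
    return weight


# Hypothetical numbers: a weight of 1.0 nudged by 0.0001 a thousand times.
low = apply_updates(1.0, 1e-4, 1000, half_precision=True)
high = apply_updates(1.0, 1e-4, 1000, half_precision=False)
print(f"16-bit weight after updates: {low}")
print(f"high-precision weight after updates: {high:.4f}")
```

Near 1.0, half precision cannot represent steps as small as 0.0001, so every update is rounded away and the 16-bit weight never moves, while the high-precision copy ends up close to 1.1.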

To evaluate the capabilities of BloombergGPT, the researchers tested it on a range of financial natural language tasks as well as standard AI benchmarks. On financial tasks, BloombergGPT achieved state-of-the-art results, outperforming other models by significant margins. It performed very well on financial question answering, named entity recognition, and sentiment analysis.

On general benchmarks, BloombergGPT proved competitive with models several times its size. While it wasn't always the top performer, it consistently outscored similarly sized models on benchmarks measuring abilities such as reasoning, reading comprehension, and common-sense knowledge.

The researchers attribute BloombergGPT's effectiveness to three main factors. First and foremost was the high-quality domain-specific data. Second, they believe their choice of tokenizer - the system responsible for breaking text into pieces the model can process - was beneficial. Finally, the model architecture and training techniques allowed them to efficiently train a model competitive with far larger systems.
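To illustrate why a tokenizer's vocabulary can matter for a specialized domain, the sketch below uses a toy greedy longest-match tokenizer with two made-up vocabularies. This is not Bloomberg's tokenizer, which was learned from data; the vocabularies, the sample text, and the function name are all hypothetical. The point is only that a vocabulary containing domain terms splits financial text into fewer, more meaningful pieces.

```python
def greedy_tokenize(text, vocab):
    """Split text into the longest matching vocabulary pieces, left to right.

    Characters that match no vocabulary entry become single-character tokens.
    """
    tokens = []
    max_len = max(len(piece) for piece in vocab)
    i = 0
    while i < len(text):
        for length in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + length]
            if piece in vocab or length == 1:
                tokens.append(piece)
                i += length
                break
    return tokens


# Two hypothetical vocabularies: one generic, one extended with finance terms.
GENERAL_VOCAB = {"earn", "ings", "per", "share", " ", "up"}
FINANCE_VOCAB = GENERAL_VOCAB | {"earnings", "EBITDA", "EPS"}

text = "EBITDA earnings up"
general_tokens = greedy_tokenize(text, GENERAL_VOCAB)
finance_tokens = greedy_tokenize(text, FINANCE_VOCAB)
print(len(general_tokens), general_tokens)
print(len(finance_tokens), finance_tokens)
```

With the generic vocabulary, "EBITDA" falls apart into single letters; with the finance-aware vocabulary it stays a single token, so the same sentence costs fewer tokens and each token carries more meaning.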

The researchers highlight that creating an AI system of this scale still poses challenges. Instabilities can arise during training that require careful monitoring and intervention. They logged their training process in detail to aid future work.

Additionally, the authors considered ethical issues such as potential biases in financial data and possible misuse of the system. Bloomberg has extensive procedures in place to reduce risks and ensure responsible AI development. In keeping with that caution, the company chose not to publicly release the model, to minimize the chances of data leakage or misuse.

In conclusion, BloombergGPT represents a milestone for domain-specific natural language AI. Its training process and strong performance on financial tasks demonstrate the value of curated in-domain data. While specialized, the system retains competitive general abilities as well. As financial institutions continue adopting AI, expect systems like BloombergGPT to play an increasing role in driving automation and insights.

Sources:

BloombergGPT: A Large Language Model for Finance