The Challenge of Reasoning in AI

One of the key challenges in AI is enabling machines to reason like humans. While large language models can generate text that sounds plausible, they often lack the ability to reason or understand the context. This can lead to outputs that are nonsensical or incorrect, despite sounding reasonable.

For instance, if you ask a language model a complex question or a question that requires understanding of the real world beyond its training data, it may struggle to provide a correct answer. This is because these models are trained on large amounts of text data and generate responses based on patterns they've learned from this data, rather than truly understanding the content.

Chain-of-Thought Prompting

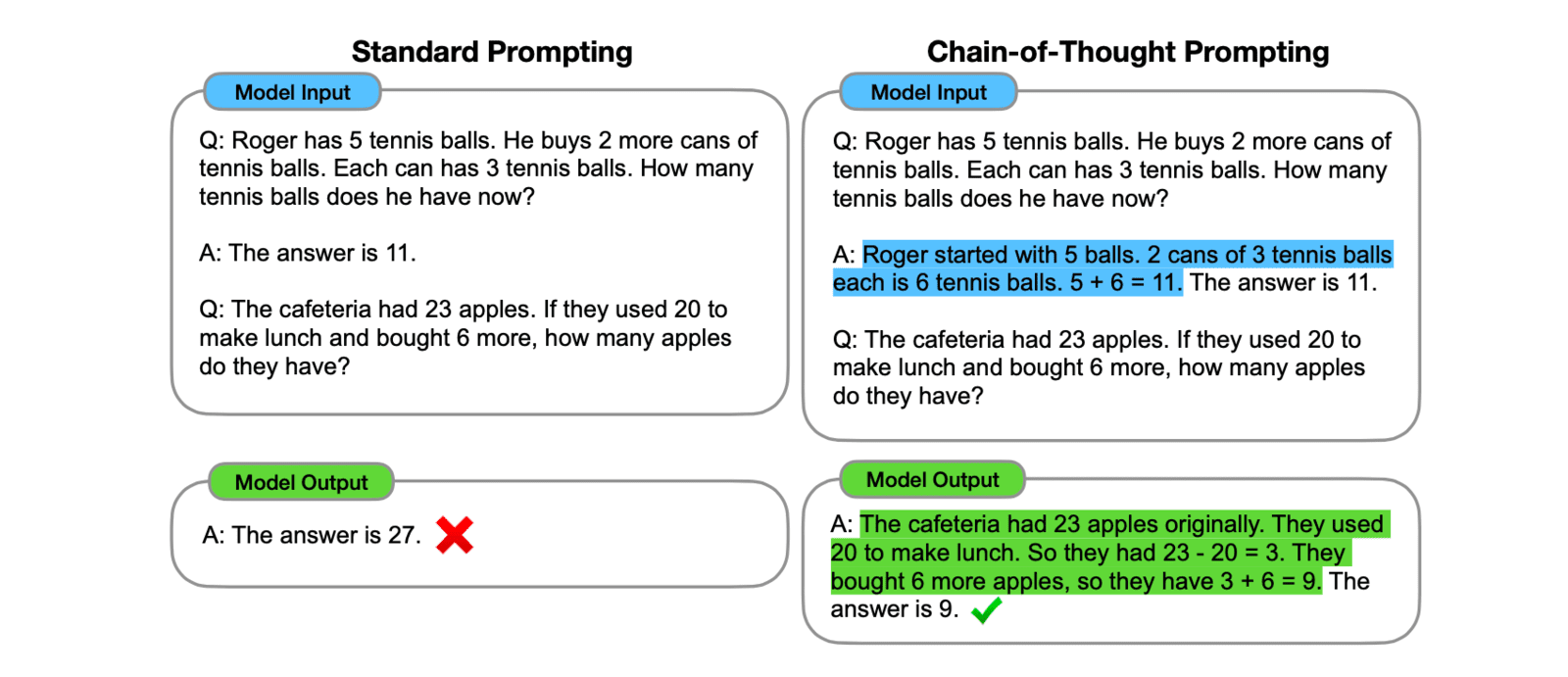

A research paper titled "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" proposes an approach to this issue called chain-of-thought prompting. Instead of showing the model only question-and-answer pairs, the prompt includes a few worked examples whose answers spell out the intermediate reasoning steps. The model then follows that pattern and produces its own chain of thought, a series of intermediate steps, before giving a final answer.

The idea is that writing out intermediate steps, rather than jumping straight to an answer, helps the model break a multi-step problem into manageable pieces. This can mitigate some of the limitations of current language models, such as their tendency to generate plausible-sounding but incorrect or nonsensical answers.
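To make the mechanism concrete, here is a minimal sketch of how a few-shot chain-of-thought prompt might be assembled, assuming a plain text-completion interface. The exemplar text, the `Q:`/`A:` layout, and the helper name `build_cot_prompt` are hypothetical illustrations, not material taken from the paper.

```python
# A minimal few-shot chain-of-thought prompt builder (illustrative sketch).
# The exemplar below is hypothetical; it is not taken from the paper.
from typing import List, Tuple

# Each exemplar pairs a question with a worked answer that spells out
# the intermediate reasoning before stating the final result.
EXEMPLARS: List[Tuple[str, str]] = [
    (
        "A shop has 5 pens and sells 2. It then receives 4 more. How many pens does it have?",
        "The shop starts with 5 pens. After selling 2, it has 5 - 2 = 3. "
        "After receiving 4 more, it has 3 + 4 = 7. The answer is 7.",
    ),
]


def build_cot_prompt(question: str, exemplars: List[Tuple[str, str]] = EXEMPLARS) -> str:
    """Concatenate the worked exemplars, then the new question with an open answer slot."""
    parts = [f"Q: {q}\nA: {a}" for q, a in exemplars]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_cot_prompt(
        "If I have 10 apples and I give away 3, then I buy a dozen more "
        "and give away 5, how many apples do I have left?"
    ))
```

The trailing `A:` leaves the answer slot open, so a completion model would be expected to continue in the same step-by-step style as the exemplar.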

The researchers found that this method substantially improved performance on tasks that require reasoning, including arithmetic, commonsense, and symbolic reasoning, with the gains appearing mainly in sufficiently large models. The generated reasoning chains also make it easier to see how the model arrived at its answer.

Let's consider an example:

Question: "If I have 10 apples and I give away 3, then I buy a dozen more and give away 5, how many apples do I have left?"

Incorrect Language Model Response: "You have 22 apples left."

The correct calculation: start with 10 apples and give away 3 (leaving 7), buy a dozen more (adding 12, for a total of 19), then give away 5. That leaves 14 apples.
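Writing out each intermediate value is exactly the step the incorrect response skipped. A small Python trace of the same arithmetic makes the running total explicit after every operation:

```python
# Trace the apple problem one step at a time, recording each intermediate total.
apples = 10
steps = [
    ("give away 3", -3),
    ("buy a dozen more", 12),
    ("give away 5", -5),
]

running_totals = []
for description, change in steps:
    apples += change
    running_totals.append(apples)
    print(f"After '{description}': {apples} apples")

print(f"Final count: {apples}")  # → Final count: 14
```

The intermediate totals (7, then 19, then 14) are the same values a chain of thought should surface before the final answer.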

Now, let's see how a chain-of-thought prompting approach might help address this:

Prompt: "You start with 10 apples and give away 3. How many apples do you have now?"
Model Response: "You have 7 apples."

Prompt: "You buy a dozen more apples. How many apples do you have now?"
Model Response: "You have 19 apples."

Prompt: "You give away 5 of your apples. How many apples do you have left?"
Model Response: "You have 14 apples left."

In this step-by-step approach, each part of the calculation is posed as a separate prompt, so the model only has to handle one simple operation at a time and each answer builds on the previous one. This is a manual decomposition of the problem; in the chain-of-thought paper itself, the model writes out all of the intermediate steps on its own within a single response.
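The chaining described above can be sketched as a loop that feeds each earlier prompt and answer back into the next prompt. This is a minimal sketch, assuming `ask` is any text-in, text-out model call; the function name `run_prompt_chain`, the transcript format, and the scripted `fake_ask` stand-in are all hypothetical.

```python
# Sketch of a prompt chain: each step's prompt includes the earlier
# prompts and answers, so the model can build on its previous responses.
# `ask` stands in for any text-in, text-out model call (hypothetical).
from typing import Callable, List


def run_prompt_chain(steps: List[str], ask: Callable[[str], str]) -> List[str]:
    transcript = ""  # accumulated dialogue so far
    answers: List[str] = []
    for step in steps:
        prompt = f"{transcript}Prompt: {step}\nModel Response:"
        answer = ask(prompt).strip()
        answers.append(answer)
        # Carry the full exchange forward into the next prompt.
        transcript = f"{prompt} {answer}\n"
    return answers


if __name__ == "__main__":
    # A scripted stand-in for a real model, used only to show the data flow.
    canned = iter(["You have 7 apples.", "You have 19 apples.", "You have 14 apples left."])
    seen_prompts: List[str] = []

    def fake_ask(prompt: str) -> str:
        seen_prompts.append(prompt)
        return next(canned)

    steps = [
        "You start with 10 apples and give away 3. How many apples do you have now?",
        "You buy a dozen more apples. How many apples do you have now?",
        "You give away 5 of your apples. How many apples do you have left?",
    ]
    print(run_prompt_chain(steps, fake_ask))
    print(seen_prompts[-1])  # the last prompt contains the whole earlier exchange
```

Keeping the full transcript in every prompt is what lets a later step refer to an earlier answer; the trade-off is that prompts grow with each step.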

Splitting a problem into separate prompts is not always necessary: simply asking the model to reason step by step within a single prompt can often produce the desired outcome.
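A single-prompt version of this idea only needs a trigger phrase appended to the question, plus some way to pull the final answer out of the reasoning text. The sketch below assumes the widely used phrase "Let's think step by step." and a hypothetical model response; the last-integer extraction in `extract_final_number` is a simple heuristic chosen for illustration, not a method from the papers discussed here.

```python
import re
from typing import Optional


def zero_shot_cot_prompt(question: str) -> str:
    """Append a step-by-step trigger phrase so the model writes out its reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


def extract_final_number(response: str) -> Optional[int]:
    """Simple heuristic: take the last non-negative integer mentioned in the response."""
    numbers = re.findall(r"\d+", response)
    return int(numbers[-1]) if numbers else None


if __name__ == "__main__":
    print(zero_shot_cot_prompt(
        "If I have 10 apples and I give away 3, then I buy a dozen more "
        "and give away 5, how many apples do I have left?"
    ))
    # A hypothetical model response, used only to exercise the extractor.
    reply = "10 - 3 = 7. 7 + 12 = 19. 19 - 5 = 14. So you have 14 apples left."
    print(extract_final_number(reply))  # → 14
```

The extraction heuristic is fragile (it fails if the model mentions another number after its answer), which is why some setups ask the model to state the final answer in a fixed format.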

Enhancing Temporal Reasoning

Another research paper titled "Towards Benchmarking and Improving the Temporal Reasoning Capability of Large Language Models" focuses on improving the temporal reasoning capability of large language models. The authors introduce a comprehensive probing dataset to evaluate the temporal reasoning capability of these models. They also propose a novel learning framework to improve this capability, based on temporal span extraction and time-sensitive reinforcement learning.

Temporal reasoning is a complex task that involves understanding not just the order of events, but also their durations and the intervals between them. This makes it a challenging problem for AI, but also a crucial one, as many real-world tasks require the ability to reason about time.

For example, knowing whether one event occurred before or after another can be critical for making business decisions or interpreting historical trends.
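The three ingredients of temporal reasoning named above (order, duration, and intervals) are easy to state precisely in code, which helps show what a model has to get right when it answers such questions in text. This is a minimal sketch with hypothetical events and a hypothetical `Event` type; it illustrates the kinds of questions involved, not the benchmark or the training framework from the paper.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass
class Event:
    name: str
    start: date
    end: date

    def duration_days(self) -> int:
        """Length of the event in days."""
        return (self.end - self.start).days


def in_order(events: List[Event]) -> List[str]:
    """Names of the events sorted by start date (the 'order' question)."""
    return [e.name for e in sorted(events, key=lambda e: e.start)]


def gap_days(first: Event, second: Event) -> int:
    """Days from the end of `first` to the start of `second`; negative means overlap."""
    return (second.start - first.end).days


if __name__ == "__main__":
    # Hypothetical events, chosen only to illustrate the three kinds of question.
    launch = Event("product launch", date(2023, 3, 1), date(2023, 3, 15))
    campaign = Event("marketing campaign", date(2023, 2, 10), date(2023, 3, 5))

    print(in_order([launch, campaign]))  # ['marketing campaign', 'product launch']
    print(campaign.duration_days())      # 23
    print(gap_days(campaign, launch))    # -4, i.e. the two events overlap
```

Each answer depends on getting the underlying dates right, and a question such as "did the campaign end before the launch?" needs both the order and the interval, which is where plausible-sounding model answers often go wrong.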

Visual-Language Models and Reasoning

In the paper "Enhance Reasoning Ability of Visual-Language Models via Large Language Models", the authors propose a method called TReE, which transfers the reasoning ability of a large language model to a visual-language model in zero-shot scenarios. This approach could be particularly useful for tasks that combine visual and textual information, such as image captioning or visual question answering.

Implications for Business Leaders

The advancements in improving the reasoning capabilities of large language models have significant implications for business leaders. These models can be used to automate various tasks, such as customer service, content generation, and data analysis. Improved reasoning capabilities can make these models more effective and reliable, leading to better business outcomes.

Moreover, these models can provide valuable insights by analyzing large amounts of text data, such as customer reviews or social media posts. They can identify trends, sentiments, and key topics, helping business leaders to make informed decisions.

However, it's important to remember that these models are not perfect. They still lack a true understanding of the world and can make mistakes. Therefore, their outputs should always be reviewed and verified by human experts.

In conclusion, research on improving the reasoning capabilities of large language models is a promising step towards making AI systems more intelligent and useful. As these models continue to improve, they will become an increasingly valuable tool for businesses.