Tokenization is a foundational step in natural language processing (NLP) and machine learning.

Large Language Models are big statistical calculators that work with numbers, not words. Tokenization converts text into numbers, with each number representing a token's position in a fixed vocabulary (a dictionary of all the tokens the model knows).

Tokenization breaks down a piece of text into smaller units, called "tokens." These tokens can represent whole words, parts of words, or even multiple words in some languages. For instance, the sentence "ChatGPT is fun!" might be broken down into tokens like ["Chat", "G", "PT", " is", " fun", "!"].
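To make the text-to-numbers step concrete, here is a minimal sketch. It assumes a tiny hypothetical vocabulary (`TOY_VOCAB`) and a simple greedy longest-match rule. Real tokenizers use far larger vocabularies and learned rules, so treat this as an illustration rather than how any particular model works.

```python
# Hypothetical toy vocabulary: token string -> integer ID.
TOY_VOCAB = {"Chat": 0, "G": 1, "PT": 2, " is": 3, " fun": 4, "!": 5}

def tokenize(text, vocab):
    """Greedy longest-match: at each position, take the longest vocabulary entry."""
    tokens = []
    pos = 0
    max_len = max(len(tok) for tok in vocab)
    while pos < len(text):
        # Try the longest candidate first, then shrink one character at a time.
        for length in range(min(max_len, len(text) - pos), 0, -1):
            piece = text[pos:pos + length]
            if piece in vocab:
                tokens.append(piece)
                pos += length
                break
        else:
            raise ValueError(f"no token covers {text[pos]!r} at position {pos}")
    return tokens

tokens = tokenize("ChatGPT is fun!", TOY_VOCAB)
ids = [TOY_VOCAB[tok] for tok in tokens]
print(tokens)  # ['Chat', 'G', 'PT', ' is', ' fun', '!']
print(ids)     # [0, 1, 2, 3, 4, 5]
```

The model only ever sees the list of IDs. The strings exist purely so that humans can read the input and output.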

You can choose from multiple tokenization methods, but it's crucial to consistently use the same method during both training and text generation.
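A small sketch shows why consistency matters. The two vocabularies below are hypothetical and contain the same tokens under different IDs. Decoding IDs with a vocabulary other than the one that produced them scrambles the text.

```python
# Two hypothetical vocabularies holding the same tokens under different IDs.
training_vocab = ["Chat", "G", "PT", " is", " fun", "!"]
other_vocab = [" fun", "!", "Chat", "G", "PT", " is"]

tokens = ["Chat", "G", "PT", " is", " fun", "!"]
ids = [training_vocab.index(tok) for tok in tokens]

# Decode the same IDs with the matching and the mismatched vocabulary.
same_tokenizer = "".join(training_vocab[i] for i in ids)
mismatched = "".join(other_vocab[i] for i in ids)

print(same_tokenizer)  # ChatGPT is fun!
print(mismatched)      #  fun!ChatGPT is
```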

Why is this important for large language models?

Understanding Context: By breaking text into tokens, the model can process and understand the context around each token. It's like looking at each puzzle piece and understanding where it might fit in the bigger picture.

Efficiency: Language models can only process a limited number of tokens at once (the context window). The fewer tokens a tokenizer needs to represent a text, the more text fits within that limit.

Flexibility: Different languages have different structures. Tokenization allows these models to be flexible and work with multiple languages. For example, in English, spaces often separate words, but in languages like Chinese, words are often clustered together without spaces. Tokenization helps the model handle such variations.

Training: When training these models on vast amounts of text, tokenization ensures that the model learns from consistent and standardized units of text.
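The token limit mentioned under Efficiency can be illustrated with a short sketch. The helper name `fit_to_context` and the policy of keeping the most recent tokens are assumptions made for illustration. Applications differ in how they decide what to drop.

```python
def fit_to_context(token_ids, max_tokens):
    """Keep only the most recent max_tokens IDs, dropping the oldest ones."""
    if max_tokens <= 0:
        return []
    return token_ids[-max_tokens:]

story_ids = list(range(10))            # stand-in for a tokenized story
window = fit_to_context(story_ids, 4)  # pretend the model accepts 4 tokens
print(window)  # [6, 7, 8, 9]
```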

In essence, for large language models, tokenization is a foundational step that allows them to read, understand, and generate human-like text across various languages and contexts.

Trade-offs

Different tokenization strategies come with their own sets of trade-offs. Let's delve into some of these key trade-offs:

Granularity:

Fine-grained (e.g., character-level):

Pros: Can handle any word or term, even one the model has never seen before. It's very flexible and can be language-agnostic.

Cons: Requires more tokens to represent a text, which can be computationally expensive and may not capture semantic meanings as effectively.

Coarse-grained (e.g., word-level):

Pros: Can capture semantic meanings more directly and is often more efficient in terms of the number of tokens used.

Cons: Struggles with out-of-vocabulary words and might not be as flexible across different languages.
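The granularity trade-off is easy to see on a toy sentence. This sketch uses plain Python string operations as stand-ins for a character-level and a word-level tokenizer. The sentence and the unseen word are made up for illustration.

```python
text = "the cat sat on the mat"

char_tokens = list(text)     # character-level: one token per character
word_tokens = text.split()   # word-level: one token per whitespace-separated word

print(len(char_tokens))  # 22
print(len(word_tokens))  # 6

# Vocabularies built from this text only.
char_vocab = set(char_tokens)
word_vocab = set(word_tokens)

# A word that never appeared in the text.
new_word = "moths"
word_level_ok = new_word in word_vocab
char_level_ok = all(ch in char_vocab for ch in new_word)
print(word_level_ok)  # False: out of vocabulary at word level
print(char_level_ok)  # True: every character is already known
```

The character-level version needs almost four times as many tokens, but it can still spell a word the word-level vocabulary has never seen.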

Language Dependence:

Language-specific tokenizers:

Pros: Optimized for a particular language, capturing its nuances and structures effectively.

Cons: Not versatile. A separate tokenizer would be needed for each language, which isn't scalable for multilingual models.

Language-agnostic tokenizers:

Pros: Can be used across multiple languages, making them ideal for multilingual models.

Cons: Might not capture the intricacies of each individual language as effectively as a language-specific tokenizer.

Fixed vs. Dynamic Vocabulary:

Fixed Vocabulary:

Pros: Deterministic and consistent in its tokenization. Easier to manage and deploy.

Cons: Struggles with out-of-vocabulary terms and might become outdated as language evolves.

Dynamic (or adaptive) Vocabulary:

Pros: Can adjust to new terms or slang, making it more flexible and up-to-date.

Cons: More complex to implement and might introduce inconsistencies over time.

Efficiency vs. Coverage: Some tokenizers aim for maximum coverage, ensuring they can handle any text thrown at them. Others prioritize efficiency, using the fewest tokens possible to represent a text. There's a balance to strike here: more coverage can mean more computational overhead, while prioritizing efficiency might mean sacrificing the ability to handle rare terms.
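One common way to get full coverage is to fall back to raw bytes for anything outside the vocabulary. The sketch below assumes a hypothetical two-word vocabulary and a made-up `<0x..>` notation for byte tokens. It shows the cost: a rare word expands into many tokens.

```python
def encode_with_byte_fallback(words, vocab):
    """Use one token per known word, otherwise one token per UTF-8 byte."""
    tokens = []
    for word in words:
        if word in vocab:
            tokens.append(word)
        else:
            tokens.extend(f"<0x{b:02X}>" for b in word.encode("utf-8"))
    return tokens

vocab = {"hello", "world"}  # hypothetical vocabulary
common = encode_with_byte_fallback(["hello", "world"], vocab)
rare = encode_with_byte_fallback(["hello", "zyzzyva"], vocab)

print(len(common))  # 2
print(len(rare))    # 8
print(rare[1])      # <0x7A>
```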

Complexity and Overhead: Advanced tokenization methods, like Byte-Pair Encoding (BPE) or SentencePiece, can handle a wide range of text types and languages. However, they introduce additional computational and implementation overhead compared to simpler methods.
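For a feel of what BPE involves, here is a minimal sketch of its training loop: repeatedly find the most frequent adjacent pair of symbols and merge it into a new symbol. The word counts are invented, ties are broken by first occurrence, and production implementations add pre-tokenization, byte handling, and many optimizations that are omitted here.

```python
from collections import Counter

def most_frequent_pair(words):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in words.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    # max() returns the first pair with the highest count.
    return max(pairs, key=pairs.get) if pairs else None

def merge_pair(words, pair):
    """Replace every occurrence of the pair with a single merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def train_bpe(word_counts, num_merges):
    """Start from single characters and learn num_merges merge rules."""
    words = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pair = most_frequent_pair(words)
        if pair is None:
            break
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words

# Invented corpus statistics: word -> number of occurrences.
merges, words = train_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

After three merges, "newest" is represented as `n`, `e`, `w`, `est`: the frequent suffix has become a single token while the rarer stem is still spelled out.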

Consistency: Some tokenization methods might tokenize the same text differently based on context, for example depending on whether a word is preceded by a space, leading to potential inconsistencies. While this can be beneficial in capturing nuanced meanings, it can also introduce unpredictability in the model's behavior.

Choosing a tokenizer for a large language model involves weighing these trade-offs based on the specific goals and constraints of the project. Whether the priority is multilingual support, computational efficiency, or capturing linguistic nuances, understanding these trade-offs is crucial in making an informed decision.

NovelAI's Tokenizer to "enable stronger storytelling capabilities"

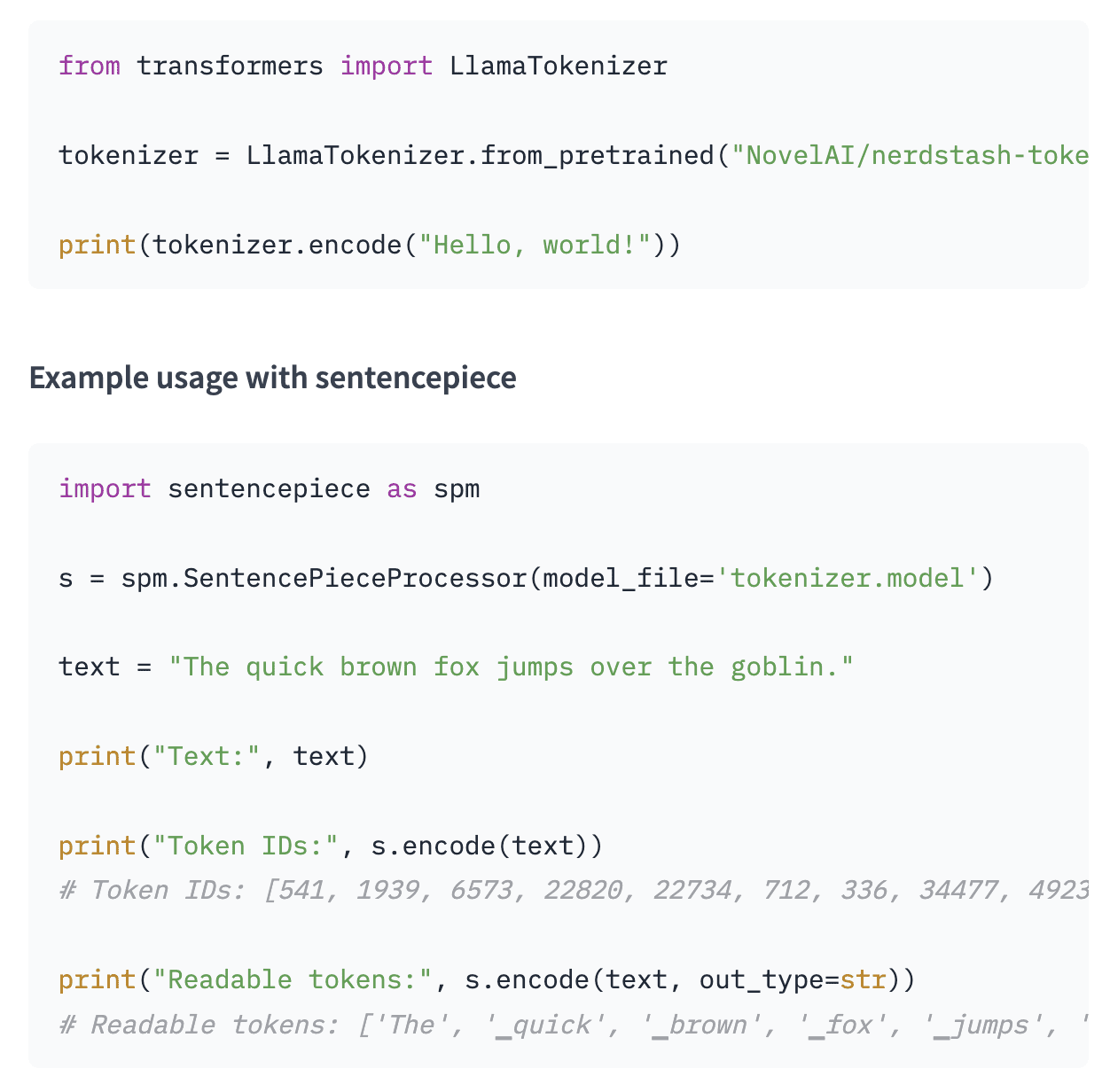

NovelAI recently developed a custom tokenizer for their AI models. The GitHub project detailing their process provides an inside look at engineering trade-offs like vocabulary size, compression ratio, and handling numerals. The focus of this project is to build a tokenizer that enables stronger storytelling capabilities. Let's explore!

On the surface, the new tokenizer offers clear advantages:

Improved Granularity and Flexibility: Unlike traditional tokenizers, this one offers a balance between word-level and subword-level tokenization. By breaking down words into meaningful fragments, it can better understand and generate nuanced text. This is especially crucial for storytelling where context, nuance, and subtlety matter.

Compression Ratio: A higher compression ratio means each token covers more text on average, so the model can fit larger chunks of text into its context at once. This is vital for maintaining context in long narratives or when referencing earlier parts of a story. The tokenizer achieves a 7-19% higher compression ratio than the LLaMA tokenizer on significant parts of the English dataset. This efficiency can translate to richer and more coherent narratives, especially in longer stories.
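As a rough illustration of how such a comparison can be computed, the sketch below treats compression ratio as characters per token. That definition is an assumption, since the project may measure it differently. The character and token counts are invented and are not results from either tokenizer.

```python
def chars_per_token(num_chars, num_tokens):
    """Average number of characters covered by one token."""
    return num_chars / num_tokens

def relative_gain_percent(new_ratio, baseline_ratio):
    """How much higher new_ratio is than baseline_ratio, in percent."""
    return (new_ratio / baseline_ratio - 1) * 100

NUM_CHARS = 1200  # invented length of a sample text

baseline = chars_per_token(NUM_CHARS, 300)   # hypothetical baseline tokenizer
candidate = chars_per_token(NUM_CHARS, 270)  # hypothetical new tokenizer
gain = relative_gain_percent(candidate, baseline)

print(baseline)        # 4.0
print(round(gain, 1))  # 11.1
```

In this made-up case, the same context window would hold about 11% more text with the second tokenizer.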

Adaptability and Evolution: The iterative approach to tokenizer training, with multiple runs and rebalancing, ensures that the tokenizer is optimized for the specific nuances of the training dataset. This adaptability is key for evolving storytelling styles and trends.

Some other pros are mentioned. These are more specific to the NovelAI project:

Multilingual Capabilities: By accommodating both English and Japanese from the start, the tokenizer is designed for bilingual storytelling. This means it can seamlessly switch between languages or even blend them, offering richer narratives and reaching a broader audience.

Efficient Handling of Unicode Characters: The ability to natively tokenize Unicode characters, especially emojis, allows for more expressive storytelling. In modern communication, emojis can convey emotions, context, and tone, which makes them valuable in narratives, although they are less relevant to novel writing.

Numeric Understanding: Tokenizing numbers digit by digit enhances the model's capability to understand and manipulate numeric values. This is crucial for stories that involve dates, quantities, or any numerical context.
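Digit-by-digit splitting can be sketched with a regular expression. This is only an illustration of the idea using Python's `re` module, not the project's actual pre-tokenization rules: every digit becomes its own piece, while runs of non-digit characters are left intact for a later tokenization step.

```python
import re

def split_digits(text):
    """Split text so each digit stands alone; non-digit runs stay together."""
    return re.findall(r"\d|\D+", text)

pieces = split_digits("In 1999, 42 ships")
print(pieces)  # ['In ', '1', '9', '9', '9', ', ', '4', '2', ' ships']
```

With this scheme "1999" and "1998" share their first three tokens, rather than being two unrelated vocabulary entries.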

Disadvantages:

Complexity and Maintenance: Training the tokenizer added development time and complexity.

BPE vs. Unigram: The decision to choose BPE over Unigram was based on compression ratio. While BPE might offer better compression, Unigram might provide more natural word segmentations. The storytelling quality might be affected if the tokenizer doesn't segment words in a way that's intuitive to human readers.

Multilingual Limitations: While accommodating both English and Japanese is a strength, it might also be a limitation. The tokenizer might be overly specialized for these two languages, potentially making it less effective for other languages or multilingual contexts beyond English and Japanese.

Vocabulary Size: The decision to use a vocabulary size of 65535 tokens, while efficient from a computational standpoint, might introduce limitations. Is this size sufficient to capture the nuances of both English and Japanese, especially given the richness of the Japanese writing system?
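One plausible reason this size is computationally convenient, and this is an assumption rather than something stated above, is that every token ID from 0 to 65534 fits in an unsigned 16-bit integer. The sketch checks this with Python's `struct` module.

```python
import struct

VOCAB_SIZE = 65535
largest_id = VOCAB_SIZE - 1  # IDs run from 0 to 65534

# "<H" is a little-endian unsigned 16-bit integer (always 2 bytes).
packed = struct.pack("<H", largest_id)
print(len(packed))  # 2

def fits_in_uint16(token_id):
    """Return True if token_id can be stored in 16 unsigned bits."""
    try:
        struct.pack("<H", token_id)
        return True
    except struct.error:
        return False

print(fits_in_uint16(largest_id))  # True
print(fits_in_uint16(65536))       # False
```

Storing tokenized text at two bytes per token halves the footprint compared with 32-bit IDs, which can matter for very large training datasets.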

Numeric Tokenization: Tokenizing numbers digit by digit can indeed improve the model's understanding of numeric values. However, it might also make the model less adept at recognizing larger numerical patterns or relationships between numbers.

Handling of Unicode Characters: While the ability to natively tokenize Unicode characters is a strength, there's a potential for overfitting or misinterpretation. Emojis and other Unicode characters can have different meanings in different contexts or cultures. Relying heavily on them might lead to misunderstandings in generated narratives.