The rapid progress of artificial intelligence over the past decade owes much to a class of algorithms called transformer neural networks. Transformers gave rise to large language models (LLMs) like GPT-4 or Claude 2 that display impressive natural language abilities.

But as AI becomes more integrated into business processes, sole reliance on data-driven machine learning approaches like transformers may prove limiting. Researchers are exploring neurosymbolic methods, which combine these powerful statistical models with symbolic techniques that incorporate logical reasoning and structure.

The result could be AI systems that blend raw pattern recognition power with human-like compositional generalization and interpretability.

The Rise of Large Language Models

Much of the current excitement around AI stems from the advances of LLMs over the past few years. Models like OpenAI's GPT-3 and Google's PaLM and LaMDA have shown the ability to generate human-like text, answer questions, and accomplish tasks from basic prompts.

LLMs owe their abilities to a neural network architecture called the transformer. Where earlier recurrent neural networks read text one token at a time, transformers process a whole context at once. Their attention mechanism captures long-range dependencies in language by letting each word attend to every other word in the context.
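To make the idea of attending to all words in a context concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is an illustration under simplifying assumptions, not how production models are implemented: real transformers apply learned projections to produce queries, keys, and values, use many attention heads, and run on tensor libraries. The two-dimensional `tokens` embeddings are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention over a whole sequence.

    Each argument is a list of equal-length vectors, one per token.
    Returns (outputs, weights), where weights[i][j] says how strongly
    token i attends to token j.
    """
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Compare this token against every token in the context.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Mix the value vectors according to those weights.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-token context (assumption for the demo).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

Every row of `weights` covers the full context, which is why distance between two words does not by itself weaken their connection the way it tends to in a recurrent network.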

Training transformers on massive text corpora, such as text scraped from the internet, produces general-purpose language models. With enough data and compute, these models learn statistical representations that prove surprisingly versatile for language tasks.

Fine-tuning specializes an LLM for a specific application by further training the model on task data. For example, GPT-3 can be fine-tuned into a conversational chatbot or a code completion tool.

The broad capabilities of LLMs, along with their ease of use via prompting, have led to widespread adoption. Startups like Anthropic and Cohere are commercializing LLMs for business use cases. Apps built on LLMs range from automating customer support to generating content to synthesizing code.

Limits of Language Models

But for all their progress, LLMs still suffer from key limitations. Most notably, they display limited compositional generalization outside the distribution of their training data. For example, an LLM trained on English text can struggle with novel sentence structures or made-up words.

Humans seamlessly compose known concepts into new combinations thanks to our intuitive understanding of language syntax and meaning. Neural networks have no such innate symbolic reasoning capabilities.

LLMs are also black boxes. They can generate plausible and useful text or code but offer no interpretable justification for their outputs. Lack of interpretability makes it hard to audit models or identify causes of failures.

Finally, the massive scale of data and compute required to train LLMs makes them environmentally costly. Models that need less data and fewer parameters would allow much wider deployment of AI technology.

Integrating Symbolic Representations

To overcome the limits of language models, researchers are finding ways to incorporate more logical reasoning. The aim is to complement the statistical learning with capabilities closer to human understanding.

One approach injects structured knowledge representations into the training process. For example, some methods jointly train the language model with a knowledge graph, which acts like a symbolic memory bank to improve reasoning.

Knowledge graphs are data structures that represent facts as networks of entities and relationships. They encode real-world knowledge in a machine-readable graph format, with nodes for entities like people and edges for relationships like "employed at". This allows computers to automatically reason over millions of interconnected facts. Knowledge graphs help power many AI applications today, including search, recommendations, and question answering.

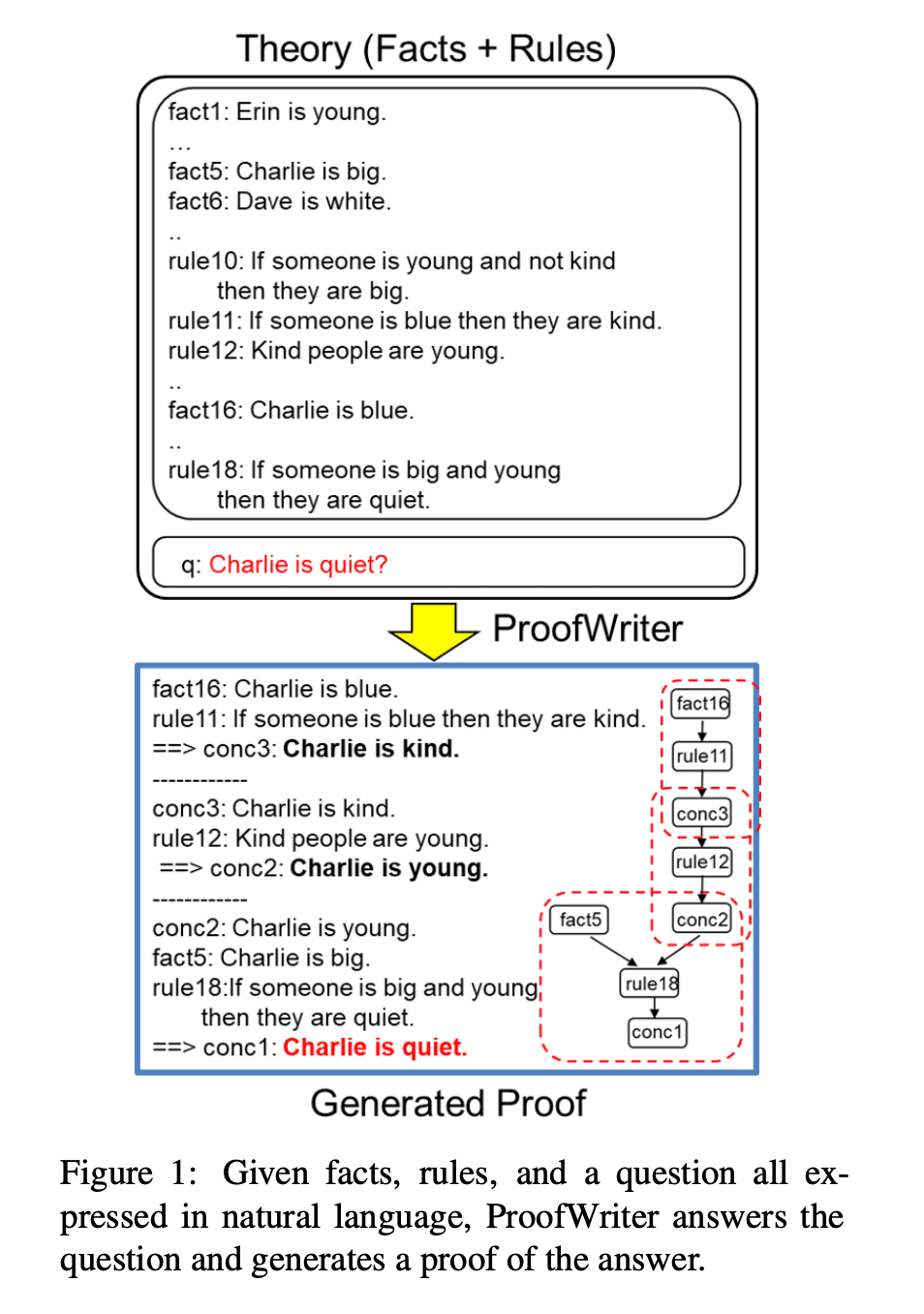
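As a rough illustration of the data structure, a knowledge graph can be sketched as a set of (subject, relation, object) triples. The `KnowledgeGraph` class, its method names, and the facts below are all hypothetical and chosen for the example; production systems typically use dedicated graph stores and query languages, and this sketch says nothing about how a graph would be coupled to model training.

```python
from collections import defaultdict

class KnowledgeGraph:
    """A toy triple store: nodes are entities, edges are named relations."""

    def __init__(self):
        # subject -> set of (relation, object) edges leaving that subject
        self._out = defaultdict(set)

    def add(self, subject, relation, obj):
        """Record one fact as a directed, labeled edge."""
        self._out[subject].add((relation, obj))

    def objects(self, subject, relation):
        """All entities reachable from subject over a single relation."""
        return {o for r, o in self._out.get(subject, ()) if r == relation}

    def follow(self, subject, *relations):
        """Chain several relations, e.g. employer -> location."""
        frontier = {subject}
        for relation in relations:
            frontier = {o for s in frontier for o in self.objects(s, relation)}
        return frontier

# Made-up facts for the demo.
kg = KnowledgeGraph()
kg.add("ada", "employed at", "acme")
kg.add("acme", "located in", "berlin")
kg.add("berlin", "located in", "germany")

# Multi-hop question: where is Ada's employer located?
employer_city = kg.follow("ada", "employed at", "located in")
```

Here `employer_city` comes out as `{"berlin"}`: the answer is never stored as a single fact, it is derived by walking two edges, which is the kind of explicit, inspectable reasoning step the graph contributes.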

Other techniques draw inspiration from classic logic programming languages like Prolog. These languages represent knowledge as human-readable facts and rules. By integrating such rules into training, the aim is to bake in more systematic symbolic thinking.
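To show what rule-based knowledge of this kind looks like, the sketch below mimics the Prolog rule `grandparent(X, Z) :- parent(X, Y), parent(Y, Z).` in Python using naive forward chaining. It is a simplified stand-in, not Prolog's actual resolution strategy, and it does not show how rules would be integrated into neural training. The function names and family facts are hypothetical; following Prolog convention, terms starting with an uppercase letter are treated as variables.

```python
def is_var(term):
    """Prolog convention: a term starting with an uppercase letter is a variable."""
    return term[:1].isupper()

def match(pattern, fact, bindings):
    """Extend bindings so that pattern equals fact, or return None on failure."""
    if len(pattern) != len(fact):
        return None
    bindings = dict(bindings)  # copy so the caller's bindings stay untouched
    for p, f in zip(pattern, fact):
        if is_var(p):
            if bindings.setdefault(p, f) != f:
                return None  # variable already bound to something else
        elif p != f:
            return None  # constants disagree
    return bindings

def solve(body, facts, bindings):
    """Yield every set of bindings that satisfies all patterns in body."""
    if not body:
        yield bindings
        return
    for fact in facts:
        extended = match(body[0], fact, bindings)
        if extended is not None:
            yield from solve(body[1:], facts, extended)

def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts can be derived."""
    facts = set(facts)
    while True:
        new = set()
        for head, body in rules:
            for bindings in solve(body, facts, {}):
                derived = tuple(bindings.get(t, t) for t in head)
                if derived not in facts:
                    new.add(derived)
        if not new:
            return facts
        facts |= new

# Made-up facts and one rule: grandparent(X, Z) :- parent(X, Y), parent(Y, Z).
facts = {("parent", "alice", "bob"), ("parent", "bob", "carol")}
rules = [(("grandparent", "X", "Z"), [("parent", "X", "Y"), ("parent", "Y", "Z")])]
closure = forward_chain(facts, rules)
```

After running, `closure` contains the derived fact `("grandparent", "alice", "carol")` alongside the two original facts. Each derived fact can be traced back to the rule and facts that produced it, which is the interpretability property the symbolic side is meant to bring.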

Researchers are also finding ways to refine and check language model outputs using logical constraints. For instance, one idea is to run generated text through a separate set of logic rules, which act as an extra plausibility filter on top of the model's statistical patterns.
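A plausibility filter of this kind might look like the following sketch. It assumes, hypothetically, that each candidate output has already been parsed into a structured claim (a plain dictionary here) and comes with a model score; the parsing step, the field names, the rules, and the scores are all invented for illustration.

```python
def ends_after_start(claim):
    """An employment period cannot end before it begins."""
    return claim["end_year"] >= claim["start_year"]

def known_employer(claim):
    """The employer must appear in a (hypothetical) list of known entities."""
    return claim["employer"] in {"acme", "globex"}

def filter_candidates(candidates, rules):
    """Keep (score, claim) pairs whose claim passes every rule, best score first."""
    passed = [
        (score, claim)
        for score, claim in candidates
        if all(rule(claim) for rule in rules)
    ]
    return sorted(passed, key=lambda pair: pair[0], reverse=True)

# Made-up candidates: the top-scoring one is statistically likely but illogical.
candidates = [
    (0.61, {"employer": "acme", "start_year": 2019, "end_year": 2016}),
    (0.35, {"employer": "acme", "start_year": 2016, "end_year": 2019}),
    (0.04, {"employer": "initech", "start_year": 2016, "end_year": 2019}),
]

accepted = filter_candidates(candidates, [ends_after_start, known_employer])
best_score, best_claim = accepted[0]
```

In this example the highest-scoring candidate is rejected because its dates are inconsistent, so the filter falls back to the second candidate. Because each rule is an ordinary named function, a rejected output can be reported together with the specific constraint it violated.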

In each case, the goal is to guide, restrict, and enhance the pattern-finding abilities of language models with more deliberate symbolic reasoning. Just like humans blend intuitive thinking with logic, the hope is to achieve AI systems that integrate learned statistical correlations with structured symbolic representations.

The end result could be models that display more generalized reasoning abilities, while also producing outputs we can audit, validate, and explain.

Towards Hybrid Intelligence

Ultimately, the aim is to build hybrid systems that integrate the complementary strengths of neural and symbolic AI. Some researchers argue that intelligence emerges from the interplay of two mechanisms:

- Correlation-based pattern recognition that is data-driven and associative.

- Model-based compositional generalization relying on structured representations and explicit rules.

Large transformer networks excel at the former, while symbolic methods specialize in the latter. Combining these two modes of reasoning could thus give rise to more human-like artificial intelligence.

The business implications of such hybrid AI systems are far-reaching. Logical components would allow verifying conclusions, checking ethical compliance, and generating step-by-step explanations. Incorporating domain constraints would reduce data needs and may lead to safer and less environmentally costly systems.

At the same time, retaining differentiable components preserves versatility, allows critiquing and updating symbolic knowledge, and facilitates integrating with downstream machine learning tasks.

Realizing this vision of integrated reasoning poses research challenges. Tradeoffs exist between symbolic interpretability and neural flexibility. Multi-component systems risk bottlenecks that limit end-to-end learning. Architectures that let gradients flow across reasoning layers may be needed.

Nonetheless, the potential payoff of deployable, ethical, and broadly capable AI merits investment in these hybrid systems. Given the enthusiasm around LLMs today, adding connections to symbolic reasoning could be a crucial next step in fulfilling their promise while mitigating risks.

Blending logical rule-based reasoning with modern neural networks could create more capable and reliable AI systems. This combination of human-like symbolic thinking and data-driven pattern recognition represents an exciting path forward, and may yield AI that is more human-compatible and better aligned with human intelligence in adaptability, efficiency, and trustworthiness.
