Artificial intelligence systems like ChatGPT require massive amounts of data and computing power to train. A key bottleneck is memory: holding the model's parameters, along with the extra bookkeeping the training algorithm keeps for each one, strains even the most advanced computing systems.

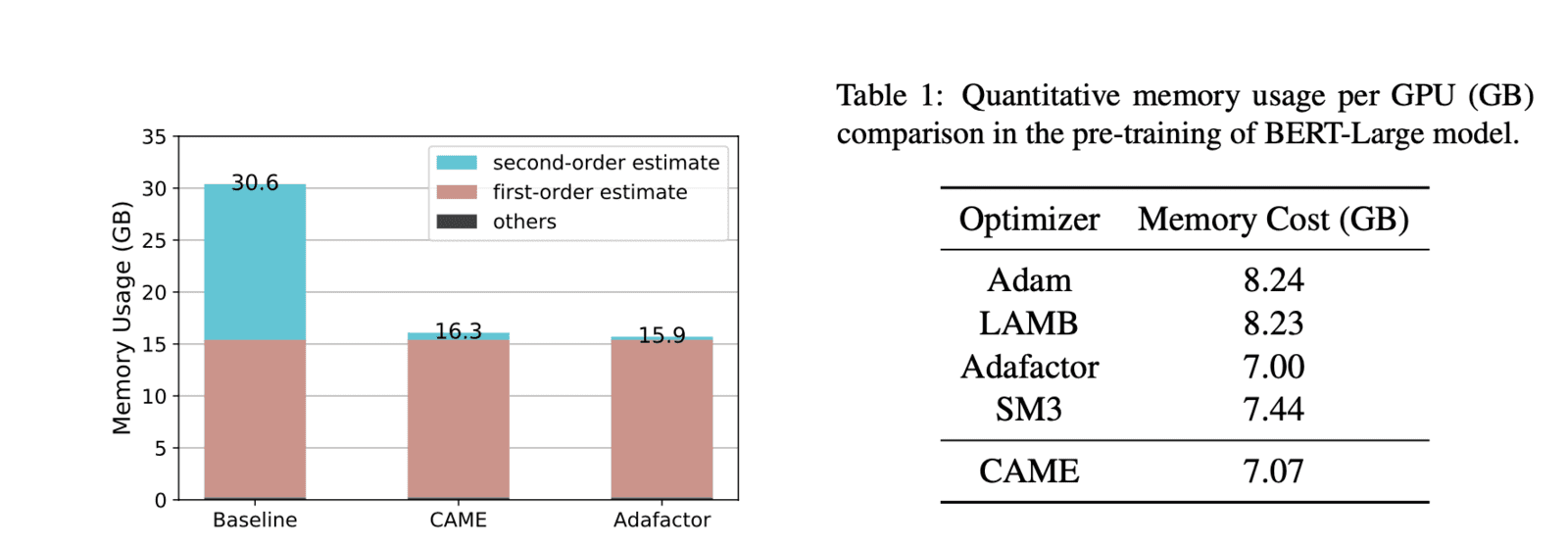

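Researchers from the National University of Singapore and Noah's Ark Lab (Huawei) have developed a new optimizer, the part of the training process that decides how to adjust a model's parameters, that cuts memory requirements with little or no loss in performance. Called CAME (Confidence-guided Adaptive Memory Efficient Optimization), it is reported to match standard optimizers when training models like BERT while using up to about 40% less memory.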

Here's how it works:

Standard optimizers such as Adam keep extra running statistics for every single model parameter, which hogs memory. CAME instead stores compressed "representations" of this information.

It's like compressing a digital photo into a smaller JPEG file: you store far less data and lose only a little detail. CAME applies similar compression to the optimizer's per-parameter statistics, not to the model itself or the training data.
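To make the compression idea concrete, here is a minimal sketch. It assumes the row-and-column factorization used by factored optimizers such as Adafactor, which CAME builds on; the function names are hypothetical and this is not the authors' code. Instead of one statistic per entry of a weight matrix, it keeps one per row and one per column and rebuilds an approximation on demand.

```python
def full_state_size(rows, cols):
    # One statistic per matrix entry, as in Adam-style optimizers.
    return rows * cols


def factored_state_size(rows, cols):
    # One statistic per row plus one per column.
    return rows + cols


def factor(matrix):
    # Summarize a non-negative matrix by its row sums and column sums.
    row_sums = [sum(row) for row in matrix]
    col_sums = [sum(col) for col in zip(*matrix)]
    return row_sums, col_sums


def reconstruct(row_sums, col_sums):
    # Rank-1 approximation: entry (i, j) ~ row_sums[i] * col_sums[j] / total.
    total = sum(row_sums)
    return [[r * c / total for c in col_sums] for r in row_sums]


if __name__ == "__main__":
    print(full_state_size(1024, 4096))      # 4194304
    print(factored_state_size(1024, 4096))  # 5120
    rows, cols = factor([[1.0, 2.0], [2.0, 4.0]])
    print(reconstruct(rows, cols))
```

The reconstruction is exact only when the matrix happens to have this simple rank-1 structure. In general it is an approximation, which is why the compression is lossy and why a correction step is useful.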

This compressed storage alone saves a lot of memory, but the compression can introduce errors that make training unstable. So CAME goes further: it checks how far each proposed update strays from the recent trend and shrinks the updates it has low confidence in. Just as we would trust a glitched JPEG photo less, CAME gives noisy updates less weight.

This confidence-based adjustment keeps training stable and accurate without giving back the memory savings. Across multiple tests, CAME reportedly matched or beat standard AI training methods while using 15-40% less memory.
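The confidence idea can also be sketched in a few lines. This is a simplified illustration, not the published algorithm: it works on a plain list of values, omits the compression step, and uses hypothetical names and default values (`confidence_step`, `beta`, `eps`). Rather than discarding anything outright, it scales each update down in proportion to how far it deviates from the running average of past updates.

```python
import math


def confidence_step(update, momentum, beta=0.9, eps=1e-8):
    """Return (new_momentum, confidence, step) for one parameter vector.

    update   -- the raw update proposed at this training step
    momentum -- running average of previous updates
    """
    # Blend the new update into the running average.
    new_momentum = [beta * m + (1 - beta) * u
                    for m, u in zip(momentum, update)]
    # Instability: squared gap between the raw update and the average.
    instability = [(u - m) ** 2 + eps
                   for u, m in zip(update, new_momentum)]
    # Low instability means high confidence, and vice versa.
    confidence = [1.0 / math.sqrt(s) for s in instability]
    # The applied step is the averaged update weighted by confidence.
    step = [m * c for m, c in zip(new_momentum, confidence)]
    return new_momentum, confidence, step


if __name__ == "__main__":
    # First entry agrees with its history; second entry is an outlier.
    _, conf, _ = confidence_step([1.1, 5.0], [1.0, 1.0])
    print(conf[0] > conf[1])  # True
```

In a real optimizer the resulting step would still be multiplied by a learning rate and usually clipped, so only the relative weighting matters here.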

The researchers believe CAME paves the way to train more advanced AI with fewer computing resources. With memory savings, companies can train bigger models faster without expensive infrastructure upgrades.

The techniques behind CAME are also not tied to a particular model size, so in principle they can be applied to models of any scale. As AI systems grow ever larger, CAME should remain a robust, efficient training method.

The era of trillion-parameter models demands unprecedented computing power. Techniques like CAME can make that power go much further. By using less memory, AI training could run on less hardware and consume less energy and computing time, taking us closer to the advanced systems we imagine.

Business leaders should track algorithms like CAME that promise more efficient use of resources. Easing the computing strains of AI is key to unlocking its full potential. CAME represents an important step toward more affordable and accessible AI development.

Sources: