A new study reveals that detectors of machine-generated text are troublingly biased against non-native English speakers. The findings raise important questions about AI fairness and underscore the need for more inclusive technologies.

With models like ChatGPT attracting millions of users, there is a growing risk of AI-generated text being passed off as human-written. Several detectors aim to distinguish AI-generated content from human writing, but their effectiveness and fairness are uncertain. This study by researchers at Stanford evaluates popular detectors on essays written by native English-speaking 8th graders and on essays written by Chinese learners of English.

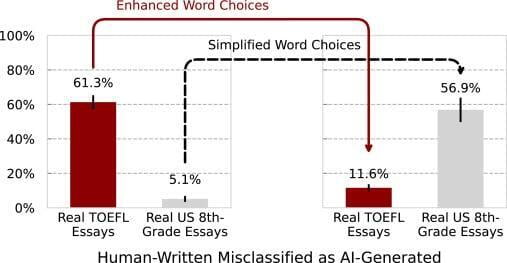

Alarmingly, over half of the non-native essays were mislabeled as AI-generated, while the native essays were accurately classified. The study shows that detectors consistently penalize the more limited vocabulary and lower linguistic complexity typical of non-native writing. When the researchers used ChatGPT to enrich the non-native essays with more native-like word choices, misclassifications plummeted.

The implications are stark. As detectors become more stringent, non-native authors may have to rely on AI editing just to avoid false accusations of cheating or of spreading fake news. This risks further marginalizing diverse voices in education, media, and public discourse. The study highlights the urgent need to address bias in AI systems that increasingly mediate communication.

The researchers also demonstrate a simple technique for bypassing detectors: editing machine-generated essays and abstracts so that they evade detection. This casts doubt on current methods that rely heavily on statistical measures like perplexity, which roughly gauges how predictable a text is to a language model. More robust techniques and human-in-the-loop validation will likely be imperative.
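To make the perplexity idea concrete, here is a minimal sketch of a detector that flags low-perplexity text as "AI". It is an illustration only, not the method used by the study or by any real detector: real tools score text with a large language model, while this toy version swaps in an add-one-smoothed unigram model. The function names, the tiny reference corpus, and the threshold are all hypothetical.

```python
import math
from collections import Counter


def unigram_perplexity(text, reference_counts, vocab_size):
    """Perplexity of `text` under an add-one-smoothed unigram model.

    Toy stand-in (assumption): real detectors use a large language model.
    """
    tokens = text.lower().split()
    if not tokens:
        raise ValueError("text must contain at least one token")
    total = sum(reference_counts.values())
    log_prob = 0.0
    for tok in tokens:
        # Add-one smoothing gives unseen words a small non-zero probability.
        p = (reference_counts.get(tok, 0) + 1) / (total + vocab_size)
        log_prob += math.log(p)
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


def flag_as_ai(text, reference_counts, vocab_size, threshold):
    """Hypothetical decision rule: predictable (low-perplexity) text is flagged."""
    return unigram_perplexity(text, reference_counts, vocab_size) < threshold


# Made-up reference corpus standing in for the model's training data.
counts = Counter("the cat sat on the mat and the dog sat on the rug".split())
vocab_size = len(counts) + 1  # one extra slot for unseen words

plain = "the cat sat on the mat"                 # common, predictable wording
varied = "a feline reclined upon the tapestry"   # rarer word choices

for essay in (plain, varied):
    ppl = unigram_perplexity(essay, counts, vocab_size)
    print(f"{essay!r}: perplexity={ppl:.2f}, "
          f"flagged={flag_as_ai(essay, counts, vocab_size, threshold=10.0)}")
```

In this sketch the plainly worded sentence scores lower perplexity and is flagged, while the sentence with rarer vocabulary is not. That mirrors the pattern the study describes: simpler word choice looks "machine-like" to a perplexity-based rule, and enriching the vocabulary moves the text out of the flagged range.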

For business leaders, this study is a wake-up call about the emerging risks of unfairness as AI proliferates. Consider recruiting and hiring, where text analysis aids decision-making. Bias against non-native writing could lead to qualified candidates being unjustly screened out. Proactively auditing for fairness and enabling redress will be key.

Customer service chatbots present another concern. If detectors disproportionately flag non-native customers as “bots,” will those customers receive lower-quality service? Fostering trust requires ensuring AI interacts equitably with all users.

As for content moderation, faulty AI could censor non-native writers sharing ideas or reporting on social media. Humans must remain in the loop to prevent silencing marginalized voices.

While detectors aim to manage risks of AI content, overlooking their own limitations poses risks of disenfranchisement. Study co-author James Zou states: “Our findings emphasize the need for increased focus on the fairness and robustness of these detectors.”

Indeed, achieving AI’s promise requires centering inclusion from the start, not as an afterthought. Building balanced training data, testing for disparate impacts, and enabling redress of unfair outcomes will be key priorities for responsible innovation.
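As one way to picture "testing for disparate impacts", the sketch below compares how often a detector wrongly flags human-written text from different groups of writers. It is a minimal illustration under stated assumptions: the function names and record layout are hypothetical, and the sample records are invented for demonstration, not data from the study.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Per-group share of human-written texts that were flagged as AI.

    `records` is an iterable of (group, is_human_written, flagged_as_ai)
    tuples. This layout is an assumption made for the example.
    """
    humans = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_human_written, flagged_as_ai in records:
        if not is_human_written:
            continue  # false positives only concern human-written text
        humans[group] += 1
        if flagged_as_ai:
            flagged[group] += 1
    return {group: flagged[group] / humans[group] for group in humans}


def rate_gap(rates, group_a, group_b):
    """Difference in false positive rate between two groups."""
    return rates[group_a] - rates[group_b]


# Invented audit sample: 10 human-written essays per group.
records = (
    [("non_native", True, True)] * 6
    + [("non_native", True, False)] * 4
    + [("native", True, True)] * 1
    + [("native", True, False)] * 9
)

rates = false_positive_rates(records)
print(rates)
print("gap:", rate_gap(rates, "non_native", "native"))
```

A large gap between groups is a signal to investigate before the detector is used in decisions, and to make sure people who are flagged have a route to contest the result.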

The rapid pace of AI progress demands proactive engagement on ethics and governance. Thoughtful development today will lead to more just and empowering outcomes as these technologies continue transforming society.
