Blog tagged as Responsible AI

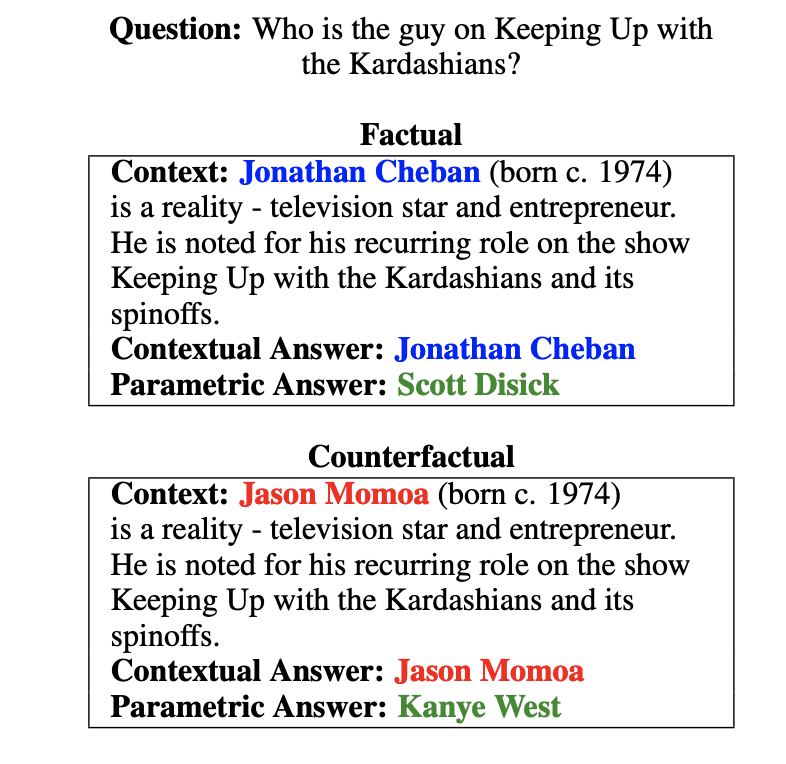

LLMs currently face significant challenges when it comes to truthfulness. Understanding these limitations is essential for any business considering the use of LLMs.

Ines Almeida

29.04.24 01:00 PM - Comment(s)

We explore the current state of responsible AI, examining the lack of standardized evaluations for LLMs, the discovery of complex vulnerabilities in these models, the growing concern among businesses about AI risks, and the challenges posed by LLMs outputting copyrighted material.

Ines Almeida

29.04.24 11:19 AM - Comment(s)

A new study from Harvard University reveals how LLMs can be manipulated to boost a product's visibility and ranking in recommendations.

Ines Almeida

15.04.24 02:00 PM - Comment(s)

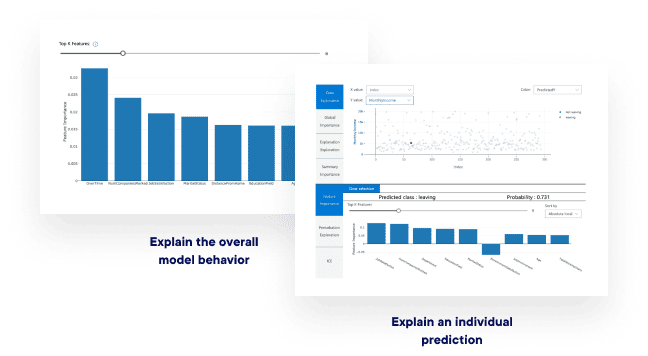

InterpretML is a valuable tool for unlocking the power of interpretable AI in traditional machine learning models. While it may have limitations when it comes to directly interpreting LLMs, the principles of interpretability and transparency remain crucial in the age of generative AI.

Ines Almeida

04.04.24 12:45 PM - Comment(s)

It is crucial for business leaders to understand the limitations and potential pitfalls of current approaches to measuring AI capabilities.

Ines Almeida

03.04.24 10:42 AM - Comment(s)

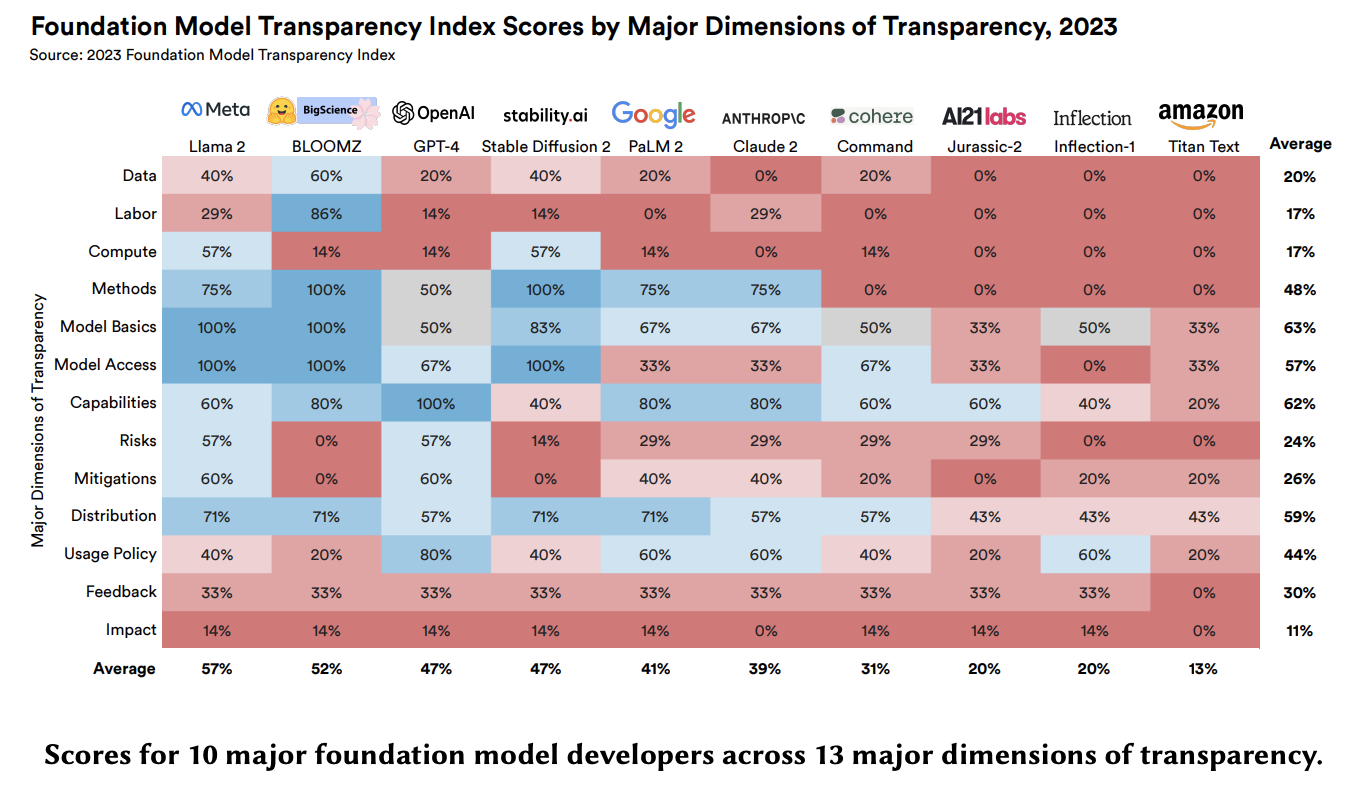

A recent critique calls into question a prominent AI transparency benchmark, illustrating the challenges in evaluating something as complex as transparency.

Ines Almeida

01.11.23 12:07 PM - Comment(s)

AI experts Alexandra Luccioni and Anna Rogers take a critical look at LLMs, analyzing common claims and assumptions while identifying issues and proposing ways forward.

Ines Almeida

16.08.23 09:04 AM - Comment(s)

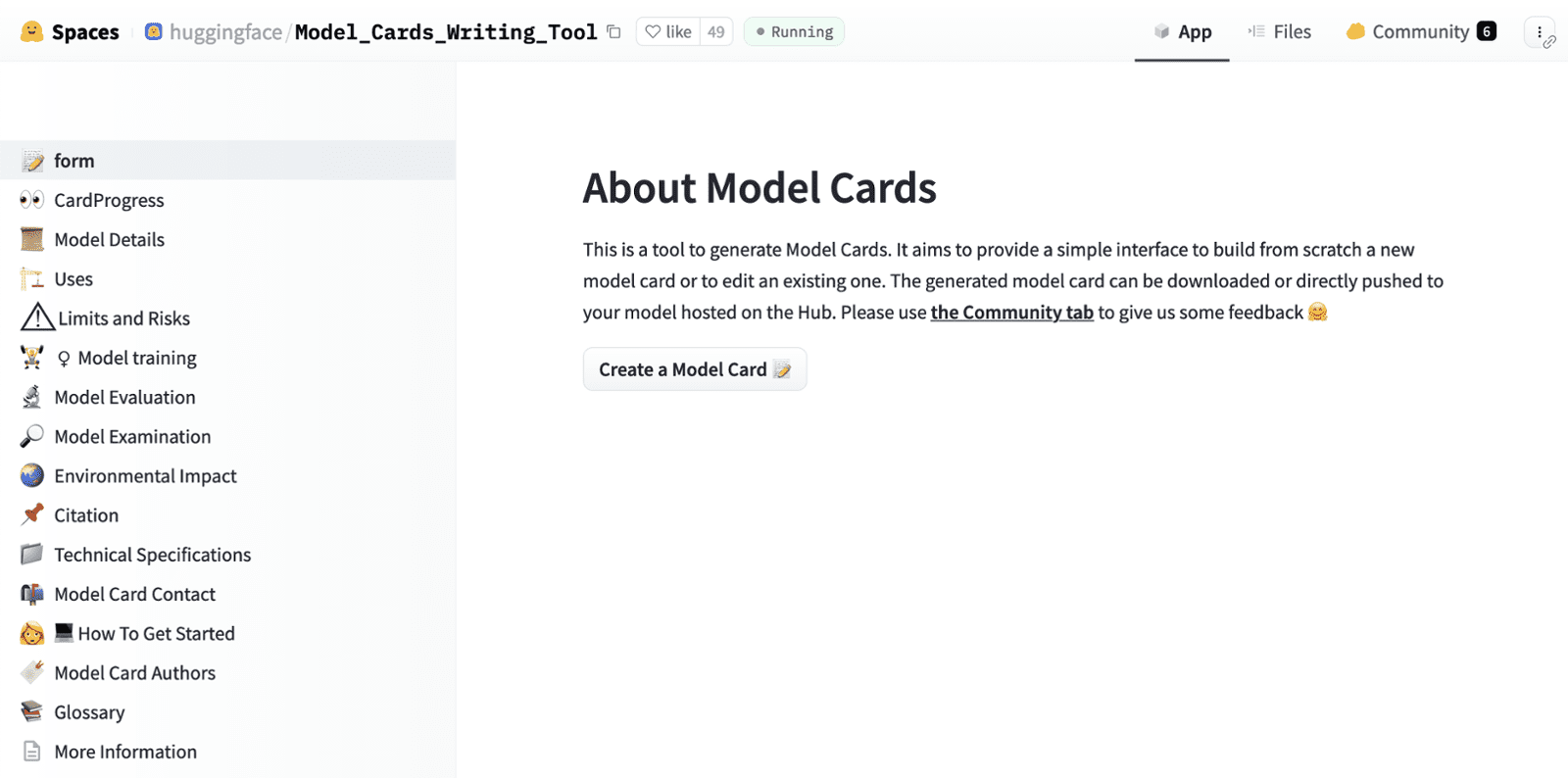

In 2019, a research paper proposed "model cards" as a way to increase transparency into AI systems and mitigate their potential harms.

Ines Almeida

13.08.23 10:39 PM - Comment(s)

A thought-provoking paper from computer scientists raises important concerns about the AI community's pursuit of ever-larger language models.

Ines Almeida

13.08.23 09:46 PM - Comment(s)

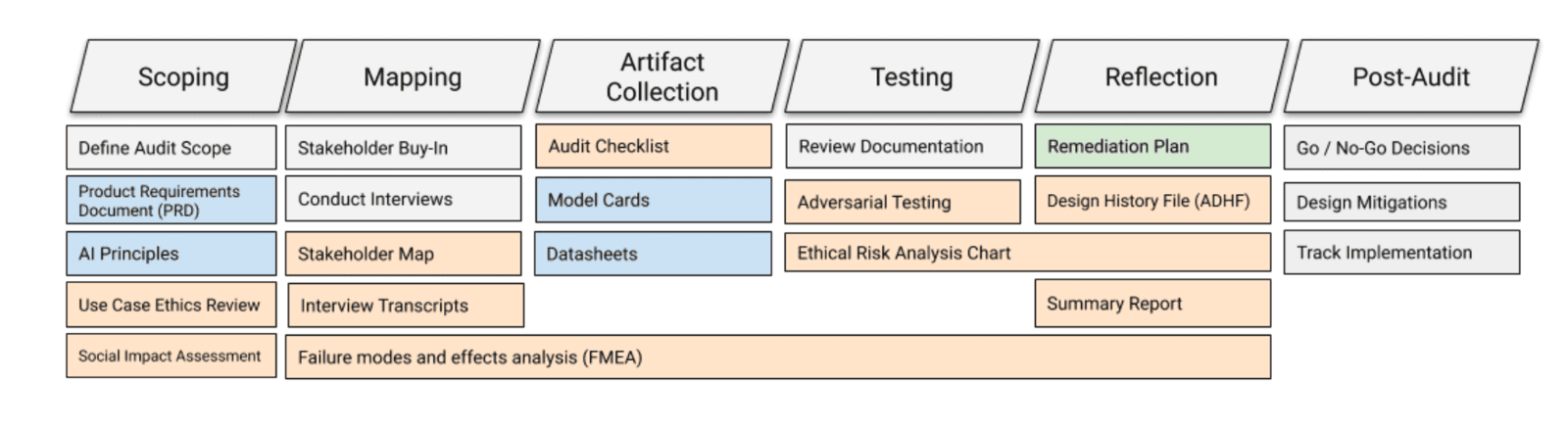

A research paper proposes formal internal audits as a mechanism for technology companies to ensure their artificial intelligence (AI) aligns with ethical priorities before deployment.

Ines Almeida

13.08.23 08:31 PM - Comment(s)

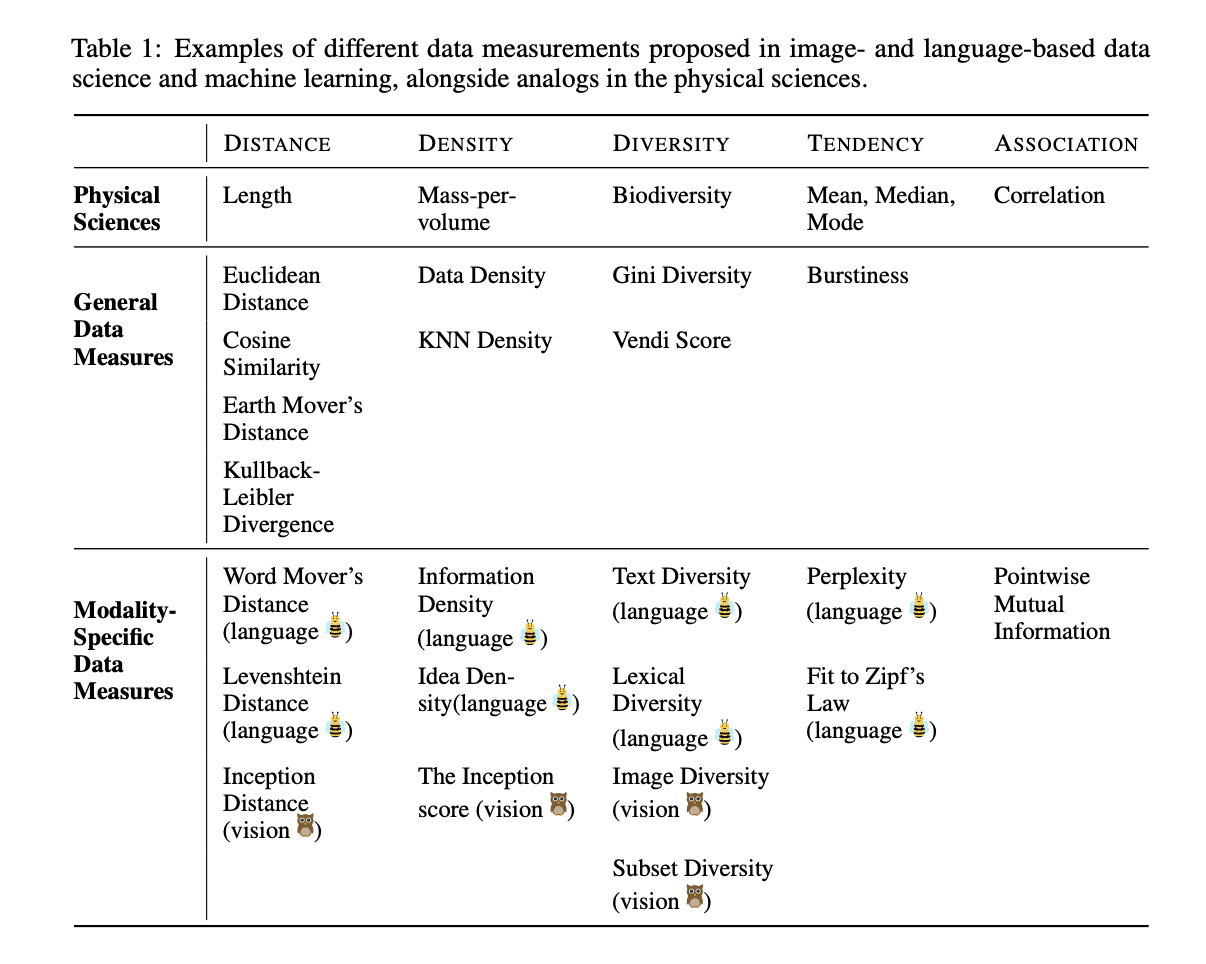

A new perspective paper argues for "measuring data" as a critical task to advance responsible AI development. Just as physical objects can be measured, data used to train AI systems should also be quantitatively analyzed to understand its composition.

Ines Almeida

13.08.23 08:14 PM - Comment(s)

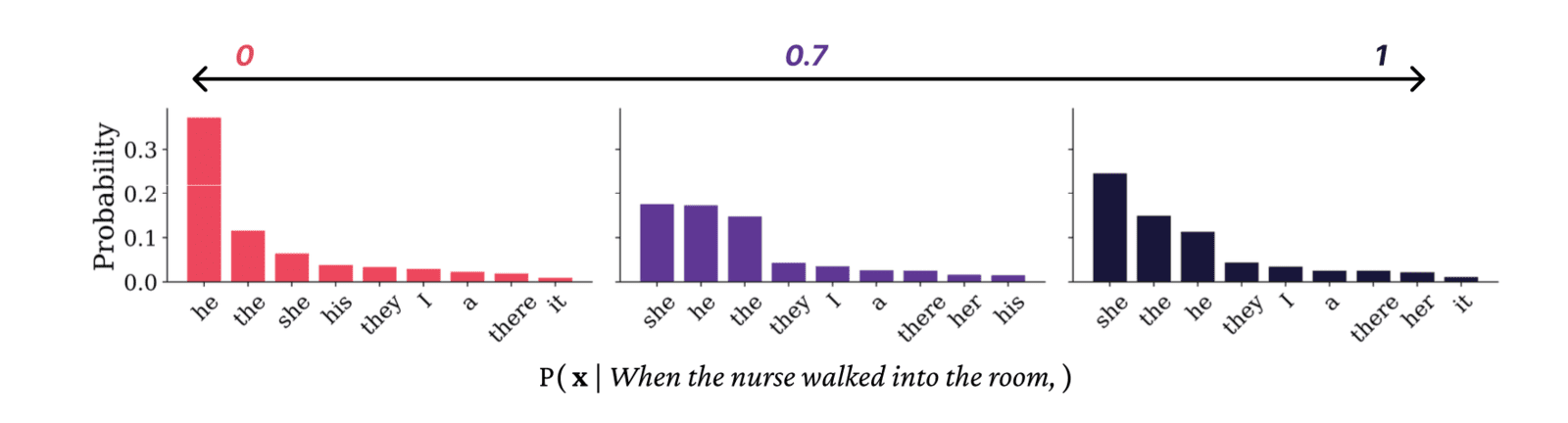

A study from AI researchers at OpenAI demonstrates how large language models, such as those that power chatbots, can be adapted to reflect specific societal values through a simple "fine-tuning" process.

Ines Almeida

13.08.23 08:03 PM - Comment(s)

Ines Almeida

13.08.23 12:31 PM - Comment(s)

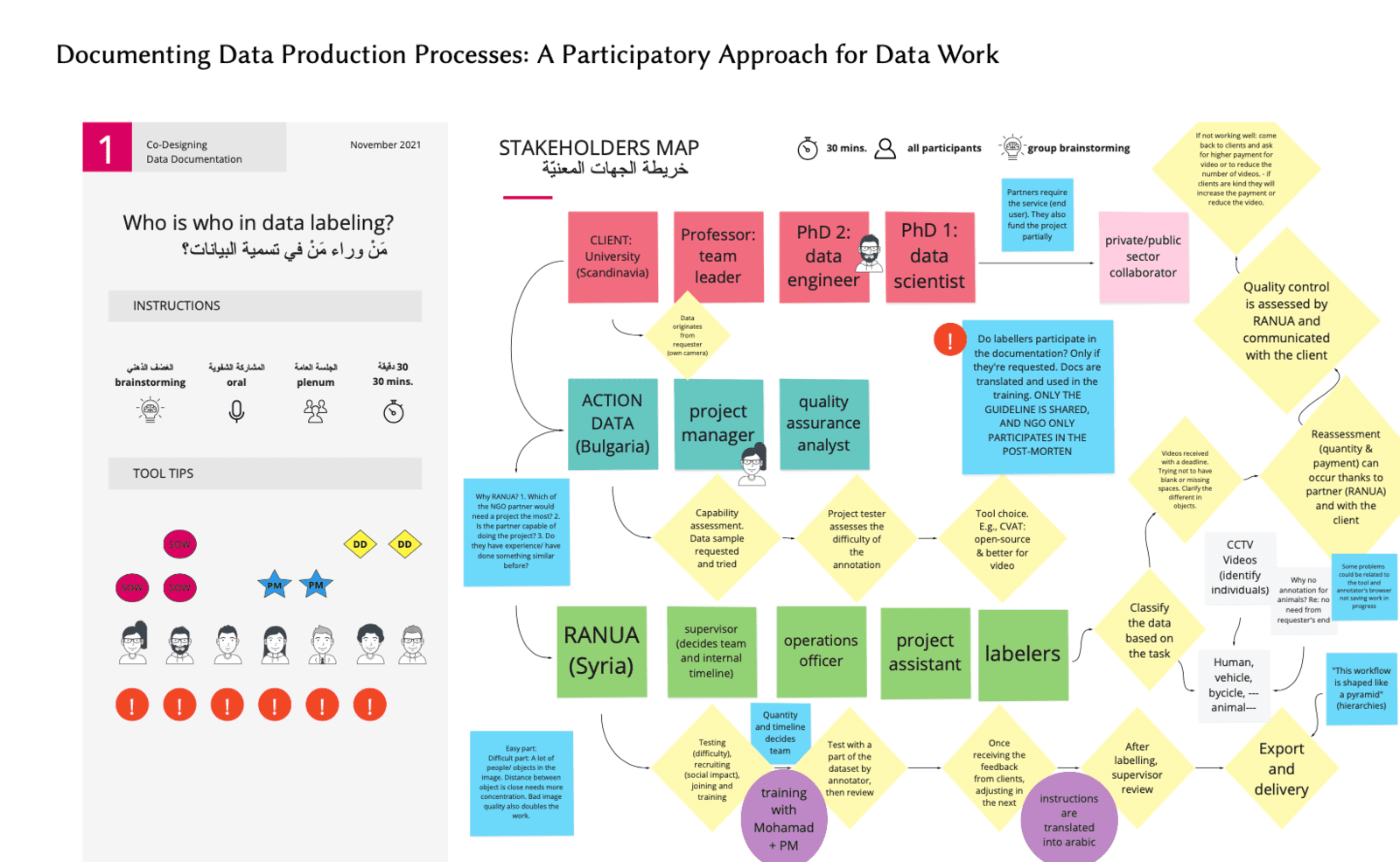

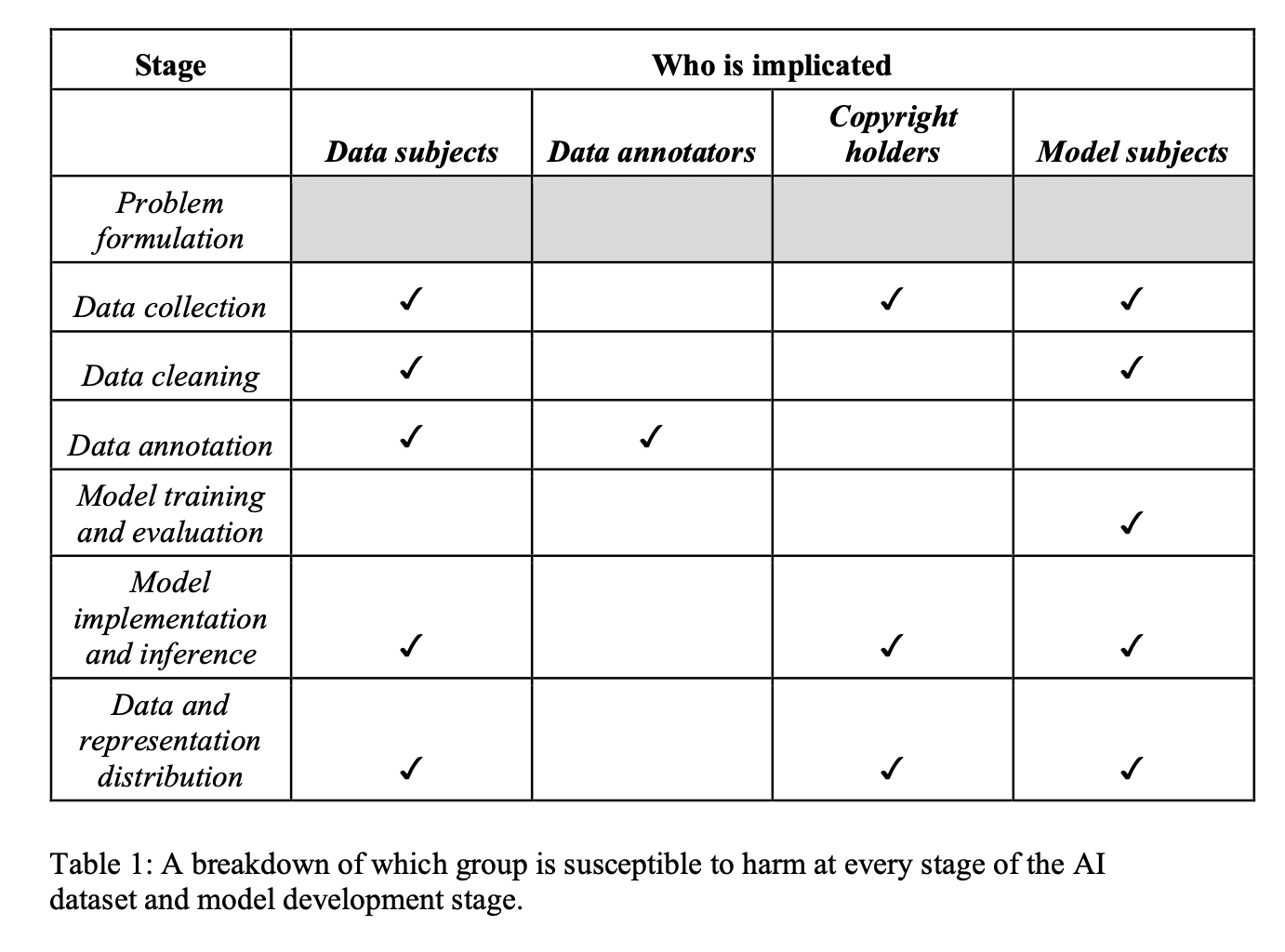

In a recent paper, researchers Mehtab Khan and Alex Hanna highlight the need for greater scrutiny, transparency, and accountability in how massive datasets for machine learning models are created.

Ines Almeida

13.08.23 11:51 AM - Comment(s)

Machine learning models rely heavily on their training datasets and inherit those datasets' biases and limitations. This research proposes "datasheets for datasets" to increase transparency and mitigate risks.

Ines Almeida

13.08.23 11:21 AM - Comment(s)

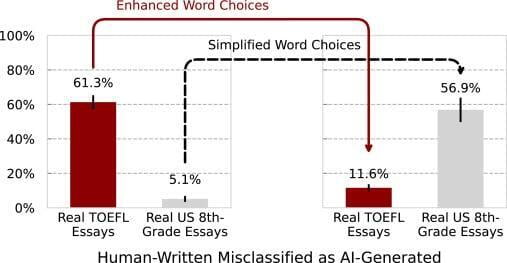

A new study reveals troubling bias against non-native English speakers in AI detectors of machine-generated text. The findings raise important questions around AI fairness and underscore the need for more inclusive technologies.

Ines Almeida

13.08.23 05:47 AM - Comment(s)

Ines Almeida

12.08.23 09:22 AM - Comment(s)

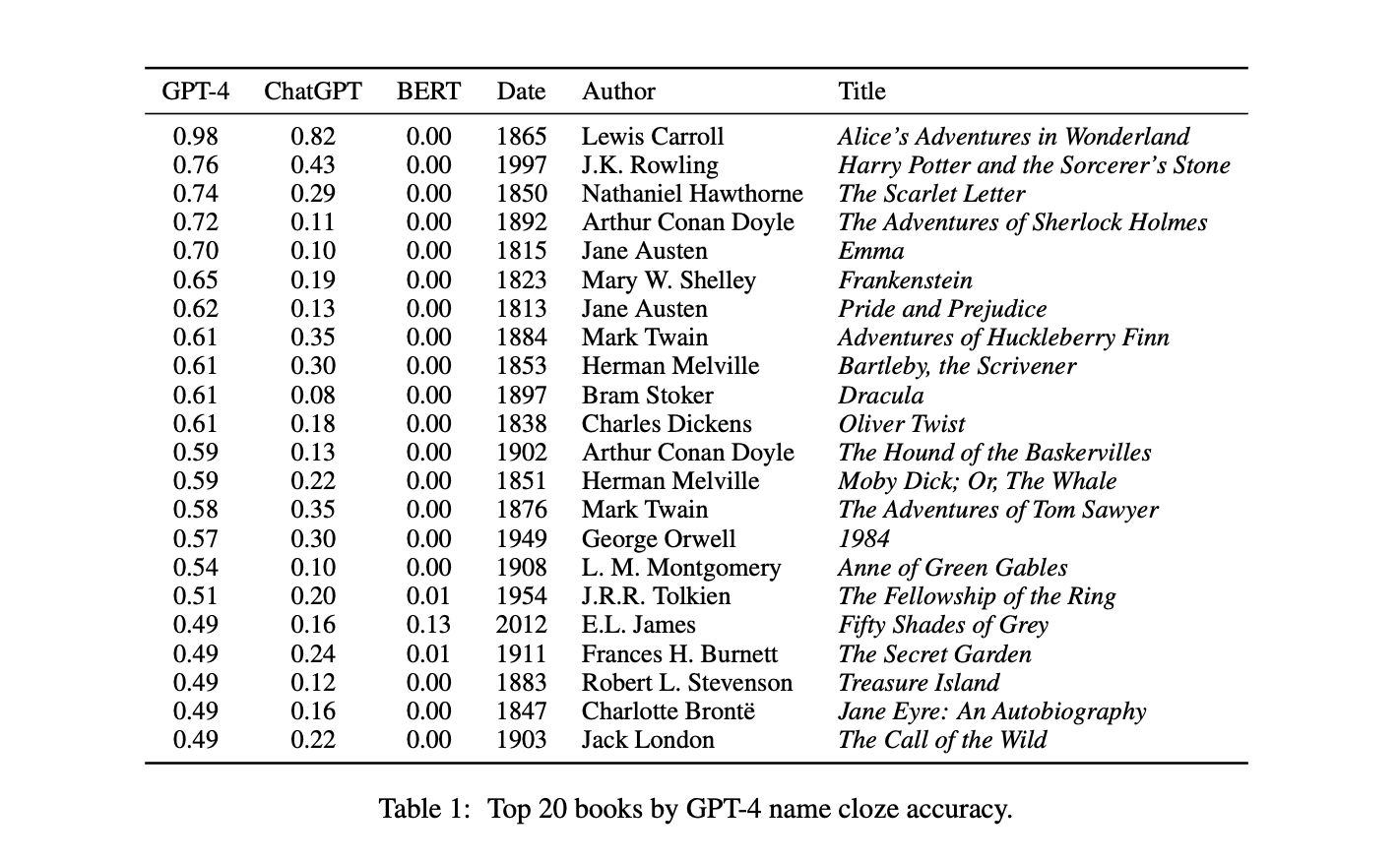

New research from the University of California, Berkeley sheds light on one slice of large language models' knowledge: which books they have "read" and memorized. The study uncovers systematic biases in which texts AI systems know most about.

Ines Almeida

10.08.23 08:03 AM - Comment(s)

Backpack models have an internal structure that is more interpretable and controllable than that of existing models like BERT and GPT-3.

Ines Almeida

10.08.23 07:59 AM - Comment(s)