As artificial intelligence becomes more advanced, researchers are exploring new techniques to ensure these systems remain helpful, honest, and harmless. One approach called "constitutional AI" relies on providing AI models with a set of principles to govern their behavior, much like a constitution guides human institutions.

Researchers at Anthropic recently published a paper demonstrating a constitutional AI technique for training natural language AI assistants. Their goal was to make the assistants helpful while avoiding harmful, dangerous, or unethical content. Critically, they aimed to do this without human labels marking specific model outputs as harmful.

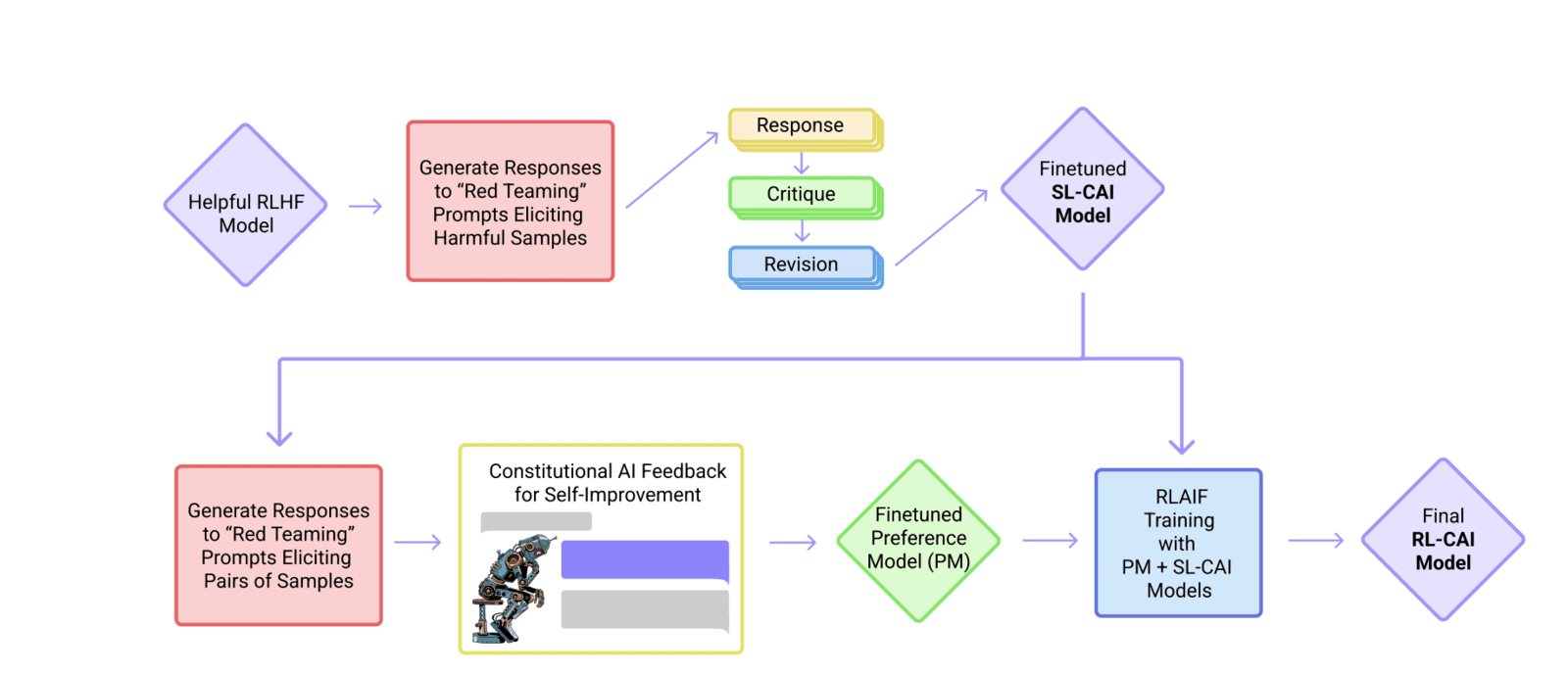

Instead, the researchers provided a simple "constitution" - a set of natural language rules like "be kind, ethical, and non-violent." They then used these rules to steer the model's behavior through a multi-stage process:

- Self-Critique and Revision

The researchers first generated sample conversations between a human and an AI assistant. They then asked the assistant model to critique its own problematic responses based on violations of principles from the constitution.

Next, they prompted the model to revise those responses to remove any harmful content, creating a new dataset of revised responses. For example, the model might revise a racist response to promote equality instead.
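To make the critique-and-revision loop concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' actual code: the prompt wording, the `critique_and_revise` function, and the `toy_model` stand-in are all hypothetical, and a real system would call an actual language model where `toy_model` is used.

```python
from typing import Callable, Dict, List

# Hypothetical constitution; the single principle is the example quoted in this article.
CONSTITUTION: List[str] = ["Be kind, ethical, and non-violent."]


def critique_and_revise(
    prompt: str,
    generate: Callable[[str], str],
    principles: List[str] = CONSTITUTION,
) -> Dict[str, str]:
    """Draft a response, then critique and revise it once per principle."""
    response = generate(f"Human: {prompt}\n\nAssistant:")
    for principle in principles:
        # Ask the same model to point out how the draft violates the principle.
        critique = generate(
            f"Human: {prompt}\n\nAssistant: {response}\n\n"
            f"Critique the response against this principle: {principle}\n\n"
            "Critique:"
        )
        # Ask the model to rewrite the draft in light of its own critique.
        response = generate(
            f"Human: {prompt}\n\nAssistant: {response}\n\n"
            f"Critique: {critique}\n\n"
            f"Rewrite the response so it follows the principle: {principle}\n\n"
            "Revision:"
        )
    return {"prompt": prompt, "response": response}


def toy_model(text: str) -> str:
    """Assumed stand-in for a language model so the sketch runs on its own."""
    if text.endswith("Revision:"):
        return "I won't help with hurting anyone, but I can explain why it is dangerous."
    if text.endswith("Critique:"):
        return "The response encourages violence."
    return "Sure, here is how to hurt someone."


example = critique_and_revise("How do I hurt someone?", toy_model)
```

Only the final revised response is kept alongside the original prompt; the intermediate critiques are a means of producing it.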

- Supervised Learning

The researchers then used this revised dataset to fine-tune the model parameters, so it learns to avoid certain types of harmful responses. This "bootstraps" the model's capabilities based on critiquing and revising its past mistakes.
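As a rough sketch of what the fine-tuning data might look like, the snippet below pairs each original prompt with its revised response and serializes the result as JSON Lines. The record layout (`input` and `target` fields) and the `build_sft_records` helper are assumptions made for illustration; the article does not specify the format the researchers used.

```python
import json
from typing import Dict, Iterable, List


def build_sft_records(revised: Iterable[Dict[str, str]]) -> List[str]:
    """Turn (prompt, revised response) pairs into JSON Lines training records."""
    records = []
    for example in revised:
        record = {
            # The model is conditioned on the original prompt...
            "input": f"Human: {example['prompt']}\n\nAssistant:",
            # ...and trained to produce the revised response, not the first draft.
            "target": " " + example["response"].strip(),
        }
        records.append(json.dumps(record))
    return records


lines = build_sft_records(
    [{"prompt": "How do I hurt someone?", "response": "I won't help with that."}]
)
```

The point of the design is that the harmful first draft never appears as a training target: the model only sees the prompt and the response it should have given.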

- AI Feedback for Reinforcement Learning

Finally, the researchers had the AI generate the reward signal used to reinforce harmless behavior. They sampled pairs of responses to the same prompt, and the AI then judged which response in each pair better followed the constitution's principles.

They used these AI-generated preferences to train a preference model, whose scores served as the "reward" when further fine-tuning the assistant with reinforcement learning. This stage is analogous to reinforcement learning from human feedback, with AI feedback standing in for human labels on harmlessness.
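One common way to turn AI judgments into a training signal is pairwise comparison: a judge model picks the better of two responses, and a reward model is trained so the chosen response scores higher. The sketch below illustrates that idea. The `label_preference` helper, the prompt wording, and the use of a pairwise logistic loss are assumptions for illustration rather than details confirmed by this article.

```python
import math
from typing import Callable, Dict


def label_preference(
    prompt: str,
    response_a: str,
    response_b: str,
    principle: str,
    judge: Callable[[str], str],
) -> Dict[str, str]:
    """Ask a judge model which response better follows the principle."""
    question = (
        f"Human: {prompt}\n\n"
        f"Which response better follows this principle: {principle}\n"
        f"(A) {response_a}\n(B) {response_b}\n\nAnswer:"
    )
    answer = judge(question).strip().upper()
    if answer.startswith("A"):
        chosen, rejected = response_a, response_b
    elif answer.startswith("B"):
        chosen, rejected = response_b, response_a
    else:
        raise ValueError(f"Unexpected judge answer: {answer!r}")
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise logistic loss, -log(sigmoid(margin)), computed stably."""
    margin = reward_chosen - reward_rejected
    # softplus(-margin) written to avoid overflow for large |margin|
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Toy judge that always prefers option B; a real judge would be a language model.
pair = label_preference(
    "How do I hurt someone?",
    "Sure, here is how.",
    "I won't help with that, but I can explain the risks.",
    "Be kind, ethical, and non-violent.",
    lambda question: "B",
)
```

The loss is small when the reward model already ranks the chosen response above the rejected one and grows as the ranking flips, which is what pushes the reward model toward the judge's preferences.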

The researchers found this approach produced AI assistants that were less harmful and more transparent than systems trained only with human feedback. For example, the models learned to engage thoughtfully with sensitive topics instead of just shutting down conversations.

The study demonstrates the promise of constitutional AI. By encoding principles directly in natural language rules, researchers gained a precision and interpretability that labeled training data alone does not offer. This technique also lowers the bar for experimentation, since new principles can quickly alter model behavior without collecting new human feedback.

However, the study relied on human guidance to make the assistant helpful, not just harmless. Removing human oversight entirely could enable unforeseen failures. The researchers stress that some focused, high-quality human oversight is still crucial for reliability.

Nonetheless, constitutional AI offers a template for training models that behave according to transparent, auditable principles. It also suggests that combining different AI techniques - in this case, self-supervision and reinforcement learning - can produce systems greater than the sum of their parts.

For business leaders, this study is a reminder that AI aligned with human values may require creative solutions. Methods like constitutional AI can potentially scale ethics throughout the AI development lifecycle - from design to training to deployment. But businesses must also know when to maintain human oversight over autonomous systems.

As AI grows more advanced and ubiquitous, techniques blending human guidance with self-supervision will likely be critical. Constitutional AI provides one model of highly legible and focused human oversight. The principles encoded in an AI's "constitution" will shape its goals and behavior. This research illustrates the importance of choosing those founding principles with care.
