Imagine you ask your phone "Who wrote the song Hello by Adele?" and it gives you an incorrect answer, insisting the song is by Taylor Swift. The mistake shows that an AI system can confuse what it memorized during training with facts supplied from outside sources.

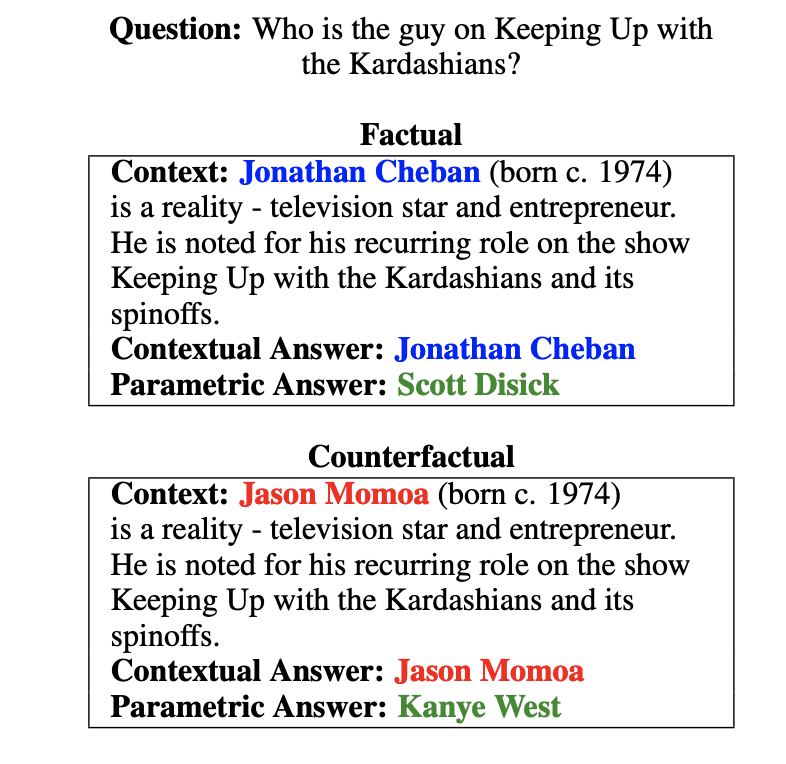

Researchers want to fix this issue to make AI assistants more helpful and honest. Their solution: build question-answering (QA) systems that catch knowledge gaps and avoid misleading users by teaching the system to provide two responses:

- The factual answer based on given information (e.g. Adele)

- What it recalls from its own memory, meaning what it learned during training (e.g. Taylor Swift)

This highlights any mismatches between its training knowledge and external data. It's like when we say "Hmm, I thought X, but the website says Y."
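To make the two-response idea concrete, here is a minimal sketch of how an application might read such an output and flag a mismatch. The `contextual: ... parametric: ...` output string, the `TwoPartAnswer` class, and the `parse_two_part_answer` function are assumptions made for illustration, not the researchers' actual code.

```python
import re
from dataclasses import dataclass


@dataclass
class TwoPartAnswer:
    """Hypothetical container for the two responses described above."""
    contextual: str  # answer based on the given information
    parametric: str  # answer the model recalls from training

    @property
    def mismatch(self) -> bool:
        # Compare case-insensitively so "adele" and "Adele" count as equal.
        return self.contextual.strip().lower() != self.parametric.strip().lower()


# Assumed output format: "contextual: <answer> parametric: <answer>"
_PATTERN = re.compile(
    r"contextual:\s*(.*?)\s*parametric:\s*(.*)", re.IGNORECASE | re.DOTALL
)


def parse_two_part_answer(raw: str) -> TwoPartAnswer:
    """Split a raw model output string into its two answers."""
    match = _PATTERN.fullmatch(raw.strip())
    if match is None:
        raise ValueError(f"Output is not in the expected two-part format: {raw!r}")
    return TwoPartAnswer(match.group(1).strip(), match.group(2).strip())


answer = parse_two_part_answer("contextual: Adele parametric: Taylor Swift")
if answer.mismatch:
    print(f"The source says {answer.contextual}, but I recalled {answer.parametric}.")
```

Keeping the two answers in separate fields means the "I thought X, but the website says Y" check is a simple comparison rather than something the application has to guess from free text.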

The team trained the AI model by creating quizzes with tricky examples:

- Swapping names in passages to elicit different responses from the context vs. the model's recollection

- Removing passages altogether so the system must say "I don't know" for the passage-based answer
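The two tricks above can be sketched in a few lines. This is a simplified illustration rather than the team's actual pipeline: the `Example` class, the helper names, the `unanswerable` marker, and the target string format are all assumptions, and the original answer is used as a stand-in for what the model is expected to recall.

```python
from dataclasses import dataclass

UNANSWERABLE = "unanswerable"  # assumed marker for "I don't know"


@dataclass
class Example:
    """Hypothetical training example: inputs plus the two-part target."""
    question: str
    context: str
    target: str


def format_target(contextual: str, parametric: str) -> str:
    # Assumed target format holding both answers in one string.
    return f"contextual: {contextual} parametric: {parametric}"


def make_counterfactual(question: str, context: str, answer: str, substitute: str) -> Example:
    """Swap the answer in the passage so context and memory disagree."""
    if answer not in context:
        raise ValueError("The original answer must appear in the passage.")
    swapped = context.replace(answer, substitute)
    # The passage now supports the substitute; memory should still hold the original.
    return Example(question, swapped, format_target(substitute, answer))


def make_empty_context(question: str, answer: str) -> Example:
    """Remove the passage so the passage-based answer is 'I don't know'."""
    return Example(question, "", format_target(UNANSWERABLE, answer))


question = "When was the movie released?"
passage = "The movie was released in December 2022."

swapped = make_counterfactual(question, passage, "December 2022", "July 2022")
print(swapped.context)
print(swapped.target)
print(make_empty_context(question, "December 2022").target)
```

A plain string replace is the simplest possible swap; a real dataset would need more care, for example choosing a substitute of the same type (a date for a date, a person for a person).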

After this special training, the model was better at telling its own knowledge apart from the given facts, which in turn made its answers more accurate and truthful.

Say you ask about a movie release date. The system can now respond:

"The article says July 2022. But I thought it was December 2022."

Showing both answers makes the disagreement visible, so the user is not misled by whichever one turns out to be wrong.
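As a rough sketch of how an assistant could turn the two answers into a reply like the one above, consider the function below. The name `render_reply`, the `unanswerable` marker, and the exact wording of each reply are assumptions chosen for this example.

```python
UNANSWERABLE = "unanswerable"  # assumed marker for a missing answer


def render_reply(contextual: str, parametric: str, source: str = "The article") -> str:
    """Build a user-facing sentence from the two answers."""
    has_context = contextual != UNANSWERABLE
    has_memory = parametric != UNANSWERABLE

    if not has_context and not has_memory:
        return "I don't know."
    if not has_context:
        # No usable source: fall back to memory, and say so.
        return f"I wasn't given a source for this, but I recall {parametric}."
    same = contextual.strip().lower() == parametric.strip().lower()
    if not has_memory or same:
        # Nothing to contrast, so report only what the source says.
        return f"{source} says {contextual}."
    return f"{source} says {contextual}. But I thought it was {parametric}."


print(render_reply("July 2022", "December 2022"))
```

The design choice here is to mention the model's own recollection only when it adds information, either because it disagrees with the source or because no source was given.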

While not perfect, it's major progress toward AI that collaborates in a transparent, helpful manner. The benefits for businesses are clear:

- Avoid frustrating users with incorrect responses

- Build trust by exposing limitations upfront

- Reduce risk from applying flawed knowledge

- Clarify when external data should override internal beliefs

By recognizing and sharing when its knowledge is incomplete, the AI becomes a more reliable and honest partner. This research brings us closer to truly cooperative human-AI interaction.

Sources:

DisentQA: Disentangling Parametric and Contextual Knowledge with Counterfactual Question Answering