The 2024 AI Index Report from the Stanford Institute for Human-Centered Artificial Intelligence (HAI) provides a comprehensive overview of the AI landscape. In a series of articles, we highlight key findings of the report, focusing on trends and insights that are particularly relevant for business leaders.

In this article, we'll explore the current state of responsible AI, examining the lack of standardized evaluations for large language models (LLMs), the discovery of complex vulnerabilities in these models, the growing concern among businesses about AI risks, and the challenges posed by LLMs outputting copyrighted material. We'll also discuss the low transparency scores of AI developers and the rising number of AI incidents. By understanding these critical issues, business leaders can make more informed decisions about the responsible development and deployment of AI systems.

Lack of Standardized Evaluations for LLM Responsibility

One of the most significant findings from the 2024 AI Index Report is the lack of robust, standardized evaluations of LLM responsibility. New analysis reveals that leading AI developers, including OpenAI, Google, and Anthropic, primarily test their models against different responsible AI benchmarks. Because each developer reports results on a different set of benchmarks, it is hard to systematically compare the risks and limitations of top AI models, and harder still for businesses to make informed decisions when choosing AI solutions. Improving responsible AI reporting will require consensus on which benchmarks model developers should consistently test against.

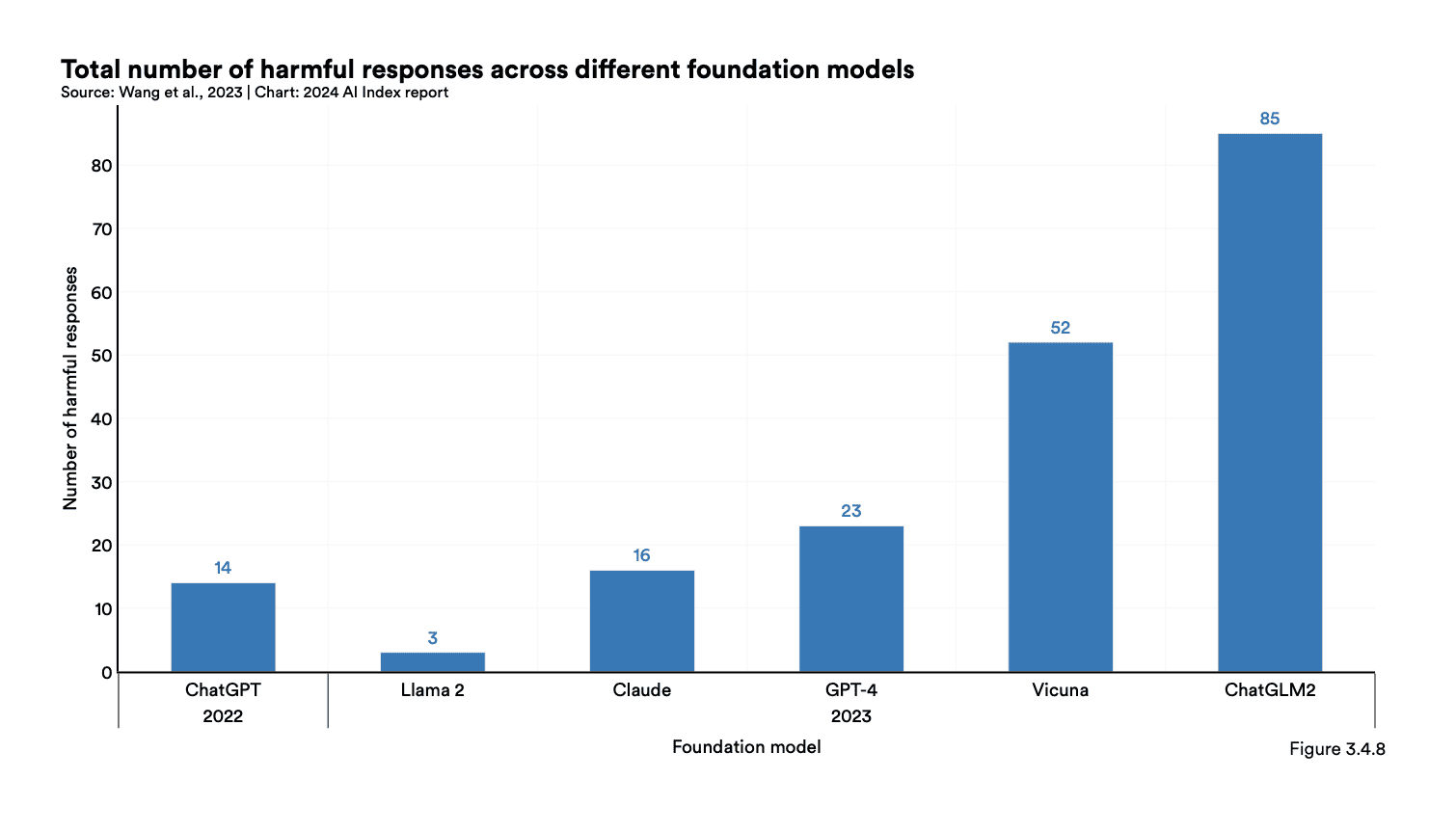

Complex Vulnerabilities Discovered in LLMs

Researchers have uncovered increasingly complex vulnerabilities in LLMs over the past year. While previous efforts to "red team" AI models focused on testing adversarial prompts that intuitively made sense to humans, recent studies have found less obvious strategies to elicit harmful behavior from LLMs. For example, asking models to infinitely repeat random words can lead to the inadvertent revelation of sensitive personal information from training datasets. This finding highlights the need for businesses to be aware of potential risks associated with LLMs and to implement appropriate safeguards and monitoring mechanisms.

AI Risks Concern Businesses Globally

A global survey on responsible AI highlights that companies' top AI-related concerns include privacy, security, and reliability. The survey shows that while organizations are beginning to take steps to mitigate these risks, most have mitigated only a portion of them so far. For business leaders, this underscores the importance of prioritizing responsible AI practices and investing in comprehensive risk mitigation strategies. By proactively addressing AI risks, businesses can build trust with their stakeholders and support the long-term success of their AI initiatives.

LLMs Can Output Copyrighted Material

Multiple researchers have demonstrated that the generative outputs of popular LLMs may contain copyrighted material, such as excerpts from The New York Times or scenes from movies. This raises significant legal questions about whether such output constitutes copyright violations. For businesses looking to leverage LLMs for content generation or other applications, it is essential to be aware of these potential legal risks and to implement appropriate monitoring and filtering mechanisms to prevent the unauthorized use of copyrighted material.
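To make the idea of a filtering mechanism more concrete, here is a minimal sketch of one possible approach: flagging model output that shares a large fraction of its word sequences with a known protected text. This is an illustration under stated assumptions, not a method described in the report. The function names, the 5-word window, and the 0.5 threshold are all hypothetical choices, and a production system would need legal review and far more robust matching.

```python
import re


def word_ngrams(text, n):
    """Return the set of lowercase word n-grams in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_ratio(output, reference, n=5):
    """Fraction of the output's n-grams that also appear in the reference."""
    output_ngrams = word_ngrams(output, n)
    if not output_ngrams:
        # Output is shorter than n words, so there is nothing to compare.
        return 0.0
    reference_ngrams = word_ngrams(reference, n)
    return len(output_ngrams & reference_ngrams) / len(output_ngrams)


def flag_output(output, references, n=5, threshold=0.5):
    """Flag output if it overlaps heavily with any reference text.

    The threshold is an illustrative assumption, not a legal standard.
    """
    return any(overlap_ratio(output, ref, n) >= threshold for ref in references)
```

A check like this only catches near-verbatim reproduction of texts the business already holds for comparison. It says nothing about paraphrased content or about whether a given overlap is legally significant.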

Low Transparency Scores for AI Developers

The newly introduced Foundation Model Transparency Index reveals that AI developers generally lack transparency, particularly regarding the disclosure of training data and methodologies. This lack of openness hinders efforts to further understand the robustness and safety of AI systems. For businesses, this means that they may not have access to all the information they need to fully assess the risks and limitations of the AI solutions they are considering. To make informed decisions, business leaders should demand greater transparency from AI developers and prioritize solutions that provide comprehensive documentation and disclosure.

Rising Number of AI Incidents

According to the AI Incident Database, which tracks incidents related to the misuse of AI, there were 123 reported incidents in 2023, a 32.3% increase from 2022. Since 2013, the number of AI incidents has grown more than twentyfold. One notable example is the set of AI-generated, sexually explicit deepfakes of Taylor Swift that were widely shared online. For businesses, this trend underscores the importance of implementing robust AI governance frameworks and monitoring systems to detect and mitigate potential misuse of their AI systems. By staying vigilant and responsive to emerging AI risks, businesses can protect their reputation and maintain the trust of their customers and stakeholders.
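As a quick sanity check on these figures, the short calculation below works backward from the 2023 count and the reported growth rate to the implied 2022 count. The 2022 figure it produces is an inference from the two numbers quoted above, not a number stated in this article, and the variable names are our own.

```python
incidents_2023 = 123      # reported incidents in 2023 (from the text above)
reported_growth = 0.323   # 32.3% year-over-year increase (from the text above)

# Work backward: count_2023 = count_2022 * (1 + growth)
implied_2022 = incidents_2023 / (1 + reported_growth)
implied_2022_rounded = round(implied_2022)

# Recompute the growth rate from the rounded count to confirm consistency.
recomputed_growth_pct = round(
    (incidents_2023 - implied_2022_rounded) / implied_2022_rounded * 100, 1
)

print(f"Implied 2022 incidents: about {implied_2022_rounded}")
print(f"Recomputed growth: {recomputed_growth_pct}%")
```

The implied 2022 count is roughly 93 incidents, and recomputing the growth from that rounded figure gives 32.3%, which matches the reported increase.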

Conclusion

The 2024 AI Index Report highlights the urgent need for businesses to prioritize responsible AI practices as they increasingly adopt and deploy AI systems. From the lack of standardized evaluations for LLM responsibility to the discovery of complex vulnerabilities and the rising number of AI incidents, the report underscores the importance of proactively addressing AI risks and challenges.

By demanding greater transparency from AI developers, investing in comprehensive risk mitigation strategies, and implementing robust AI governance frameworks, business leaders can ensure the responsible development and deployment of AI systems. Only by prioritizing responsible AI practices can businesses fully realize the benefits of this transformative technology while protecting the interests of their stakeholders and society at large.