Blog tagged as Mixture of Experts

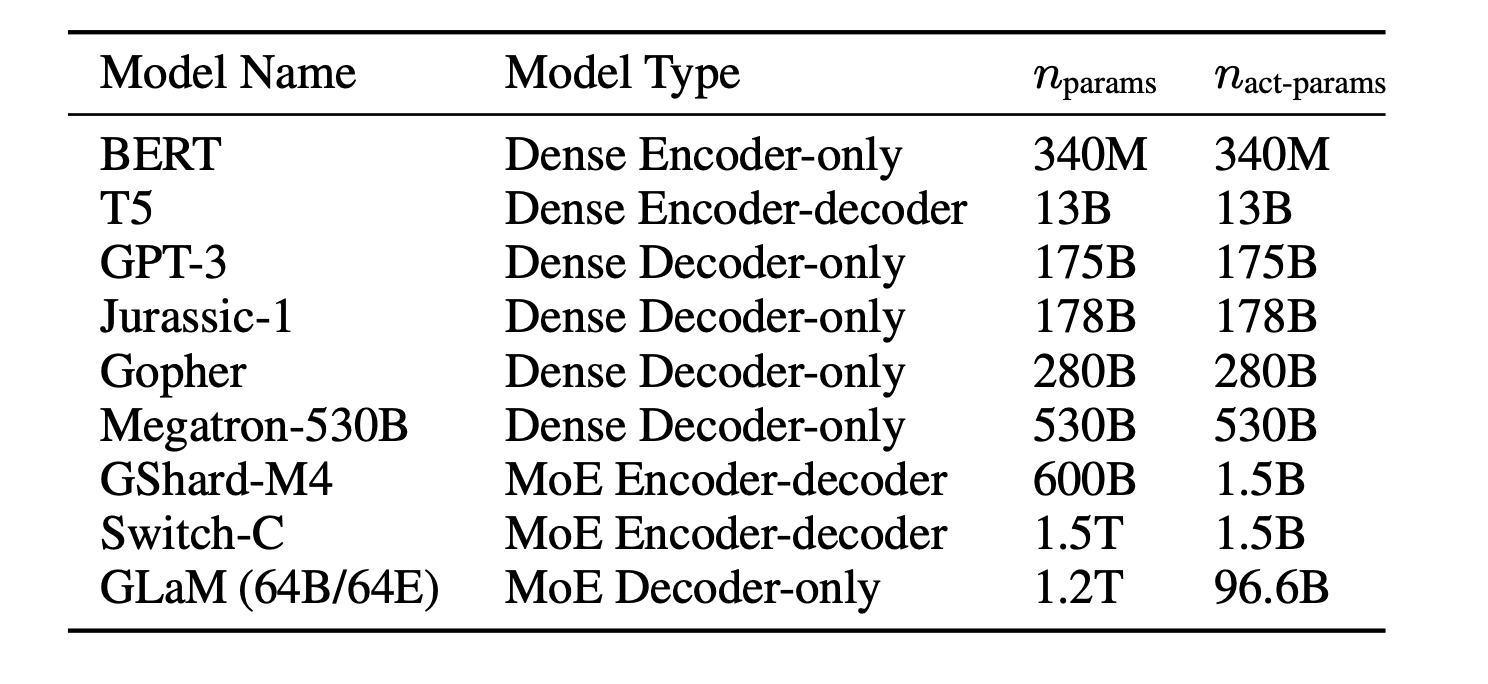

Rumors are swirling that GPT-4 may use an advanced technique called Mixture of Experts (MoE) to reach more than 1 trillion parameters. This offers an opportunity to demystify MoE.

Ines Almeida

17.08.23 02:25 PM