Artificial intelligence (AI) has rapidly permeated nearly every sector of society, attracting immense commercial investment and public interest. AI systems like chatbots, facial recognition, and recommendation engines mediate how we communicate, access information, use transportation, and make purchases. The most advanced form of AI today is the foundation model: a general-purpose system trained on massive datasets that can be adapted to many applications. Foundation models include systems like GPT-4 for text, Midjourney for images, and Codex for code.

The rise of foundation models has raised pressing questions about their societal impact and how to ensure this technology advances the public interest. Specifically, a lack of transparency about how foundation models are built and used makes it difficult to scrutinize them or to propose interventions when issues arise. This trend parallels the trajectory of other digital technologies like social media. When new technologies emerge, early optimism and rapid adoption precede growing awareness of the harms they enable. Only after the fact does society often realize that the technology's builders were not transparent.

To avoid repeating past mistakes, many experts, such as Prof. Emily M. Bender and members of the DAIR Institute, have called for greater transparency in AI. However, what constitutes meaningful transparency for foundation models remains unclear. To clarify how transparent today's developers are, researchers at Stanford University's Institute for Human-Centered Artificial Intelligence developed the Foundation Model Transparency Index. This multi-month research initiative systematically assessed and scored leading foundation model developers on 100 concrete indicators of transparency.

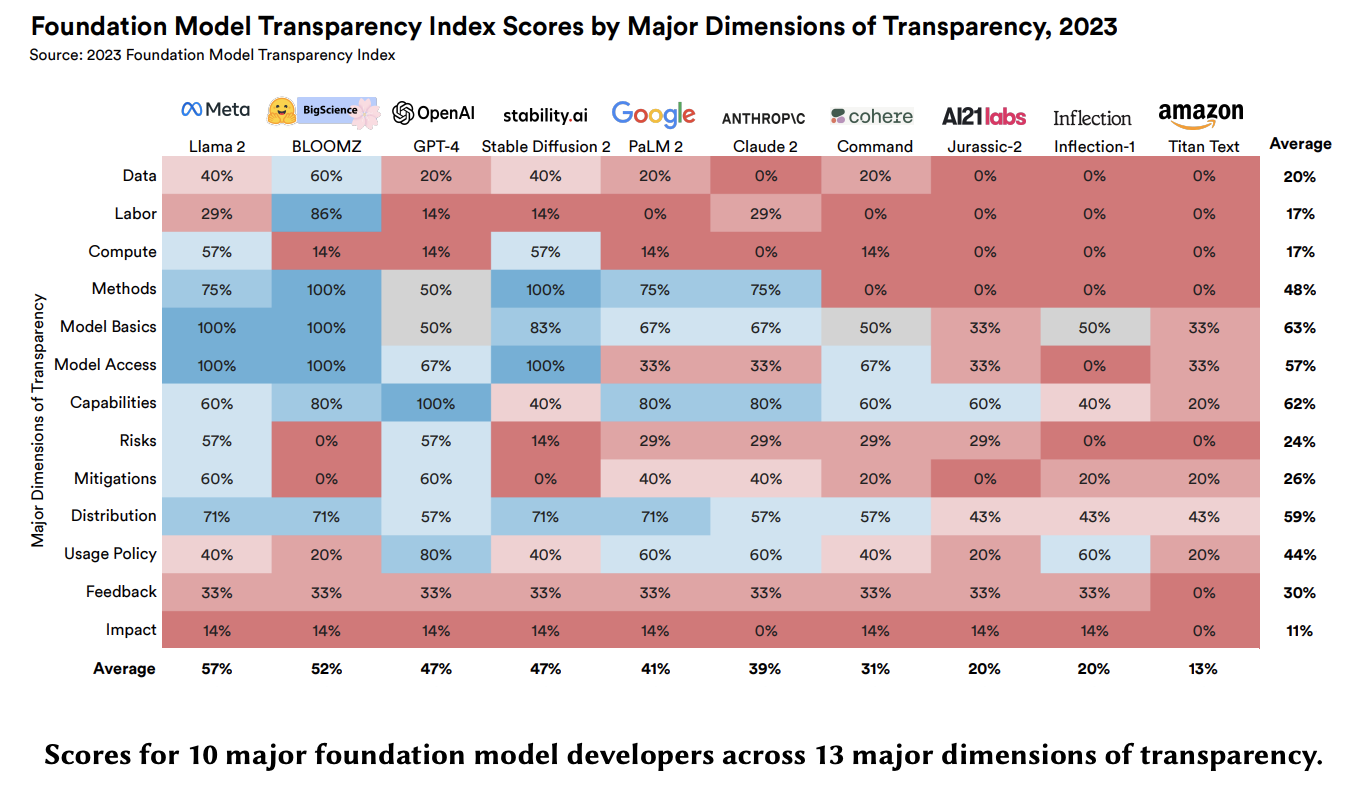

The results reveal a fundamental lack of transparency across the AI industry. The highest scoring developer in the index scored just 54 out of 100 possible points. On average, developers scored only 37 out of 100. This concerning opacity spans critical issues like the data used to train models, the labor practices involved, the risks posed by systems, and their real-world usage. The index provides an unprecedented, empirical view into the black box of AI development while establishing exactly where greater transparency is most needed.

Here, we explore the goals, approach, and key findings of this pioneering research. Our aim is to clarify the push for transparency in AI and what it might achieve for society.

The Case for Transparency in AI

Transparency is a vital prerequisite for accountability, robust science, continuous innovation, and effective governance of digital technologies. Without transparency, the public cannot properly understand technologies mediating essential aspects of life nor propose interventions when harms become apparent. This lesson has become abundantly clear from prior technologies where opacity enabled wide-ranging harms to emerge before society could respond.

Social media serves as a cautionary tale. For years, social media companies obscured how their platforms were built, how content was moderated, and how user data was handled. The resulting opacity let harms proliferate, ranging from disinformation influencing elections to illegal data sharing enabling voter manipulation. By the time society grasped the repercussions, immense damage was already done.

Today, foundation models appear on track to repeat this trajectory. As capabilities have rapidly advanced from GPT-2 to GPT-3 and now GPT-4, transparency about these systems has dramatically decreased. For example, OpenAI's GPT-4 technical report states that it deliberately contains no further details about the model's architecture (including model size), hardware, training compute, dataset construction, or training method. This decline parallels social media's path towards opacity as profits and user numbers grew. Without transparency, foundation models are likely to produce unintended consequences that cannot be addressed until significant harm has occurred.

Calls for transparency in AI are mounting from experts across civil society, academia, industry, and government:

- 80+ civil society groups urged developers to release model cards explaining characteristics of AI systems.

- 400+ researchers signed a pledge to document details about datasets, models, and experiments.

- The EU AI Act requires high-risk AI systems to be transparent and provide information to authorities.

- The White House secured commitments from companies to share more information about development and risks.

However, the AI field lacks clarity on what transparency entails and on which developers are transparent about which issues. The Foundation Model Transparency Index aims to fill this knowledge gap with a rigorous, empirical assessment.

Measuring Transparency in Foundation Models

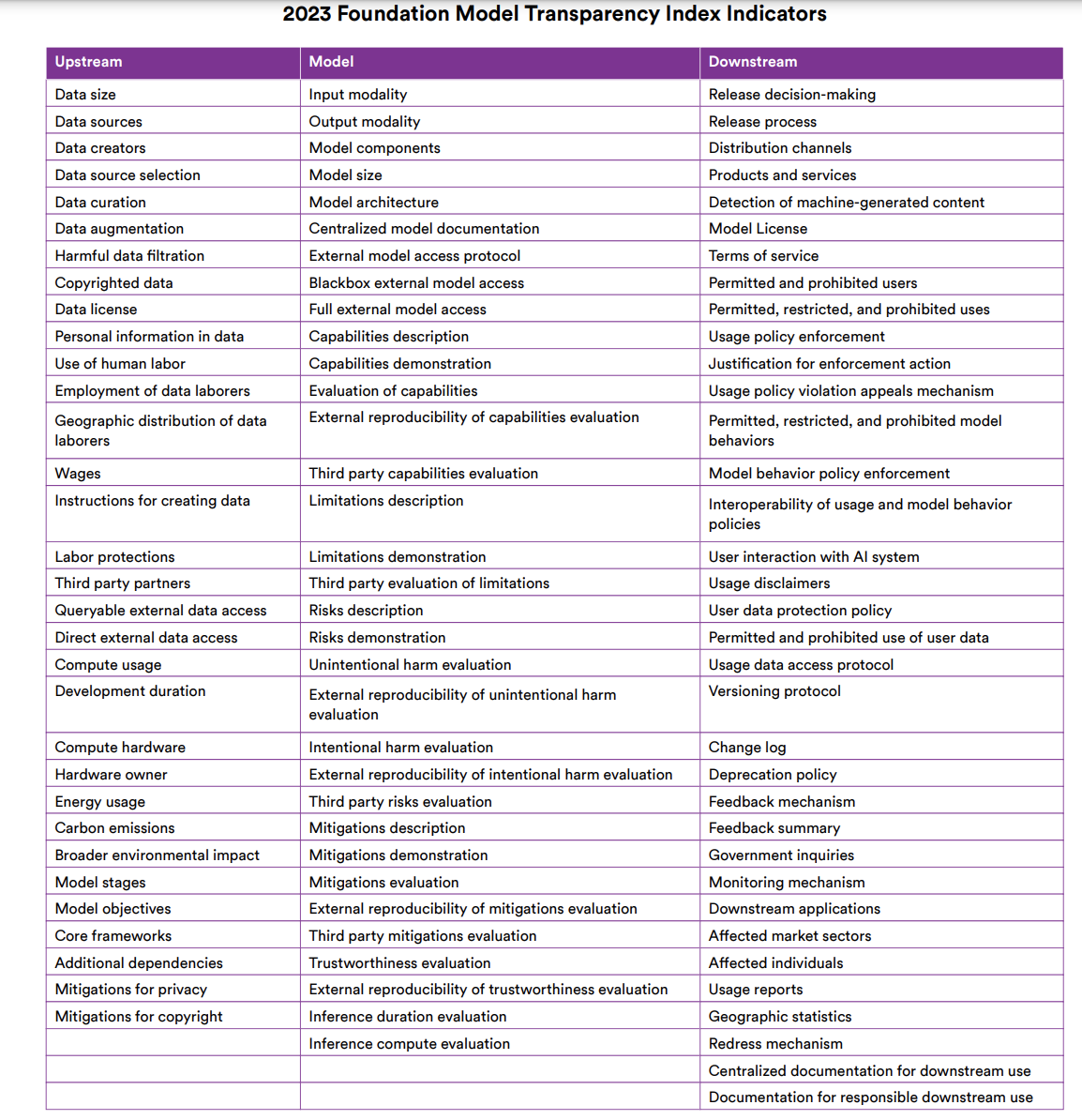

The Foundation Model Transparency Index began by articulating 100 specific indicators that comprehensively characterize transparency for developers of foundation models. Indicators span the full supply chain of foundation models including:

- Upstream - Data, compute, code, labor used to build models

- Model - Technical properties, capabilities, risks, limitations

- Downstream - Distribution, policies, societal impact of models

This multi-dimensional view of transparency builds on emerging best practices like model cards and supply chain tracing for AI. Researchers engaged a range of experts to refine the set of 100 indicators, which are tailored to foundation models and emphasize issues like labor, bias, and environmental impact.

The index then scored 10 major foundation model developers against these 100 indicators based solely on publicly available information. The 10 developers - spanning startups, Big Tech firms, and open collaborations - included leaders across the foundation model ecosystem:

- OpenAI (GPT-4)

- Anthropic (Claude 2)

- Google (PaLM 2)

- Meta (Llama 2)

- Amazon (Titan Text)

- Inflection (Inflection-1)

- Cohere (Command)

- AI21 Labs (Jurassic-2)

- Hugging Face (BLOOMZ)

- Stability AI (Stable Diffusion 2)

Scoring adhered to a rigorous process. Researchers independently assessed each of the 1,000 (developer, indicator) pairs, assigning a 1 if the developer was transparent on the indicator and 0 otherwise based on clear criteria. Disagreements were resolved through discussion and examination of sources. Developers were allowed to contest scores prior to publication. This scoring yielded a 0-100 overall transparency score for each developer along with granular subdomain scores.
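The aggregation step described above can be sketched in a few lines. This is a minimal illustration, not the Index's actual code: the indicator names and subdomain labels below are hypothetical placeholders (the real Index defines 100 indicators), and it assumes each mark is a binary 0/1 as described, so the overall score is simply the count of satisfied indicators and a subdomain score is the percentage satisfied within that subdomain.

```python
from collections import defaultdict

# Hypothetical indicator -> subdomain mapping, for illustration only.
# The real Index uses 100 indicators across upstream, model, and downstream domains.
INDICATORS = {
    "data_sources": "data",
    "data_licensing": "data",
    "worker_wages": "labor",
    "compute_usage": "compute",
    "energy_usage": "compute",
    "usage_statistics": "impact",
}


def _check_binary(marks: dict[str, int]) -> None:
    """Each (developer, indicator) mark must be 1 (transparent) or 0 (not)."""
    for name, value in marks.items():
        if value not in (0, 1):
            raise ValueError(f"mark for {name!r} must be 0 or 1, got {value}")


def overall_score(marks: dict[str, int]) -> int:
    """One point per satisfied indicator; with 100 indicators this is 0-100."""
    _check_binary(marks)
    return sum(marks.get(name, 0) for name in INDICATORS)


def subdomain_scores(marks: dict[str, int]) -> dict[str, float]:
    """Percentage of indicators satisfied within each subdomain."""
    _check_binary(marks)
    earned: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for name, subdomain in INDICATORS.items():
        total[subdomain] += 1
        earned[subdomain] += marks.get(name, 0)
    return {s: 100 * earned[s] / total[s] for s in total}


def average_subdomain(all_marks: list[dict[str, int]], subdomain: str) -> float:
    """Mean subdomain percentage across developers (e.g. an average upstream data score)."""
    scores = [subdomain_scores(m)[subdomain] for m in all_marks]
    return sum(scores) / len(scores)


# Two hypothetical developers; missing indicators count as 0.
dev_a = {"data_sources": 1, "compute_usage": 1, "energy_usage": 1}
dev_b = {"data_sources": 1, "data_licensing": 1}
print(overall_score(dev_a), overall_score(dev_b))
print(average_subdomain([dev_a, dev_b], "data"))
```

Treating unlisted indicators as 0 mirrors the rule that a developer earns a point only when the information is publicly disclosed.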

Key Findings on the State of Transparency in AI

The Foundation Model Transparency Index yields unprecedented empirical insights into transparency across the foundation model ecosystem. Here, we summarize 10 key research findings that paint a concerning picture of pervasive opacity among organizations building the most impactful AI today:

- No developer comes close to adequate transparency. The highest score is 54/100, with an average score of just 37/100. Even top-scoring Meta leaves much undisclosed. This fundamental opacity spans critical issues like labor, bias, and environmental impact.

- Upstream resources used to build models are a massive blind spot. Scores on upstream data, compute, and labor average just 20%, 17%, and 17% respectively. Many details, like data provenance, worker wages, and carbon emissions, remain obscured.

- Downstream societal impacts are almost entirely opaque. Developers score only 11% on downstream impact issues like usage statistics, affected communities, and redress mechanisms. There is virtually no transparency into how models affect society once deployed.

- Open model developers are generally more transparent. Meta (54) and Hugging Face (53), which both release model weights, outscore OpenAI (48), and open developer Stability AI (47) follows close behind. Closed access tends to go hand in hand with opacity.

- Capabilities transparency doesn’t extend to risks. Developers score 62% on capabilities and 60% on limitations, but only 24% on risks and 26% on model weaknesses. One-sided transparency invites misplaced trust.

- Labor practices are obscured across the board. Just one developer discloses any information about labor conditions and wages. This blocks scrutiny of the exploitative outsourcing that many experts have raised concerns about.

- Data transparency narrowly focuses on augmentation and curation. Data provenance, creators, copyright, and licensing are widely opaque. This prevents scrutiny of unauthorized data practices, which are the subject of ongoing lawsuits.

- Compute and environmental impact are concealed. Only two developers report emissions and energy use. Training a foundation model can produce carbon emissions rivaling those of a car over its lifetime, yet most developers do not disclose these figures.

- Release decisions happen in a black box. Nearly all developers give no information about how and why they decide which models to release. Release strategy plays an outsized role in a model's impact, yet the decision processes remain opaque.

- Transparency initiatives have not penetrated industry. Despite documentation efforts by researchers and promises from developers, transparency on critical issues like bias testing and model performance remains scarce.

In summary, the index paints a concerning picture of an ecosystem trending towards opacity as risks rise. It also clarifies where transparency is most lacking across areas like labor, environmental impact, release processes, and downstream usage. These empirical findings make evident the need for transparency while focusing attention on the most critical blind spots.

Paths Forward to Increase Transparency

The Foundation Model Transparency Index establishes an unprecedented understanding of where industry practices currently fall short on transparency while spotlighting areas for urgent improvement. But how exactly can progress be made to rectify this fundamental opacity? We outline promising pathways forward identified in the report.

1. Industry Self-Regulation

The most direct path is for developers to voluntarily increase transparency. The index gives developers clear guidance on where they lack transparency relative to competitors. It also demonstrates that transparency is feasible today on most indicators, since for most of them at least one developer already earns the point. Developers should:

- Proactively disclose more information about existing models that power live systems affecting millions.

- Make new models substantially more transparent about upstream sources, technical properties, and downstream impacts.

- Draw on practices from peers who are transparent on specific issues, following emerging best practices.

- Work closely with deployers of their models (e.g. API providers) to gather and share aggregated usage statistics.

2. Transparency Requirements

Governments are actively considering requirements for transparency in AI. The index empirically grounds policymaking, clarifying where developers are opaque and where interventions are most needed. Policymakers should:

- Make transparency a top priority. Transparency unlocks public accountability and supports effective regulation.

- Enforce existing laws (e.g., sectoral regulations) that require companies to share information. Require transparency, not just promises.

- Use the Index to guide requirements for transparency with greater nuance. For instance, target areas like labor and data where opacity is extreme.

While requirements risk unintended consequences like stifling innovation, targeted transparency regulation can unlock public understanding and oversight.

3. Corporate and Consumer Pressure

Public pressure can drive change even absent regulation. The Index clarifies how developers compare and where consumers and corporations deploying models should push them for greater transparency.

- Corporations with purchasing power should require transparency provisions in contracts licensing models. Collectively, major cloud providers could exert influence on developers.

- The Index can become a basis for corporate responsibility ratings and consumer guides that pressure laggards. Public understanding and concern drive change.

4. Academic Engagement

Finally, the research community has an essential role to play through standards and strong norms around transparency. Researchers should:

- Adopt and refine emerging best practices like model cards to release details about training data, model development, and evaluation results.

- Extend initiatives like PapersWithCode that log experimental results to also capture details about datasets, compute, and other factors essential for reproducibility.

- Develop conference policies that require transparency as a prerequisite for publishing research on foundation models and other high-impact AI systems.

Taken together, these complementary approaches can make transparency a front-and-center priority for the field. But achieving meaningful transparency requires sustained effort from all stakeholders: developers, policymakers, researchers, corporations, and civil society. The Foundation Model Transparency Index aims to provide common ground for this collective push by empirically outlining the current state of affairs.

Measuring Progress Towards Transparent AI

The Foundation Model Transparency Index represents a critical first step, not the final word, on transparency in AI. The urgency of public calls for transparency demands that we move beyond principles to measurement. By quantifying the current state of transparency, the Index spotlights where society needs more information to properly understand AI systems mediating essential aspects of life.

Moving forward, the Index can fuel continued progress by benchmarking how transparency evolves across critical issues like labor practices, algorithmic bias, and environmental impact. The research team plans to regularly update the Index, scoring developers on expanded sets of indicators that capture emerging risks, capabilities, and best practices. With time, they hope transparency will become standard practice for developers building society-shaping technologies like foundation models.

In other words, the Index can help realize a long-term vision: an AI ecosystem that is consistently transparent about the technologies it creates and deploys. But achieving accountable AI guided by public oversight will require all of us—as consumers, citizens, experts, and institutions—to collectively demand meaningful transparency.

Humanity cannot afford to repeat the harms that opaque technologies have inflicted throughout history. Algorithmic systems will never be perfect, but transparency provides the essential ingredients for public awareness, scientific exchange, and equitable governance. We must continue pushing towards transparent AI that advances prosperity for all. The Foundation Model Transparency Index marks one milestone in what will surely be a long journey.

Note: Read a critique of this Index here.