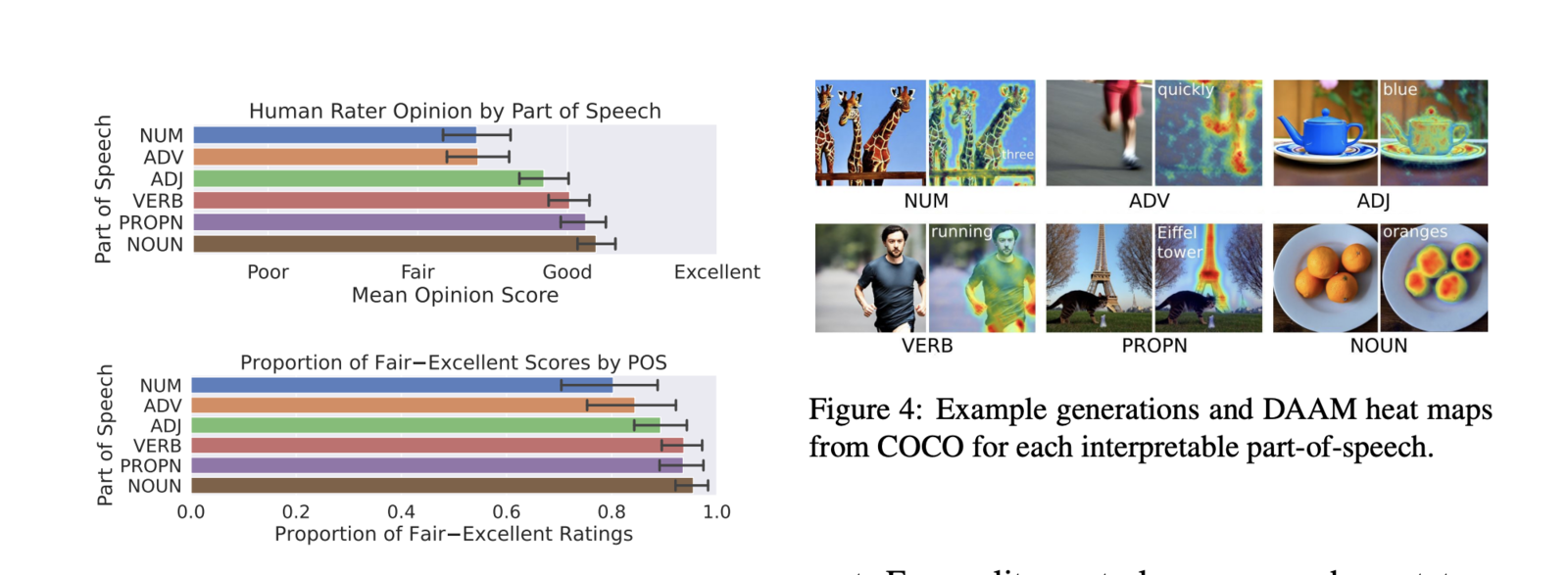

In a paper titled "What the DAAM: Interpreting Stable Diffusion Using Cross Attention", researchers propose a method called DAAM (Diffusion Attentive Attribution Maps) to analyze how words in a prompt influence different parts of the generated image.
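DAAM works roughly like this. It collects the cross-attention maps between prompt tokens and spatial positions inside the diffusion UNet. It upsamples each map to a common resolution and sums the maps over attention heads, layers, and denoising timesteps, which yields one heat map per word. The sketch below shows only that aggregation step. It assumes the attention maps have already been captured, each as an array of shape `(heads, h*w, num_tokens)` with a square spatial grid. The names `aggregate_heat_map` and `binarize`, the min-max normalization, and the threshold `tau=0.4` are illustrative choices, not the paper's code. The upsampling is nearest-neighbour rather than the bicubic interpolation used in DAAM.

```python
import numpy as np


def aggregate_heat_map(attn_maps, token_index, out_size=64):
    """Sum one token's cross-attention over heads, layers and timesteps.

    Hypothetical helper. attn_maps is a list of arrays shaped
    (heads, h*w, num_tokens), one per (layer, timestep), where h == w
    and out_size is a multiple of h.
    """
    heat = np.zeros((out_size, out_size))
    for attn in attn_maps:
        heads, hw, _ = attn.shape
        side = int(round(np.sqrt(hw)))
        assert side * side == hw and out_size % side == 0
        # Take this token's attention column and restore the spatial grid.
        token_maps = attn[:, :, token_index].reshape(heads, side, side)
        scale = out_size // side
        # Nearest-neighbour upsampling (a simplification of DAAM's bicubic).
        up = token_maps.repeat(scale, axis=1).repeat(scale, axis=2)
        heat += up.sum(axis=0)  # sum over heads
    # Min-max normalise to [0, 1] for display (an assumption of this sketch).
    heat -= heat.min()
    if heat.max() > 0:
        heat /= heat.max()
    return heat


def binarize(heat, tau=0.4):
    """Threshold a normalised heat map into a mask (tau is illustrative)."""
    return heat >= tau


# Usage with random stand-in attention maps at two UNet resolutions.
rng = np.random.default_rng(0)
maps = [rng.random((8, 16 * 16, 77)), rng.random((8, 32 * 32, 77))]
heat = aggregate_heat_map(maps, token_index=5)
print(heat.shape)  # (64, 64)
```

Summing rather than averaging keeps the relative contribution of every head and layer. Maps from coarse UNet layers are stretched to the same grid as fine ones, so all layers can be compared pixel by pixel.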