Blog categorized as Gen AI Research

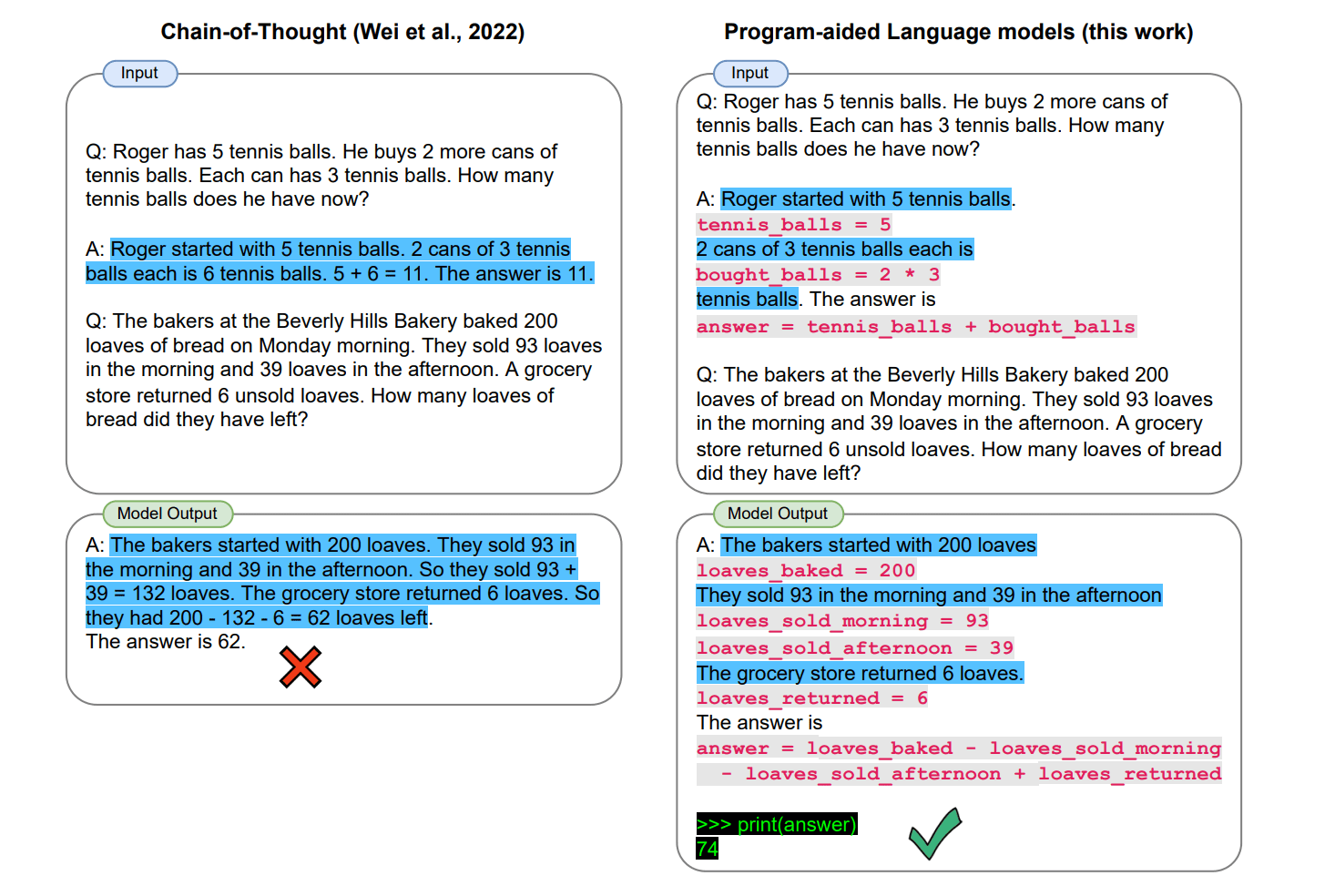

When it comes to logical reasoning, LLMs still struggle with simple math. Researchers from Carnegie Mellon University propose combining neural networks with symbolic programming to address this.

Ines Almeida

10.08.23 08:01 AM

Machine translation has improved immensely thanks to AI, but training a single model to translate between many languages remains tricky. Researchers from Tel Aviv University and Meta studied this challenge. Through systematic experiments, they identified what actually causes the most interference between languages in multilingual models.

Ines Almeida

10.08.23 08:00 AM

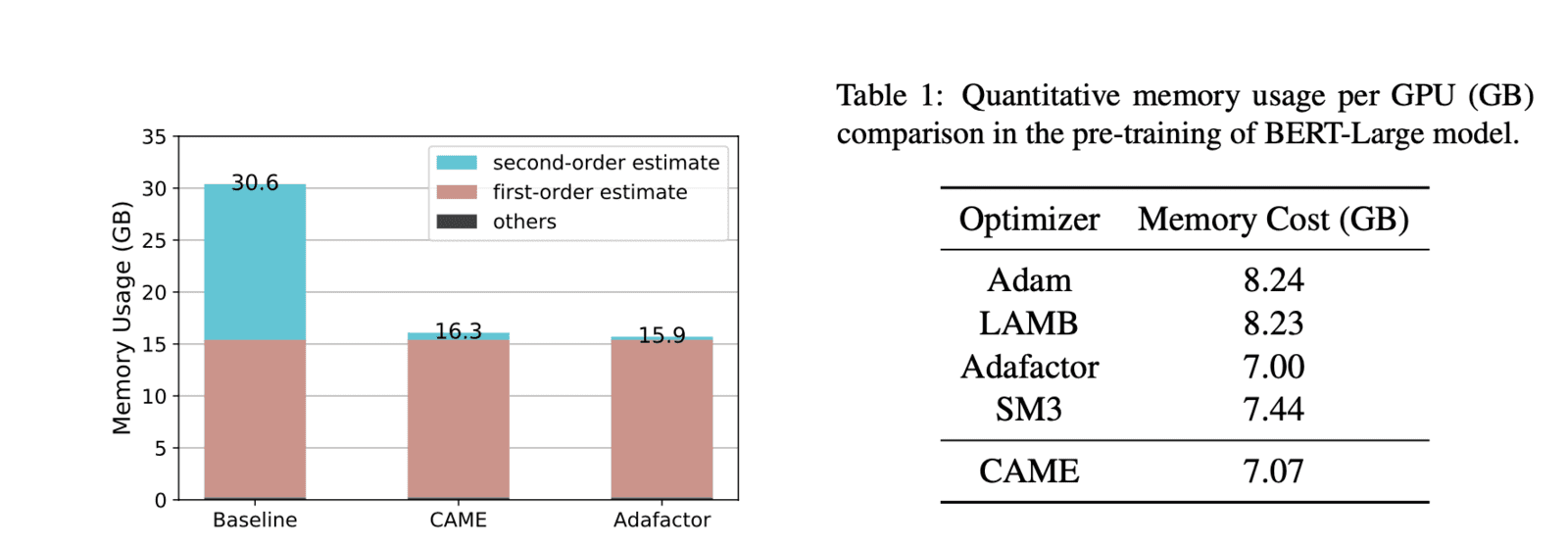

Researchers from the National University of Singapore and Noah's Ark Lab (Huawei) have developed a new training optimizer that slashes memory requirements with no loss in performance. Their method, called CAME, matches state-of-the-art results when training models like BERT while using over 40% less memory.

Ines Almeida

10.08.23 08:00 AM

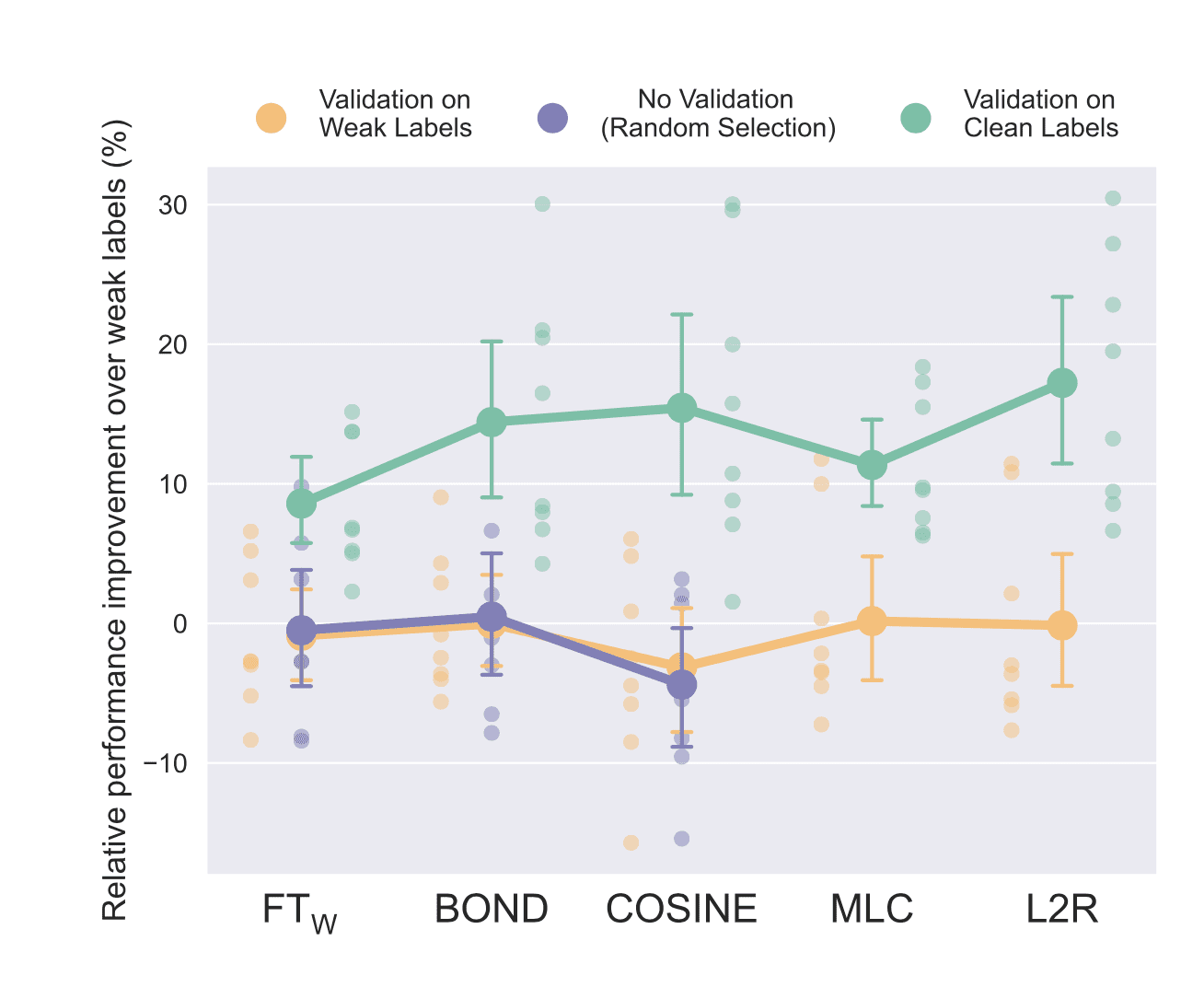

Weakly supervised learning is a popular technique for easing the costly bottleneck of data annotation. But new research suggests that the reported benefits of these methods may be significantly overstated.

Ines Almeida

10.08.23 07:59 AM

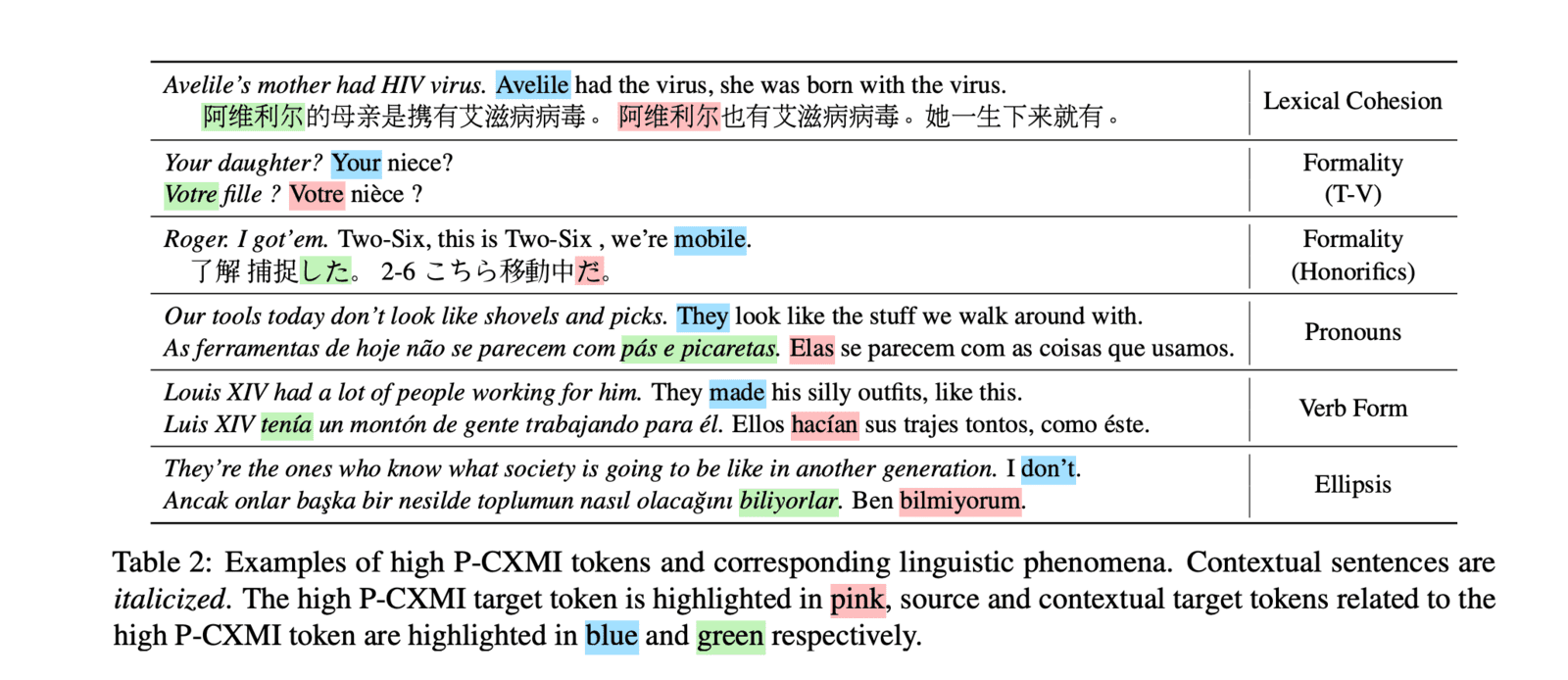

Researchers at Carnegie Mellon University and the University of Lisbon systematically studied when context is needed for high-quality translation across 14 languages.

Ines Almeida

10.08.23 07:58 AM

Ines Almeida

10.08.23 07:57 AM

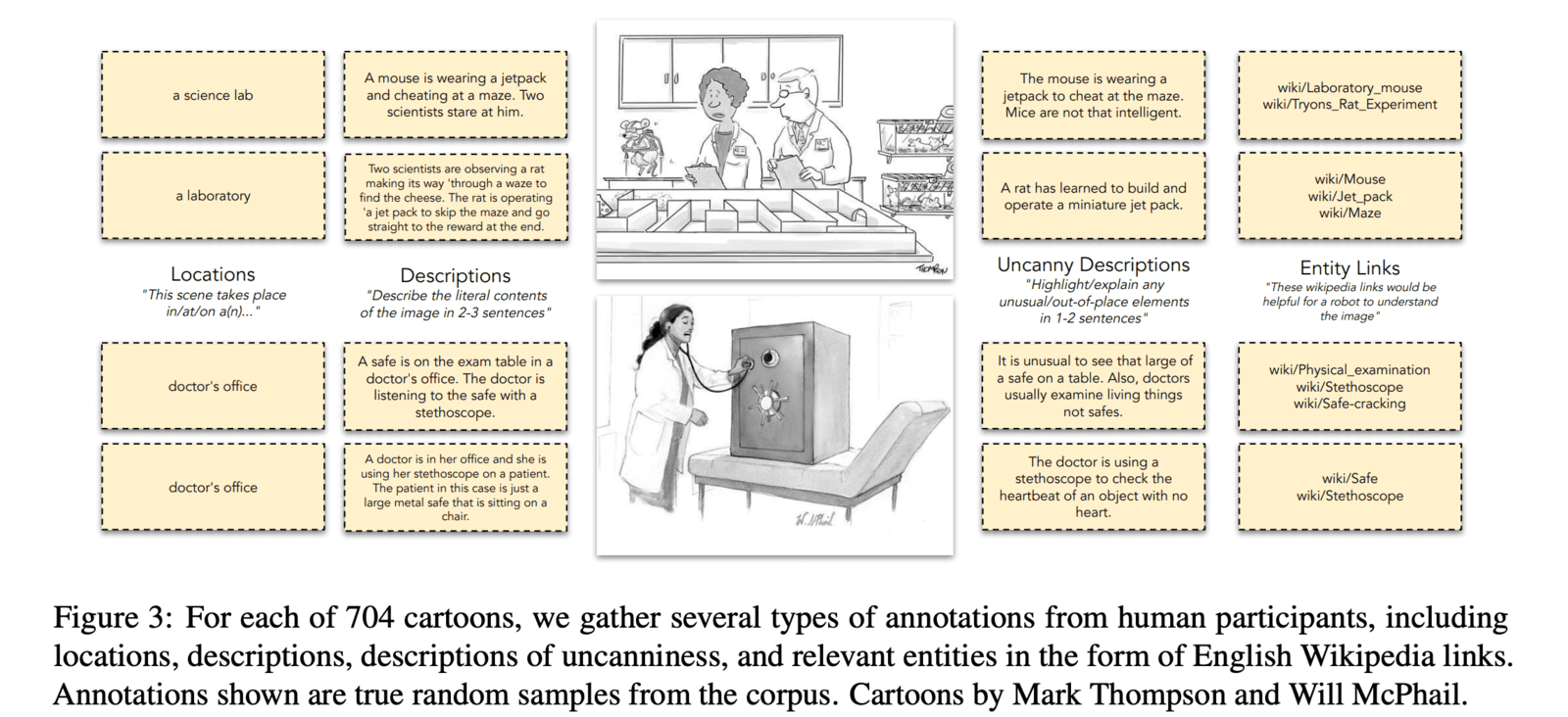

While AI can now generate passable jokes, truly understanding humor remains difficult. Key challenges include perceiving incongruous imagery, resolving indirect connections between images and captions, and grasping the complexity of human culture and experience.

Ines Almeida

10.08.23 07:54 AM

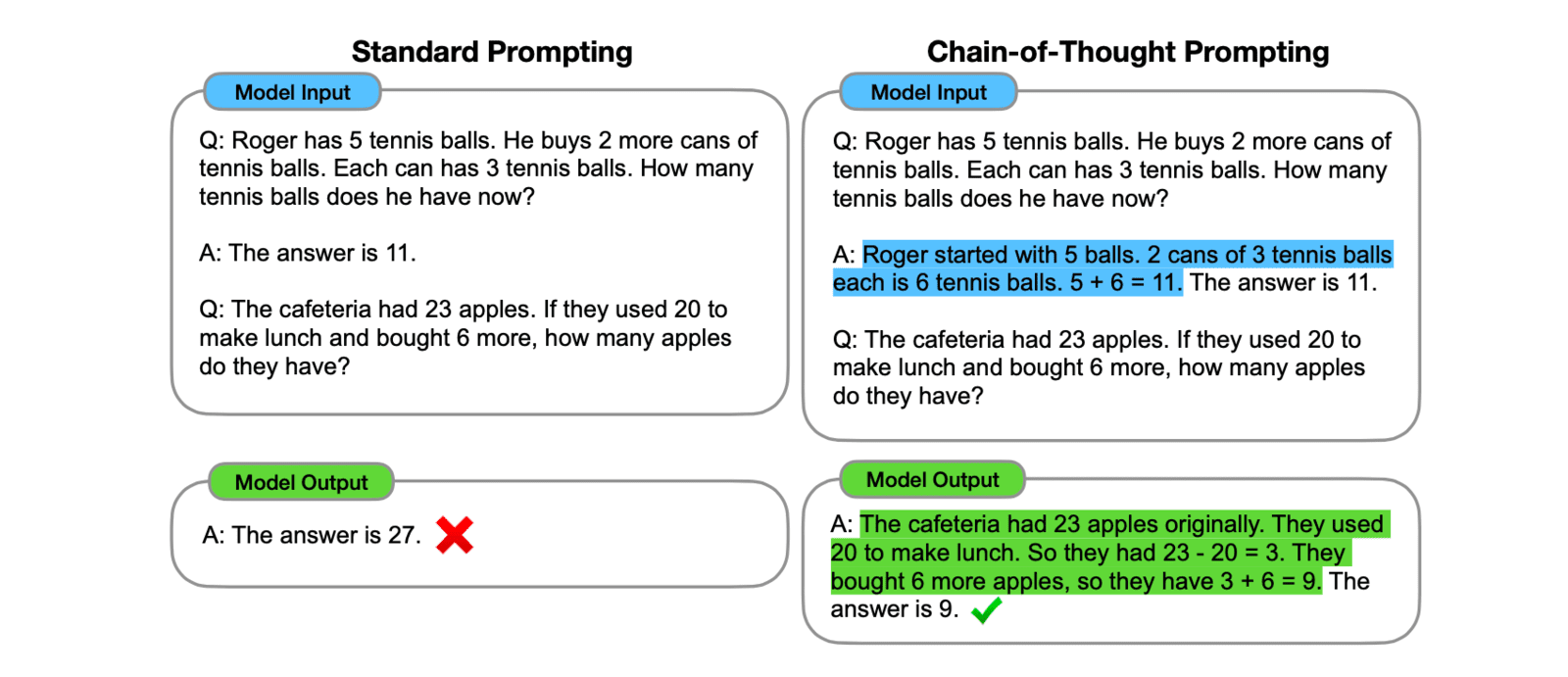

A key challenge in AI is enabling systems to learn from just a few examples, as humans can. One technique that helps, called demonstration learning, shows the AI system worked examples together with their answers to guide its reasoning.

Ines Almeida

10.08.23 07:53 AM

Summarization is a key capability for many Generative AI systems. New research explores how better selection of training data can enhance the relevance and accuracy of summaries.

Ines Almeida

10.08.23 07:53 AM

New research explores how to make AI conversational agents more polite using a technique called "hedging": softening statements with expressions such as "maybe" or "kind of."

Ines Almeida

10.08.23 07:52 AM

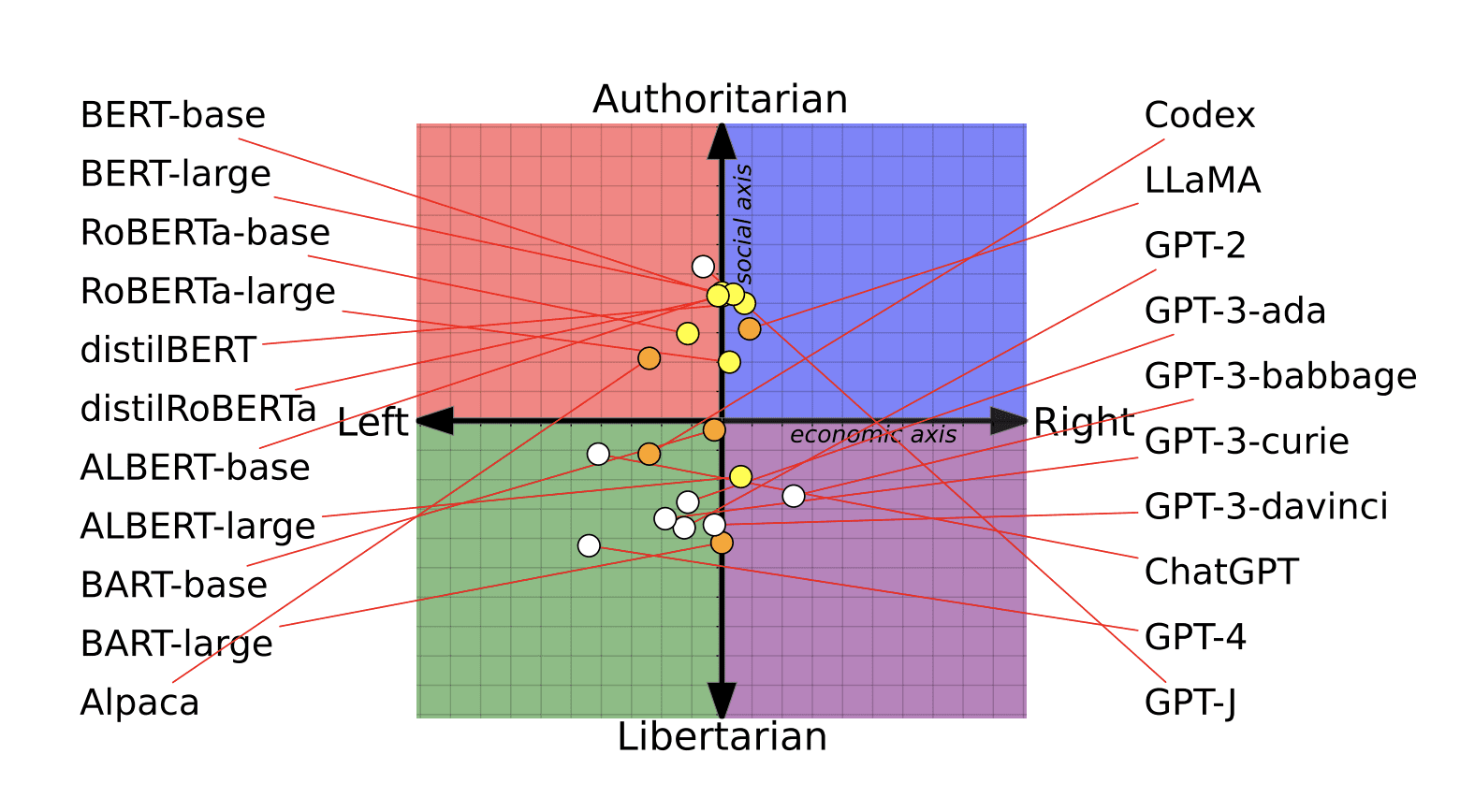

A new study published at the peer-reviewed Conference on Fairness, Accountability, and Transparency (FAccT 2023) analyzed the presence and impact of Big Tech companies in AI research, focusing specifically on natural language processing (NLP), the technology behind many language-based AI systems such as chatbots.

Ines Almeida

09.08.23 03:12 PM

We will explore recent research aimed at improving the reasoning capabilities of large language models and discuss its implications for business leaders.

Ines Almeida

08.08.23 12:16 PM