Blog tagged as Gen AI Research

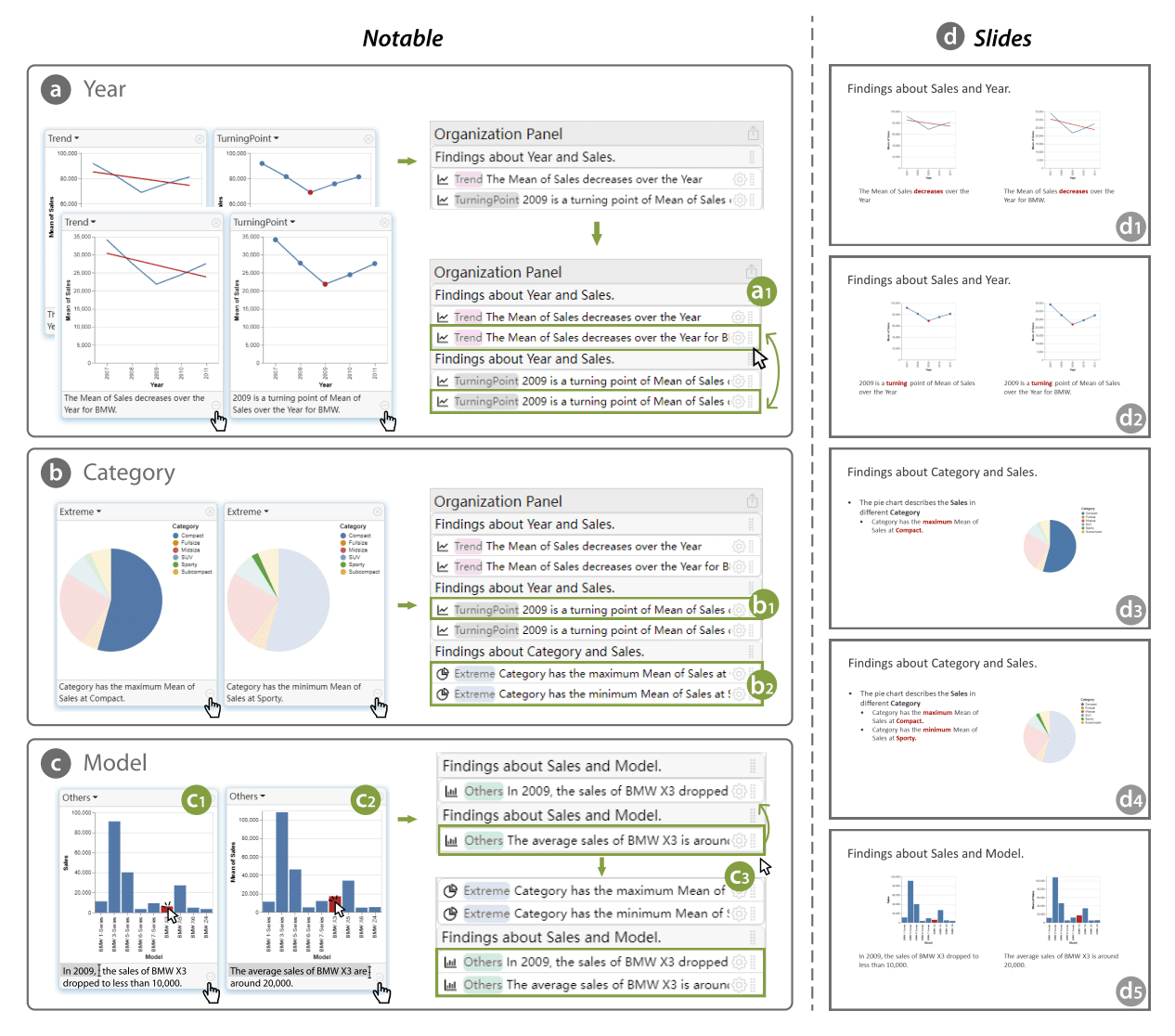

Data analysis often involves exploring data to unearth insights, then crafting stories to communicate those findings. But converting discoveries into coherent narratives poses challenges. Researchers have developed an AI assistant called Notable that streamlines data storytelling.

Ines Almeida

10.08.23 08:04 AM - Comment(s)

Back in 2018, researchers from Facebook AI developed a new method to improve story generation through hierarchical modeling. Their approach mimics how people plan out narratives.

Ines Almeida

10.08.23 08:03 AM - Comment(s)

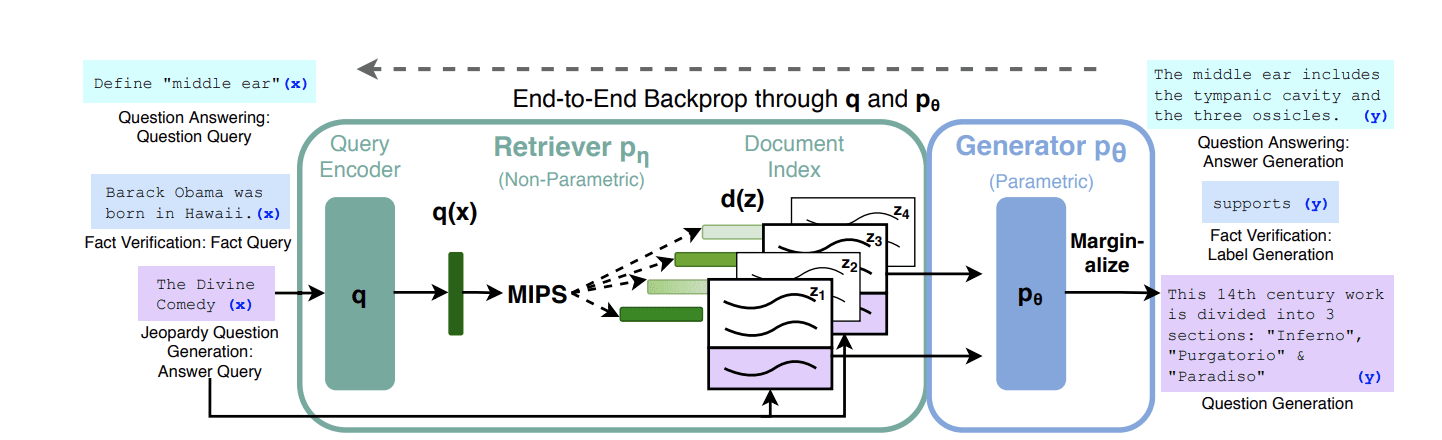

Retrieval-augmented generation (RAG) systems learn to retrieve and incorporate external knowledge when generating text.

Ines Almeida

10.08.23 08:03 AM - Comment(s)

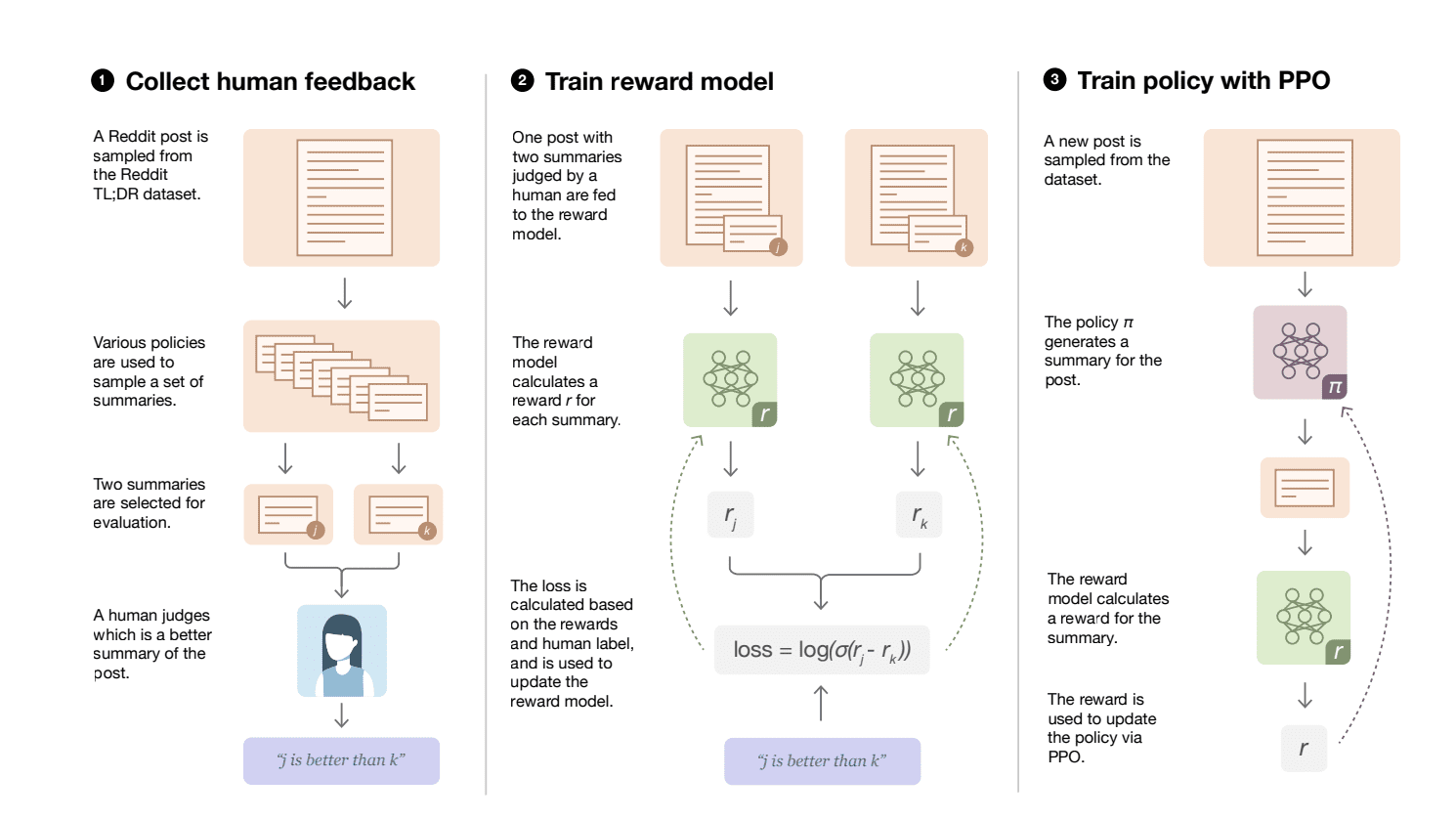

The researchers found that optimizing the AI for direct human preferences significantly boosted performance compared to just training it to mimic reference summaries.

Ines Almeida

10.08.23 08:02 AM - Comment(s)

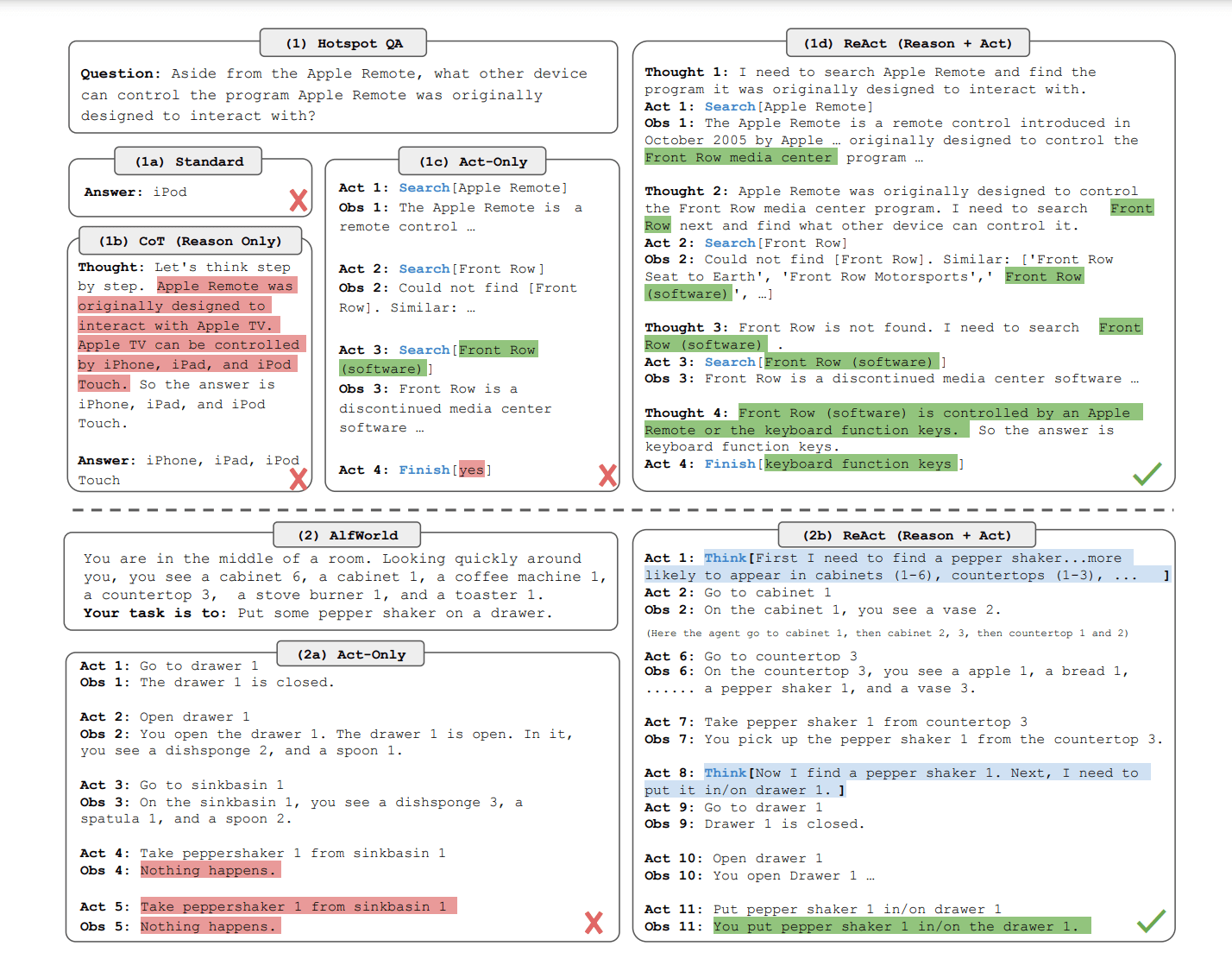

LLMs struggle with logical reasoning and decision-making when tackling complex real-world problems. Researchers propose an approach called ReAct that interleaves reasoning steps with actions to address this.

Ines Almeida

10.08.23 08:02 AM - Comment(s)

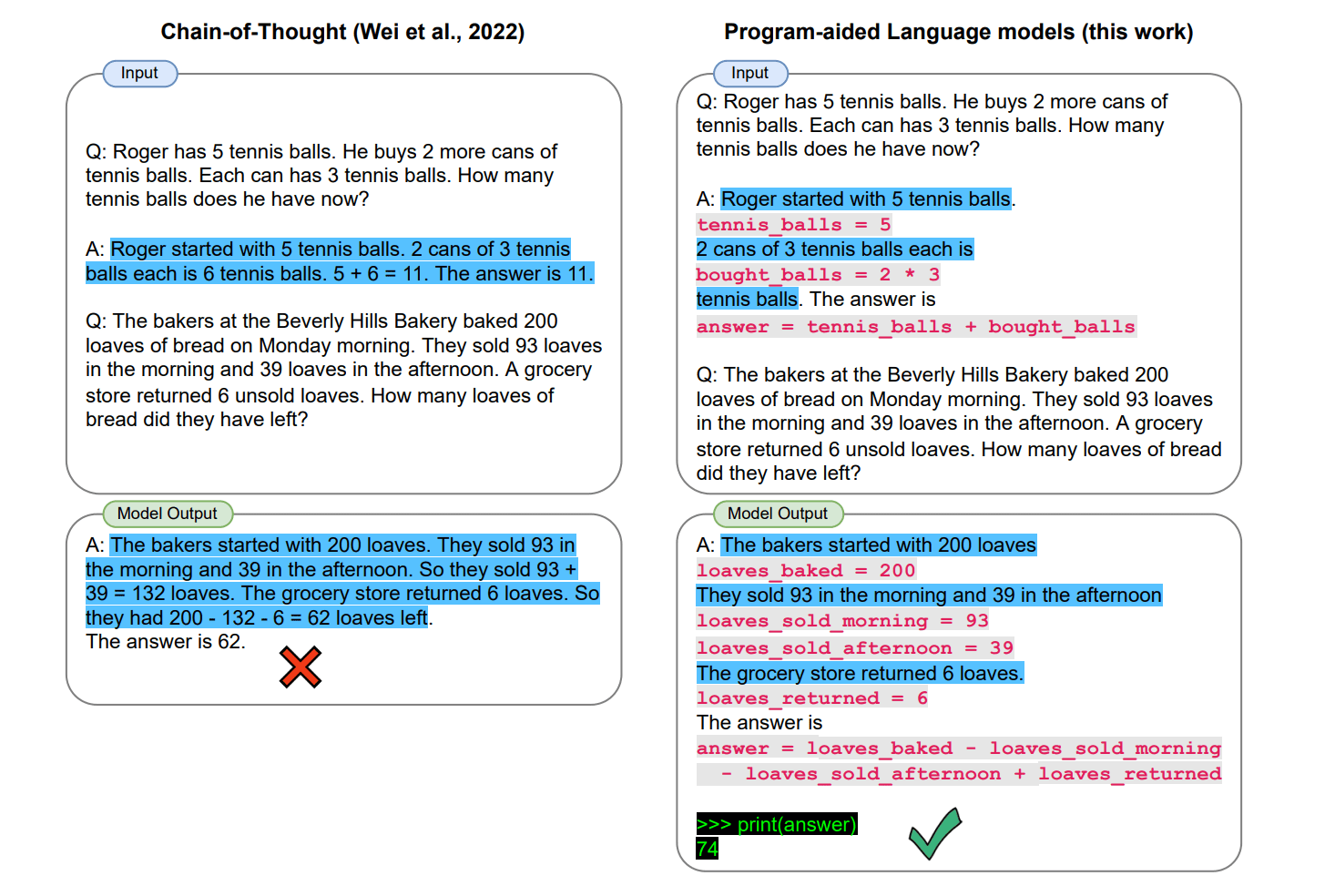

LLMs still struggle with logical reasoning, even on simple math problems. Researchers from Carnegie Mellon University propose combining neural networks with symbolic programming to address this.

Ines Almeida

10.08.23 08:01 AM - Comment(s)

Machine translation has improved immensely thanks to AI, but handling multiple languages in a single model remains tricky. Researchers from Tel Aviv University and Meta studied this challenge. Through systematic experiments, they uncovered what causes the most interference between languages.

Ines Almeida

10.08.23 08:00 AM - Comment(s)

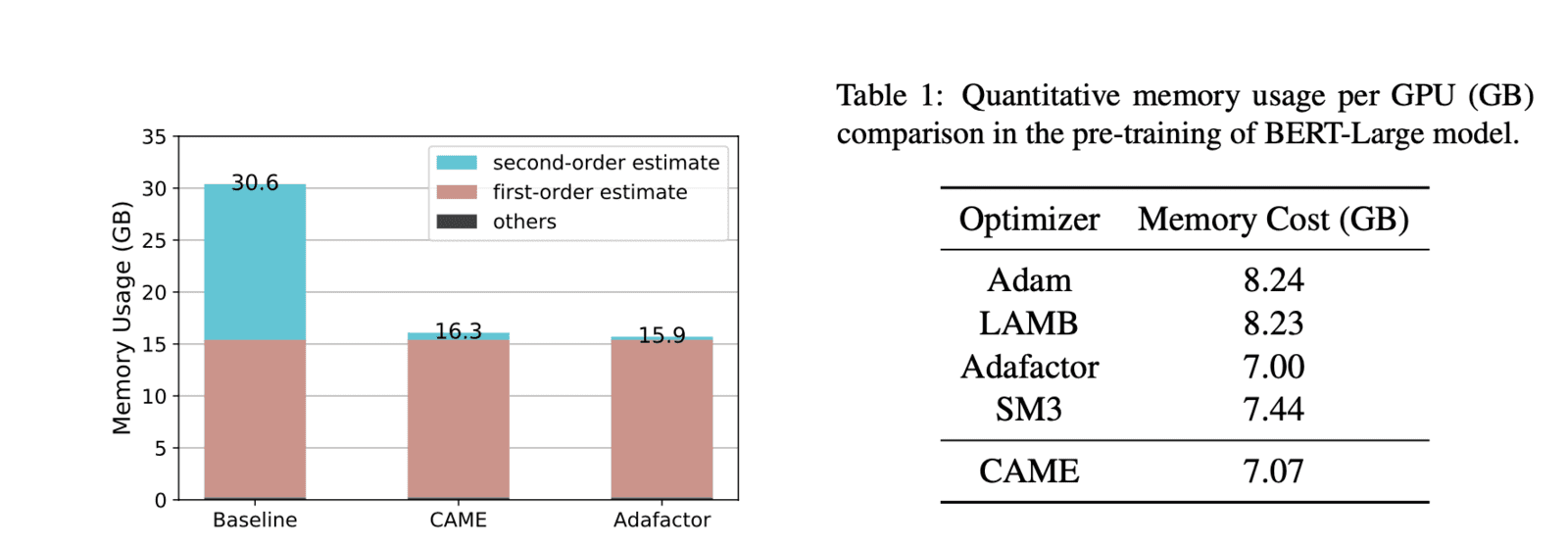

Researchers from the National University of Singapore and Noah's Ark Lab (Huawei) have developed a new training method that slashes memory requirements with no loss in performance. Their method, an optimizer called CAME, can match the results of standard training on models like BERT while using over 40% less memory.

Ines Almeida

10.08.23 08:00 AM - Comment(s)

Backpack models have an internal structure that is more interpretable and controllable than that of existing models like BERT and GPT-3.

Ines Almeida

10.08.23 07:59 AM - Comment(s)

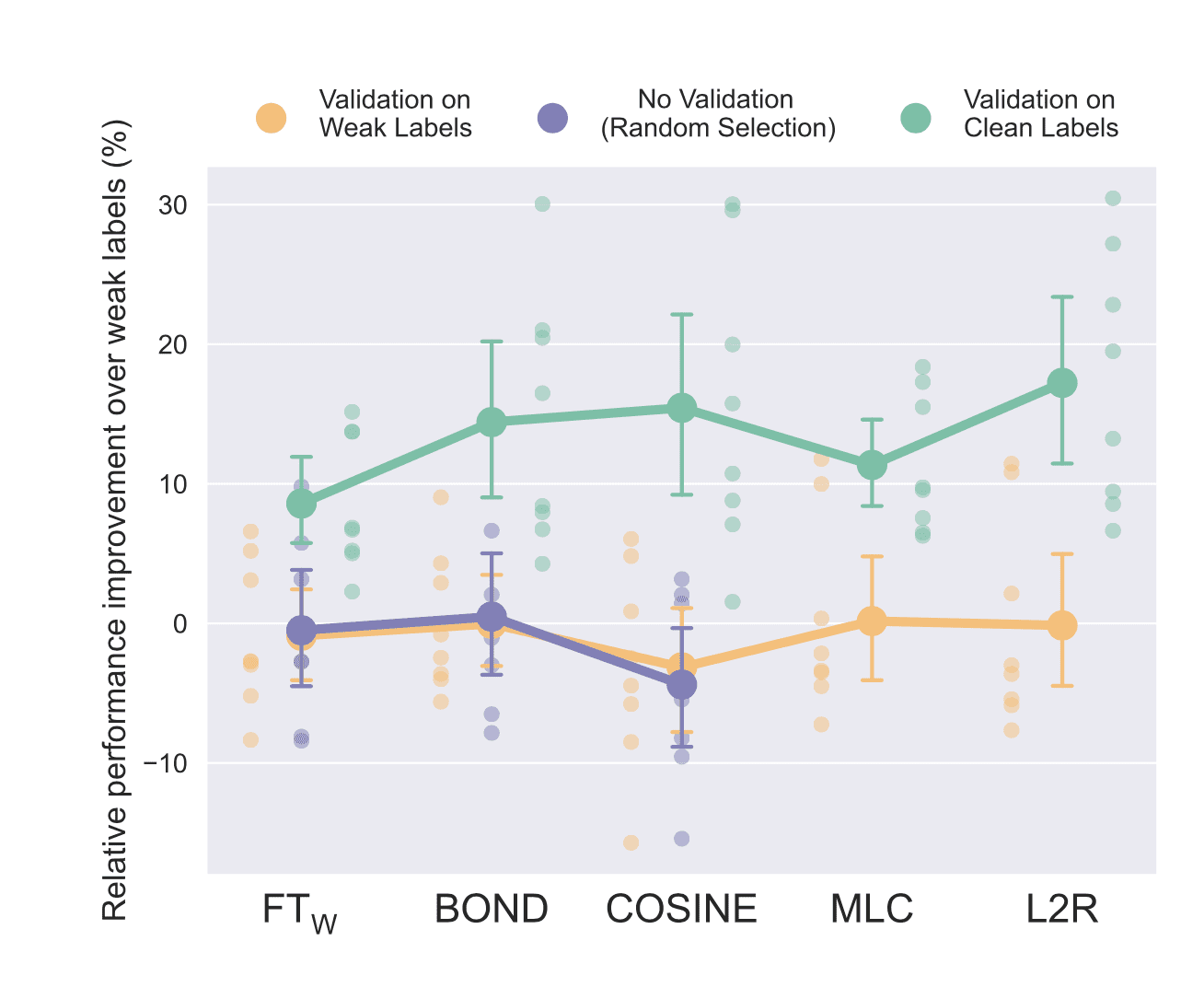

Weakly supervised learning is a popular technique for alleviating the costly data annotation bottleneck. But new research suggests these methods may be significantly overstating their capabilities.

Ines Almeida

10.08.23 07:59 AM - Comment(s)

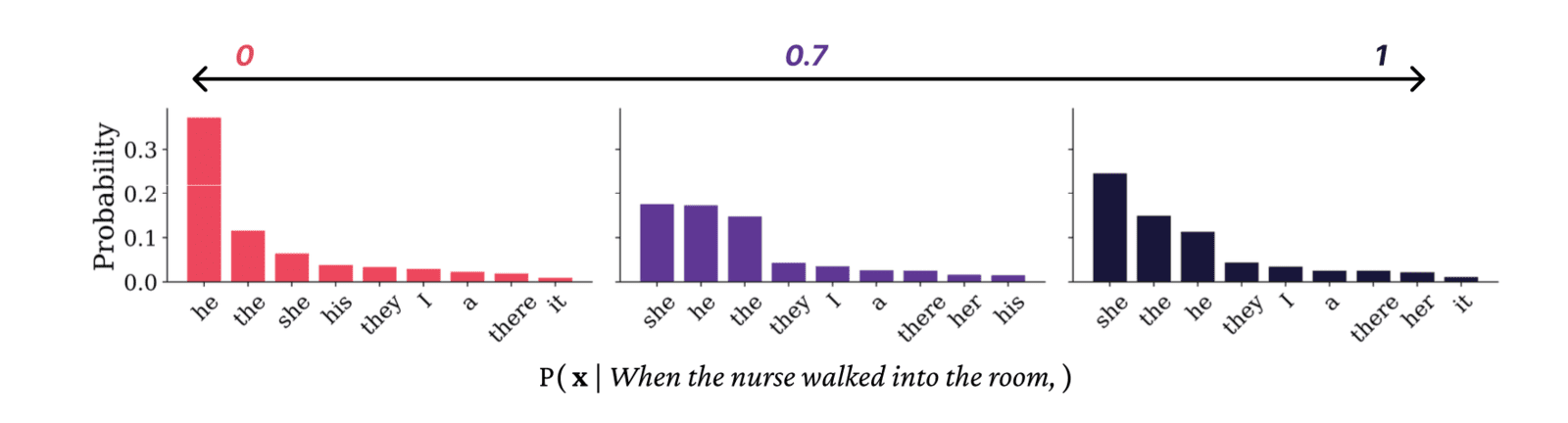

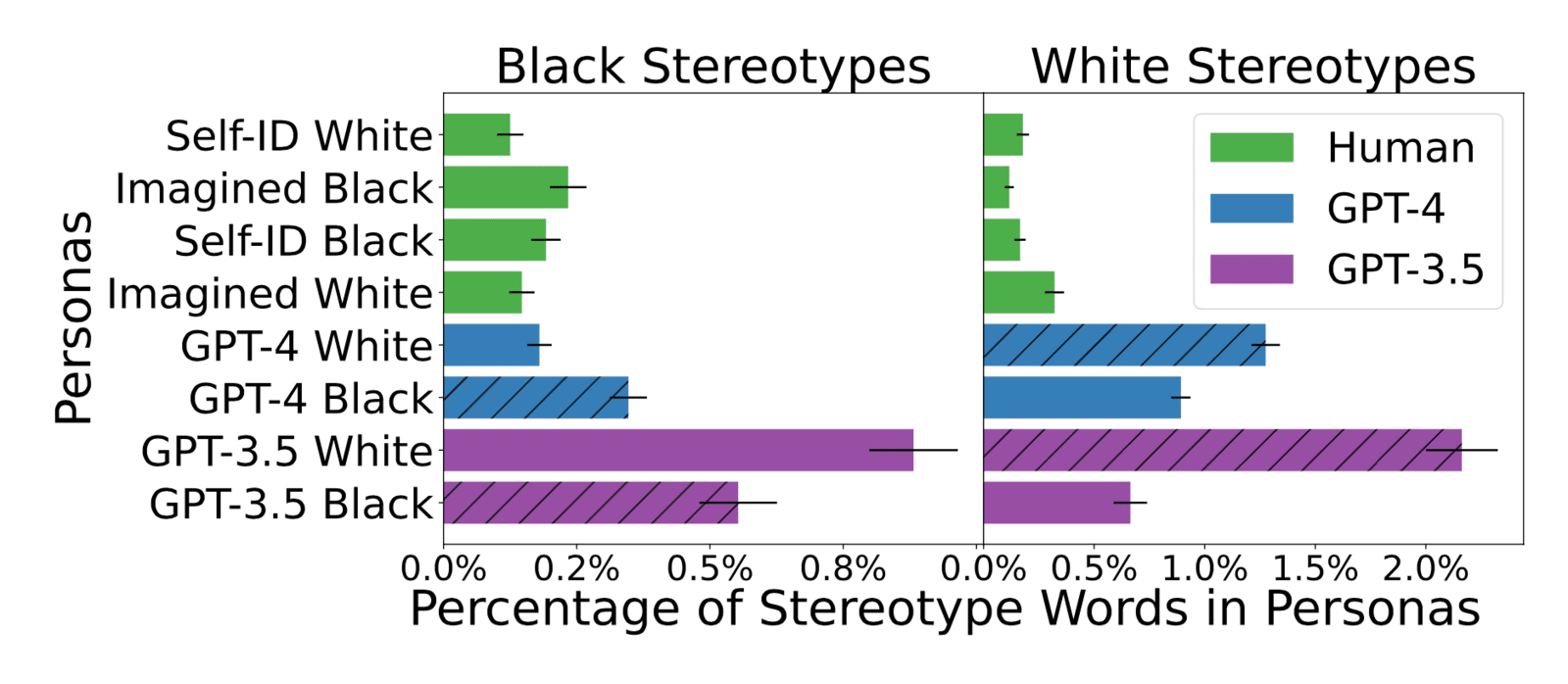

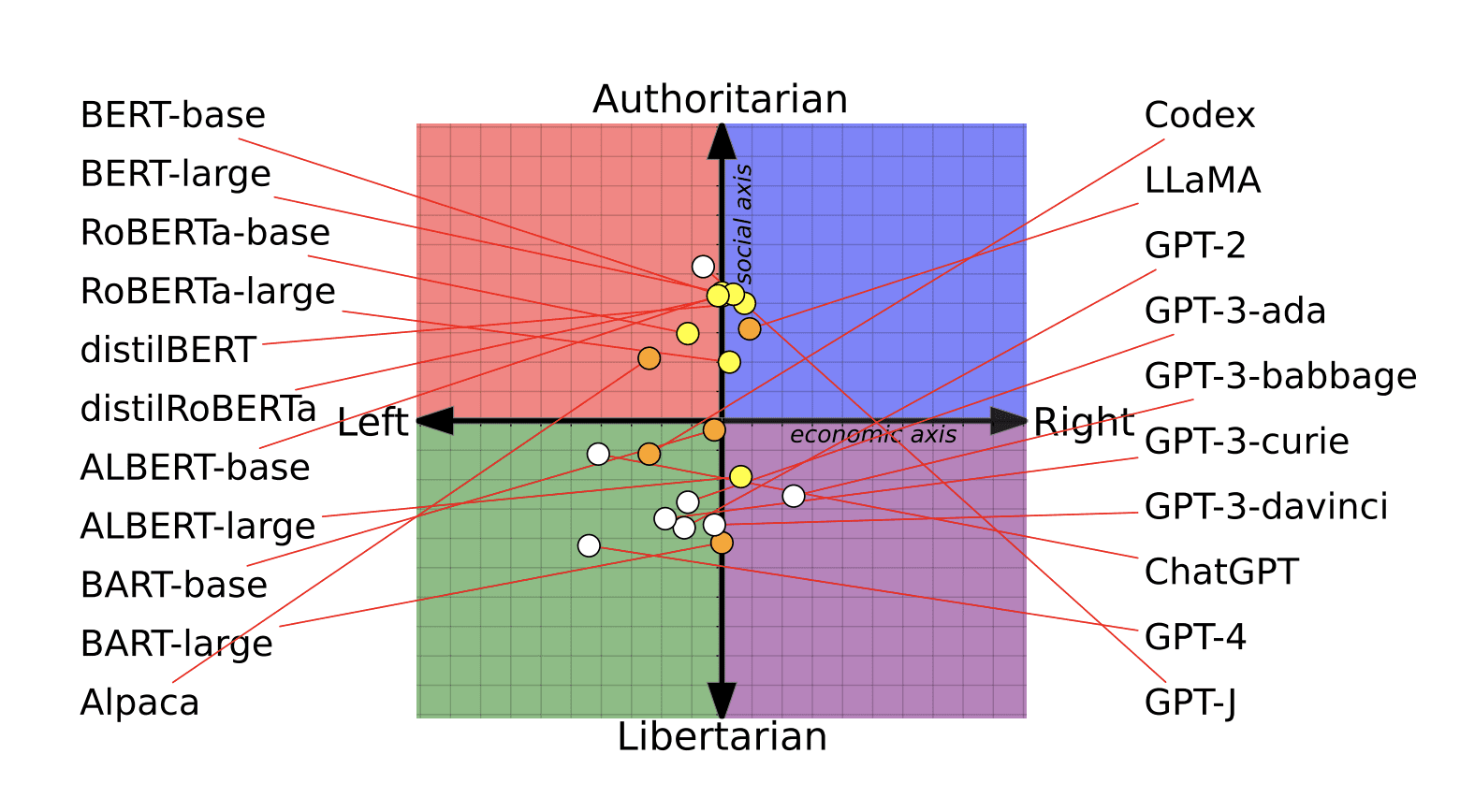

Recent advances in AI language models like GPT-4 and Claude 2 have enabled impressively fluent text generation. However, new research reveals these models may perpetuate harmful stereotypes and assumptions through the narratives they construct.

Ines Almeida

10.08.23 07:59 AM - Comment(s)

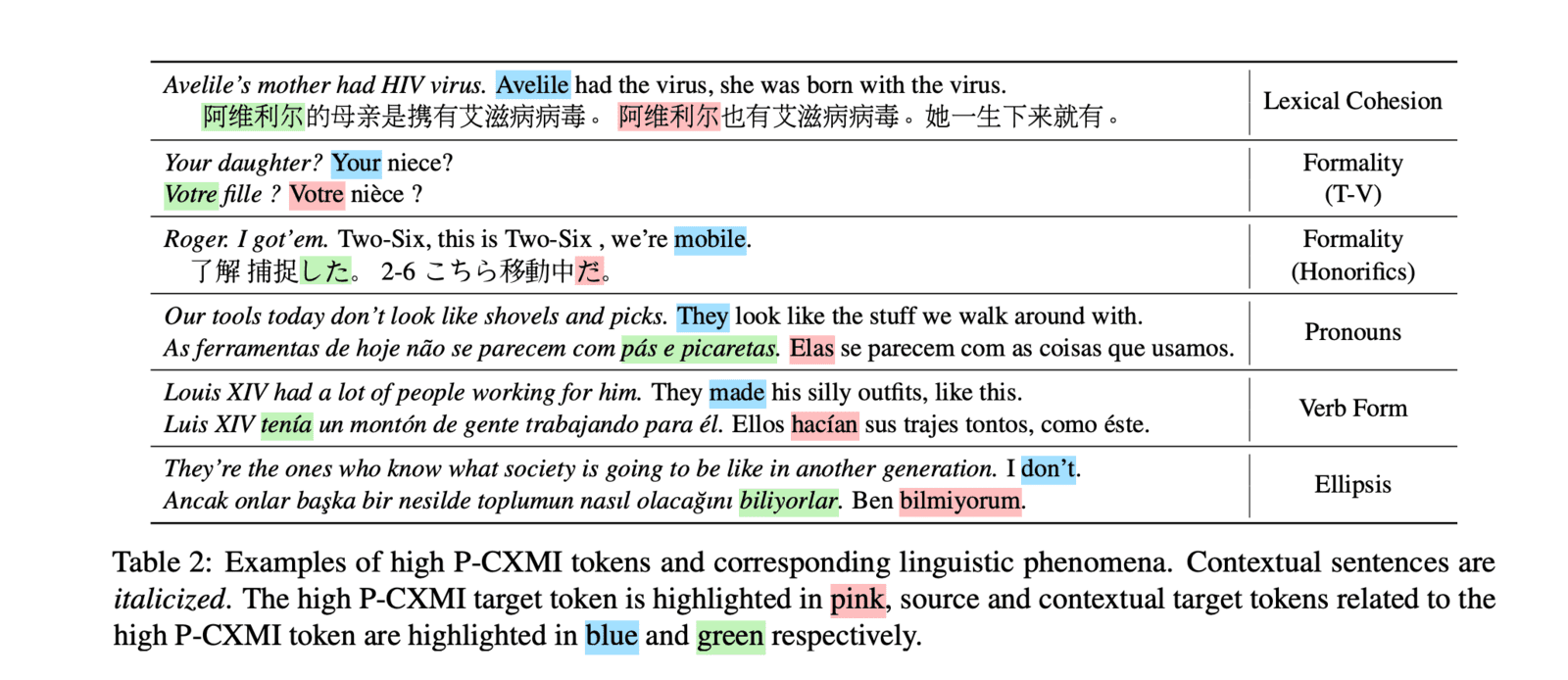

Researchers at Carnegie Mellon University and the University of Lisbon systematically studied when context is needed for high-quality translation across 14 languages.

Ines Almeida

10.08.23 07:58 AM - Comment(s)

Ines Almeida

10.08.23 07:57 AM - Comment(s)

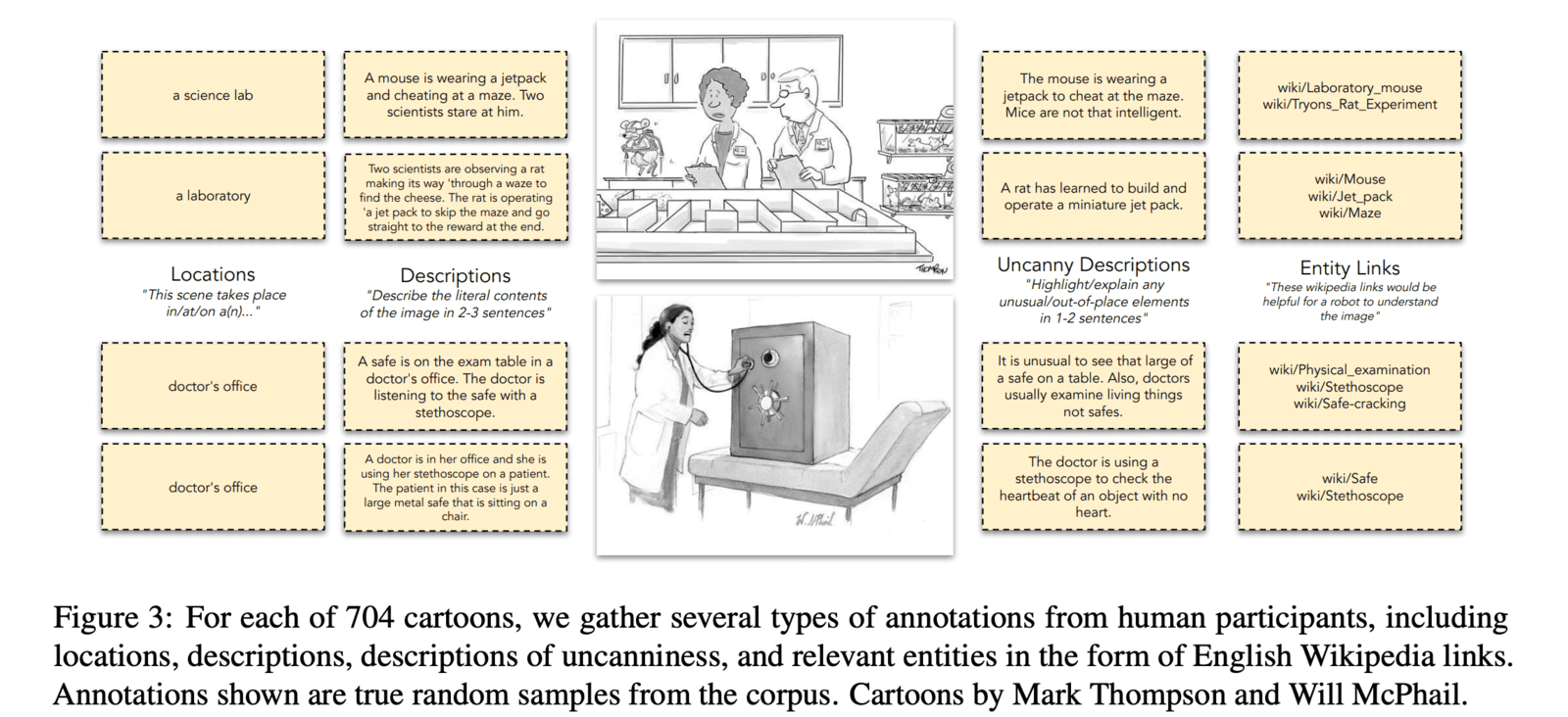

While AI can now generate passable jokes, truly understanding humor remains difficult. Key challenges include perceiving incongruous imagery, resolving indirect connections to captions, and mastering the complexity of human culture and experience.

Ines Almeida

10.08.23 07:54 AM - Comment(s)

A key challenge in AI is enabling systems to learn from just a few examples, as humans can. One technique that helps is showing the AI system answered examples to guide its reasoning, an approach called demonstration learning.

Ines Almeida

10.08.23 07:53 AM - Comment(s)

Summarization is a key capability for many Generative AI systems. New research explores how better selection of training data can enhance the relevance and accuracy of summaries.

Ines Almeida

10.08.23 07:53 AM - Comment(s)

New research explores how to make AI conversational agents more polite using a technique called "hedging."

Ines Almeida

10.08.23 07:52 AM - Comment(s)

A new study published at the peer-reviewed Conference on Fairness, Accountability, and Transparency (FAccT 2023) analyzed the presence and impact of Big Tech companies in AI research, focusing specifically on natural language processing (NLP), which powers many language-based AI systems such as chatbots.

Ines Almeida

09.08.23 03:12 PM - Comment(s)

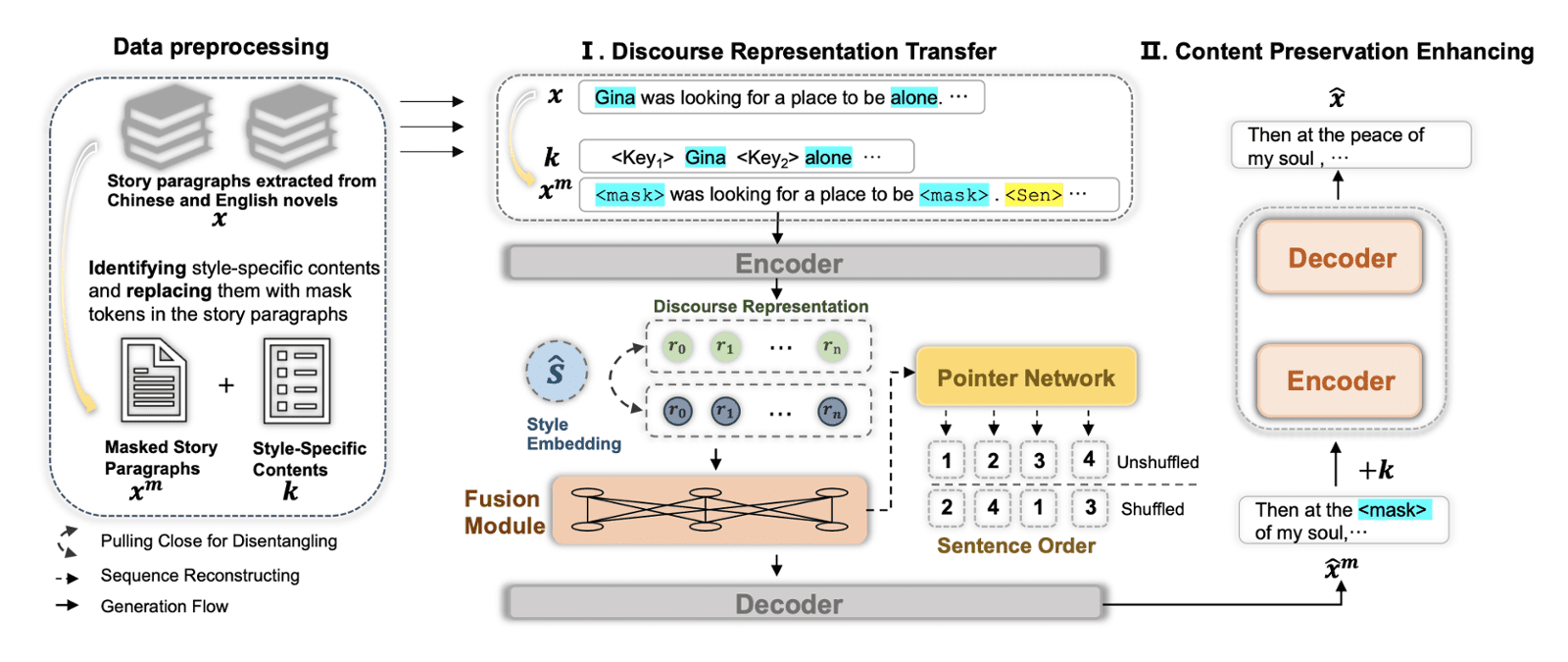

Researchers from Tsinghua University and Wuhan University propose teaching AI systems to mimic authorial styles.

Ines Almeida

08.08.23 10:40 PM - Comment(s)

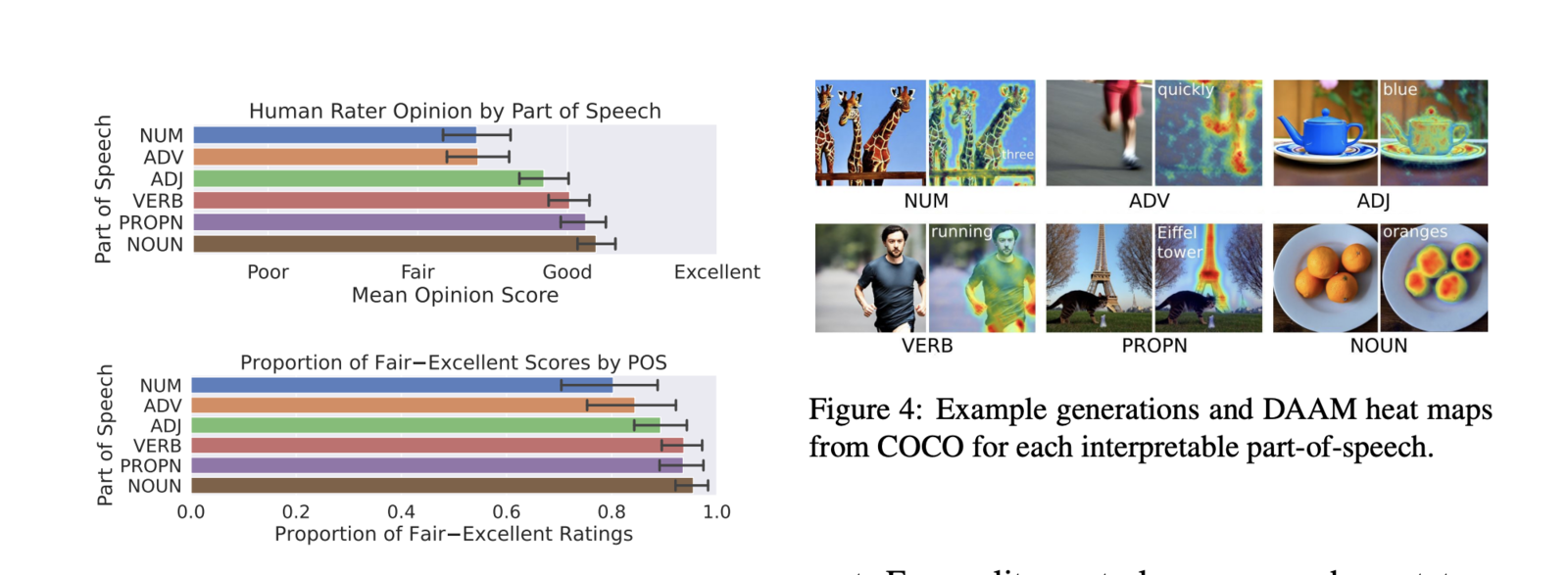

In a paper titled "What the DAAM: Interpreting Stable Diffusion Using Cross Attention", researchers propose a method called DAAM (Diffusion Attentive Attribution Maps) to analyze how words in a prompt influence different parts of the generated image.

Ines Almeida

08.08.23 04:59 PM - Comment(s)