Blog by Ines Almeida

Ines Almeida

10.08.23 11:43 AM

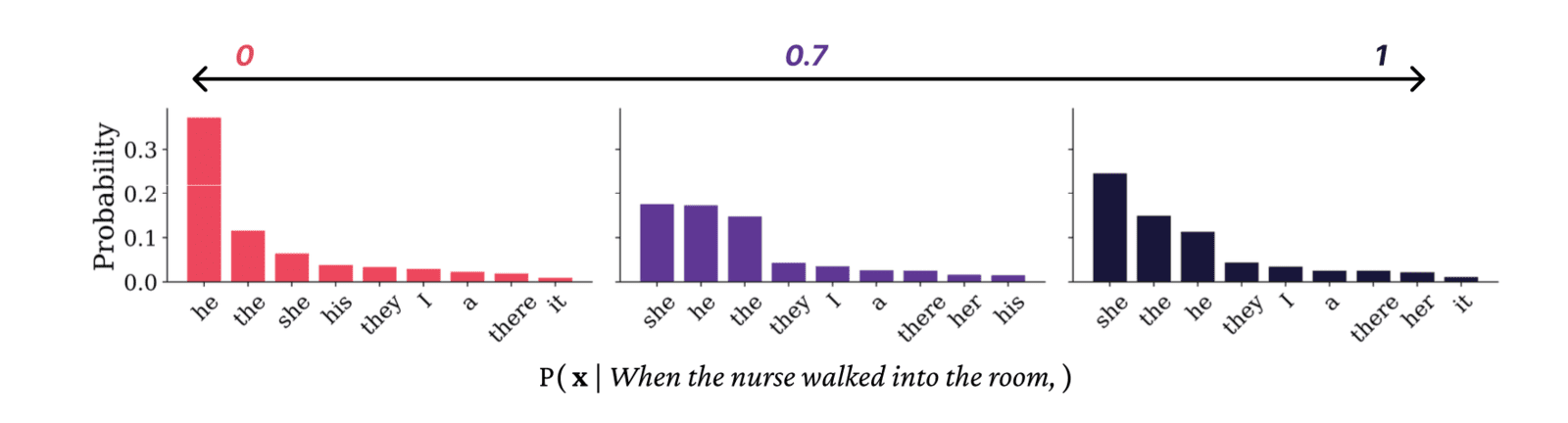

Researchers have developed a benchmark dataset called LAMBADA to rigorously test how well AI models use broader discourse context when predicting the final word of a passage.
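To make the evaluation idea concrete, here is a minimal sketch of how a LAMBADA-style score can be computed: hide the last word of each passage, ask a predictor for it, and count exact matches. The passages, the whitespace tokenization, and the `most_frequent_word` baseline are all hypothetical stand-ins chosen for illustration. In the real benchmark the predictor is a language model and the passages come from novels.

```python
from collections import Counter
from typing import Callable, List, Tuple


def split_target(passage: str) -> Tuple[str, str]:
    """Split a passage into (context, target), where target is the final word."""
    context, _, target = passage.rstrip().rpartition(" ")
    return context, target


def lambada_accuracy(passages: List[str], predict: Callable[[str], str]) -> float:
    """Fraction of passages whose final word the predictor gets exactly right."""
    hits = 0
    for passage in passages:
        context, target = split_target(passage)
        if predict(context) == target:
            hits += 1
    return hits / len(passages)


def most_frequent_word(context: str) -> str:
    # Hypothetical toy baseline: guess the word that occurs most often in the context.
    return Counter(context.split()).most_common(1)[0][0]


# Toy passages (made up): the right answer depends on who the story is about.
passages = [
    "Anna gave Tom the map and Tom later thanked Anna",
    "Mia baked bread because Mia loves baking and everyone praised Mia",
]
print(lambada_accuracy(passages, most_frequent_word))  # 0.5
```

The frequency baseline gets the second passage right and the first one wrong, which is the point of the benchmark: a model has to track the discourse, not just local word statistics, to do well.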

Ines Almeida

10.08.23 08:08 AM

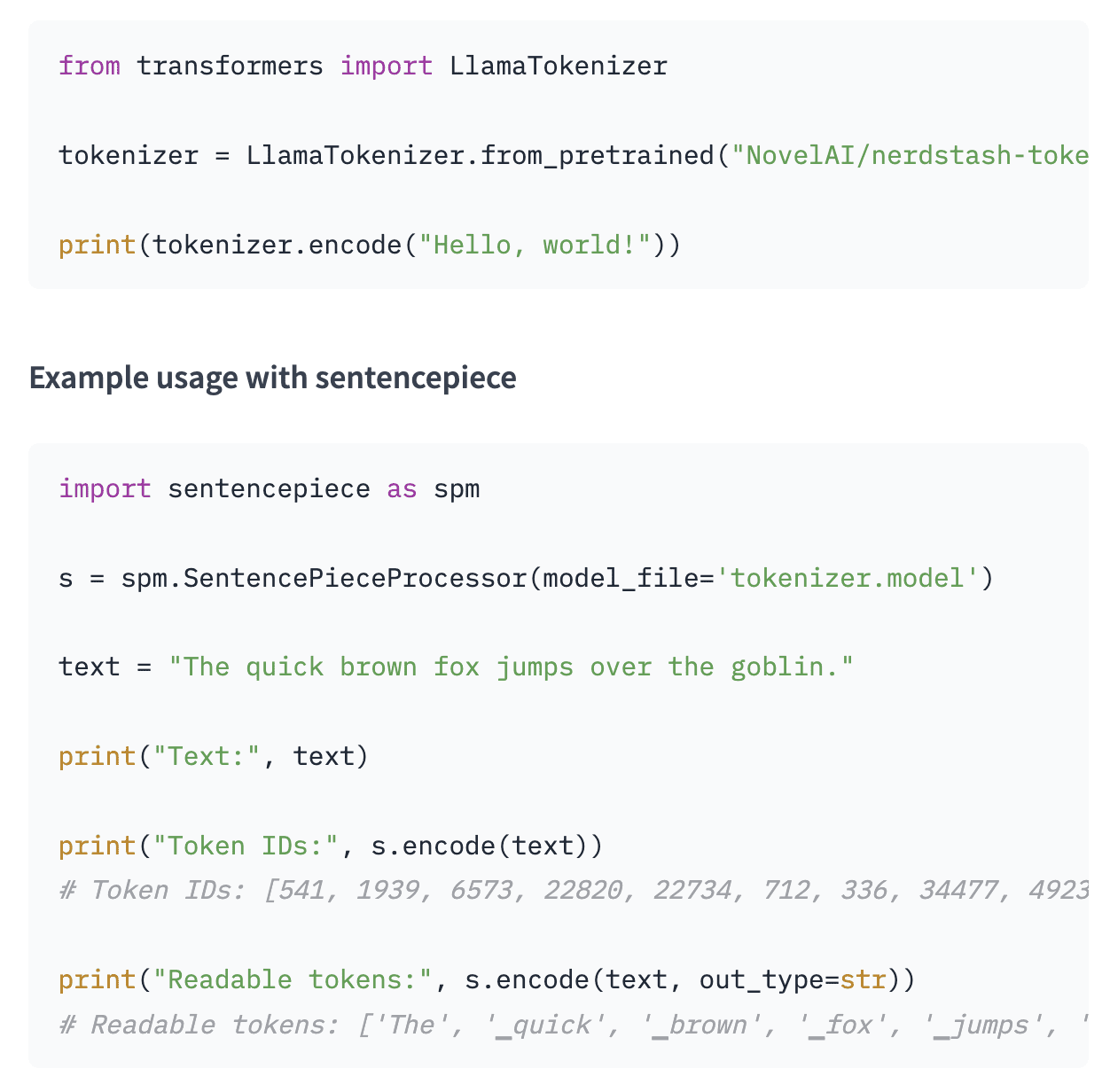

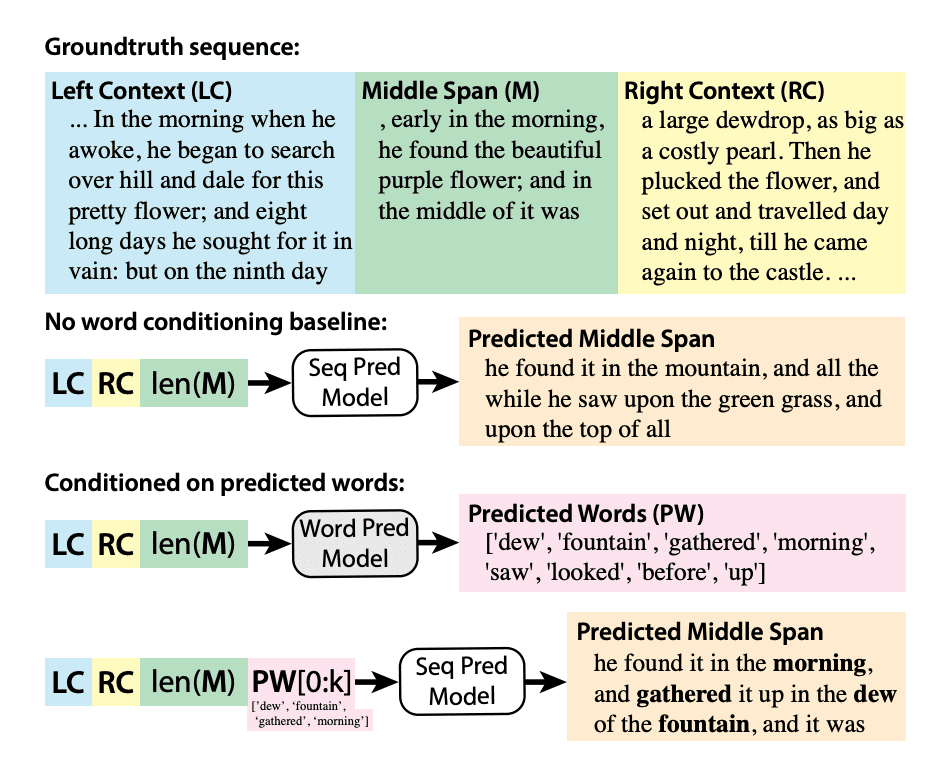

AI research from 2019 explored how to automatically generate reasonable suggestions for missing sections of text.

Ines Almeida

10.08.23 08:07 AM

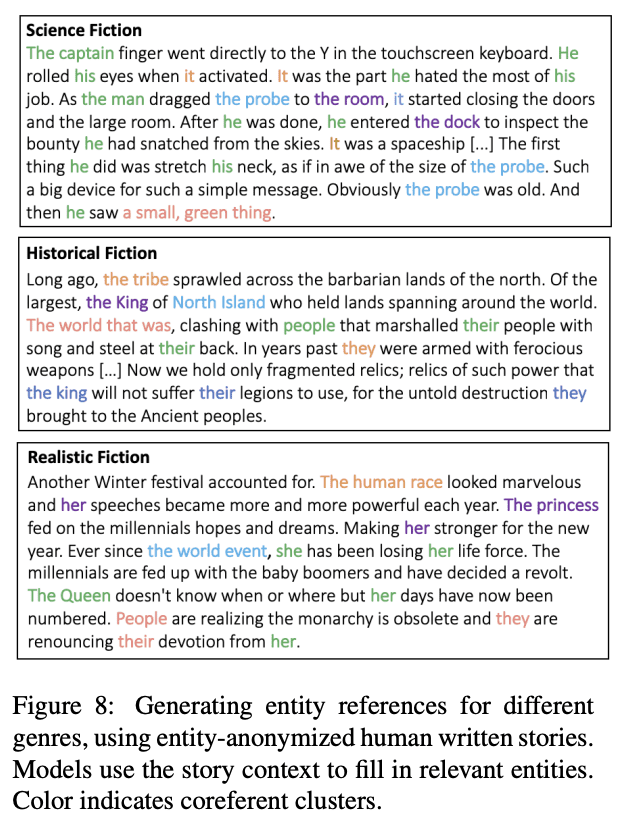

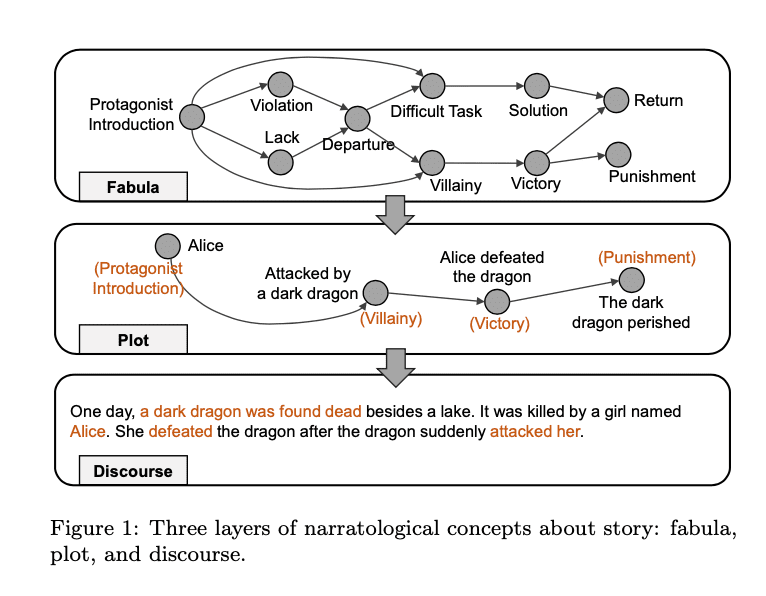

In 2019, researchers explored how artificial intelligence could use hierarchical models to improve computer-generated stories.

Ines Almeida

10.08.23 08:07 AM

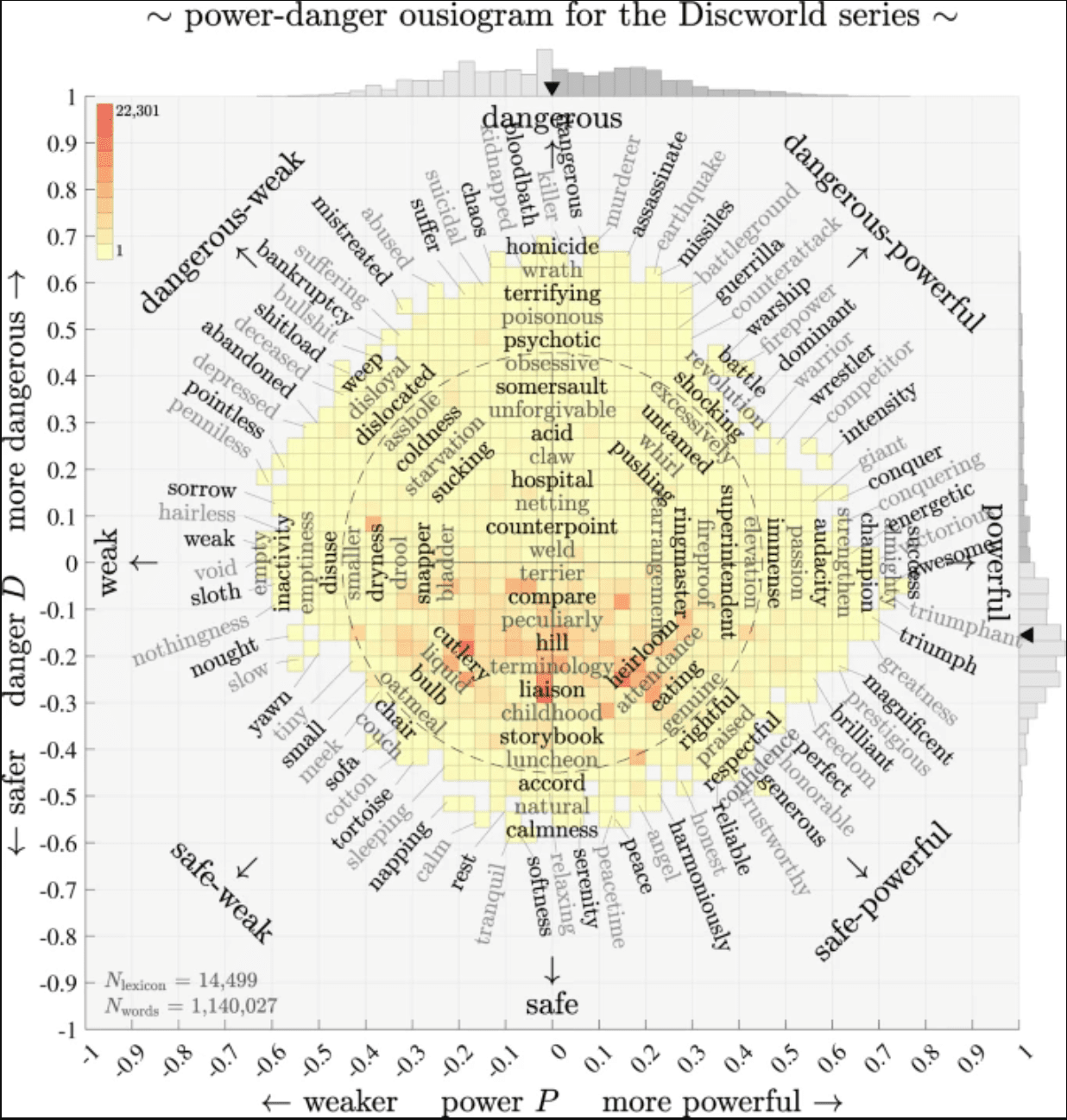

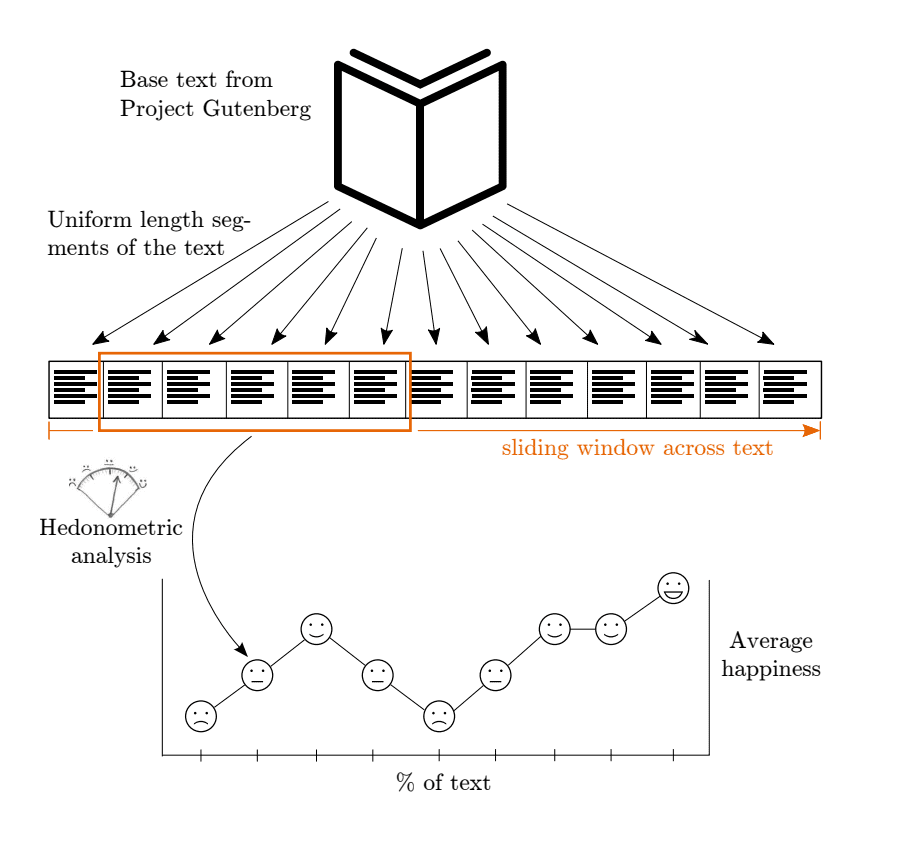

Books may seem like straightforward stories, but researchers are finding fascinating mathematical patterns hidden in the text.

Ines Almeida

10.08.23 08:06 AM

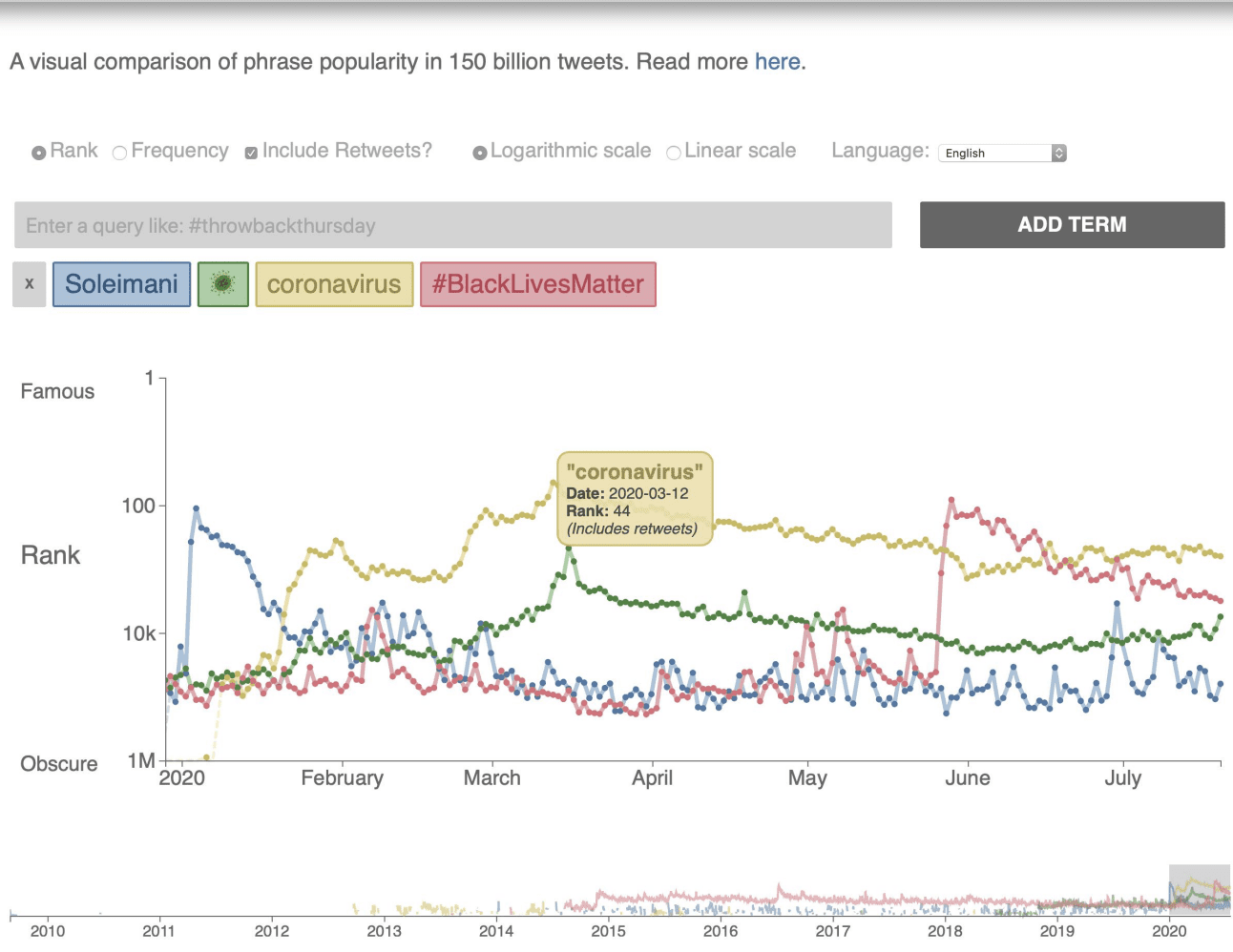

Researchers developed a tool called Storywrangler that leveraged Twitter data to create an "instrument for understanding our world through the lens of social media."

Ines Almeida

10.08.23 08:05 AM

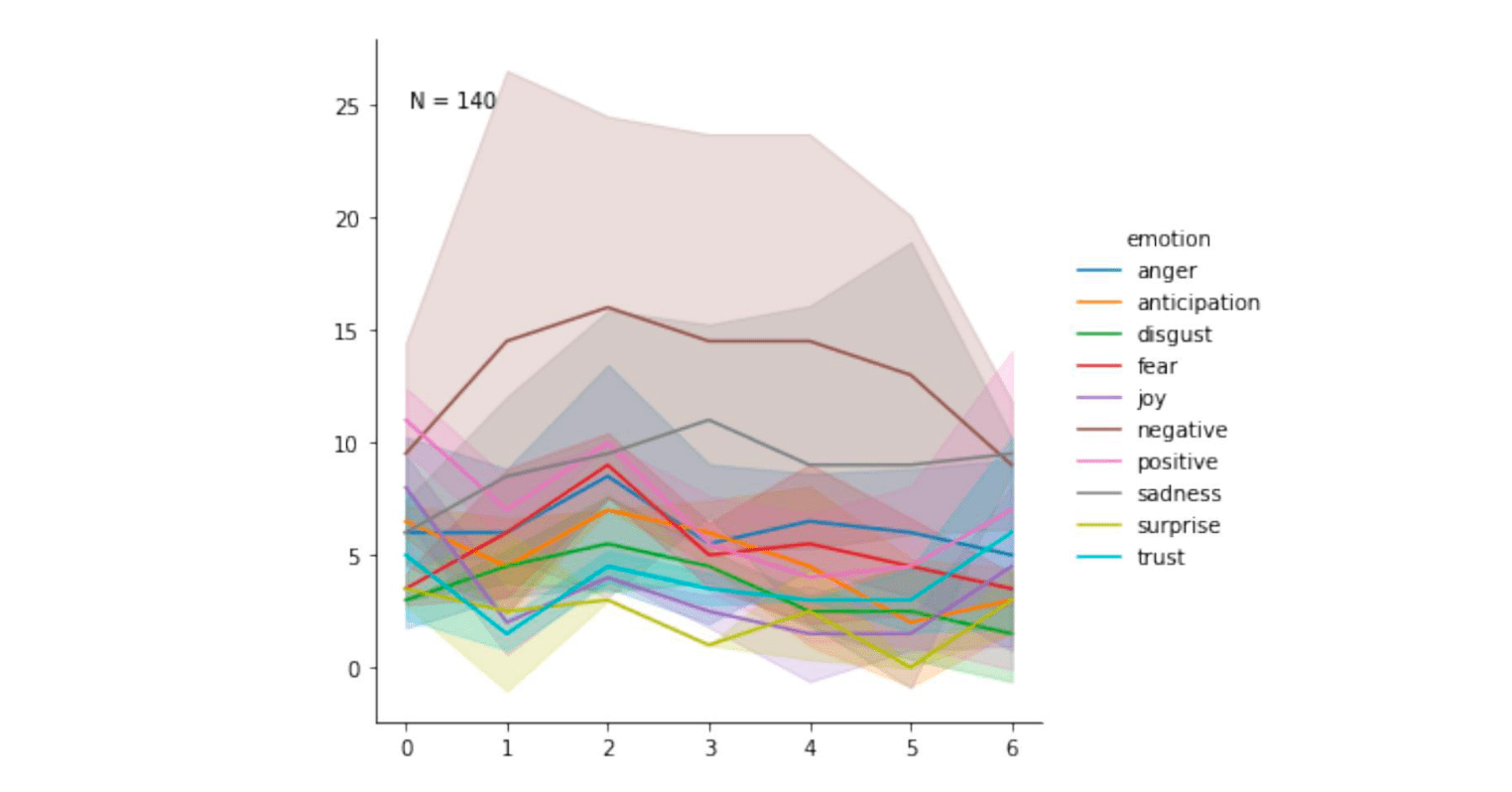

A 2016 study analyzed over a thousand stories to uncover the basic emotional arcs that form the building blocks of narratives.

Ines Almeida

10.08.23 08:05 AM

New research explores how integrating structured knowledge into AI systems can enhance storytelling abilities.

Ines Almeida

10.08.23 08:05 AM

New research from Stanford University demonstrates how "emotion maps" could improve story generation.

Ines Almeida

10.08.23 08:04 AM

While machine translation has improved dramatically for major languages, quality translation still lags for thousands of smaller languages.

Ines Almeida

10.08.23 08:04 AM

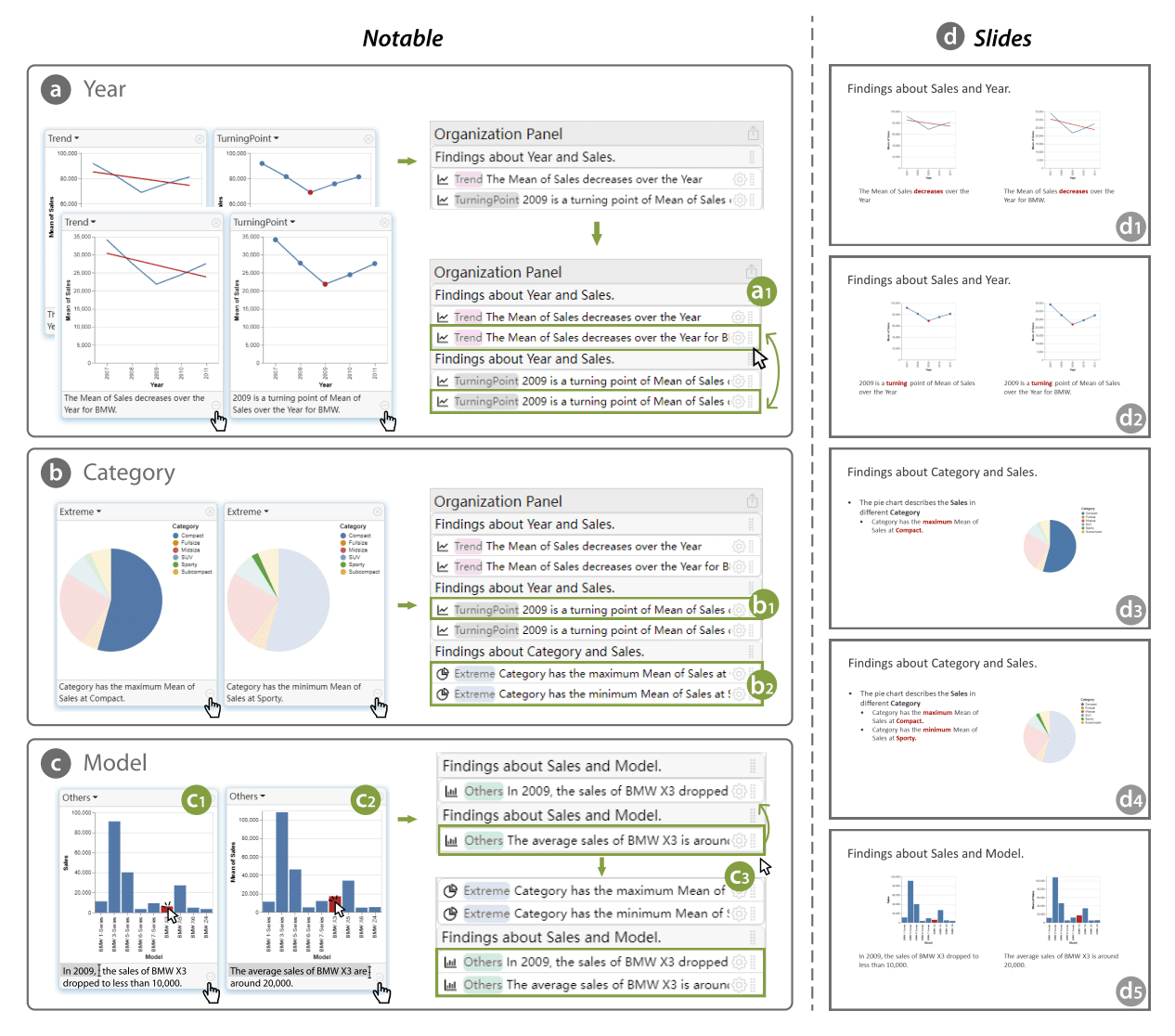

Data analysis often involves exploring data to unearth insights, then crafting stories to communicate those findings. But converting discoveries into coherent narratives poses challenges. Researchers have developed an AI assistant called Notable that streamlines data storytelling.

Ines Almeida

10.08.23 08:04 AM

Back in 2018, researchers from Facebook AI developed a new method to improve story generation through hierarchical modeling. Their approach mimics how people plan out narratives.

Ines Almeida

10.08.23 08:03 AM

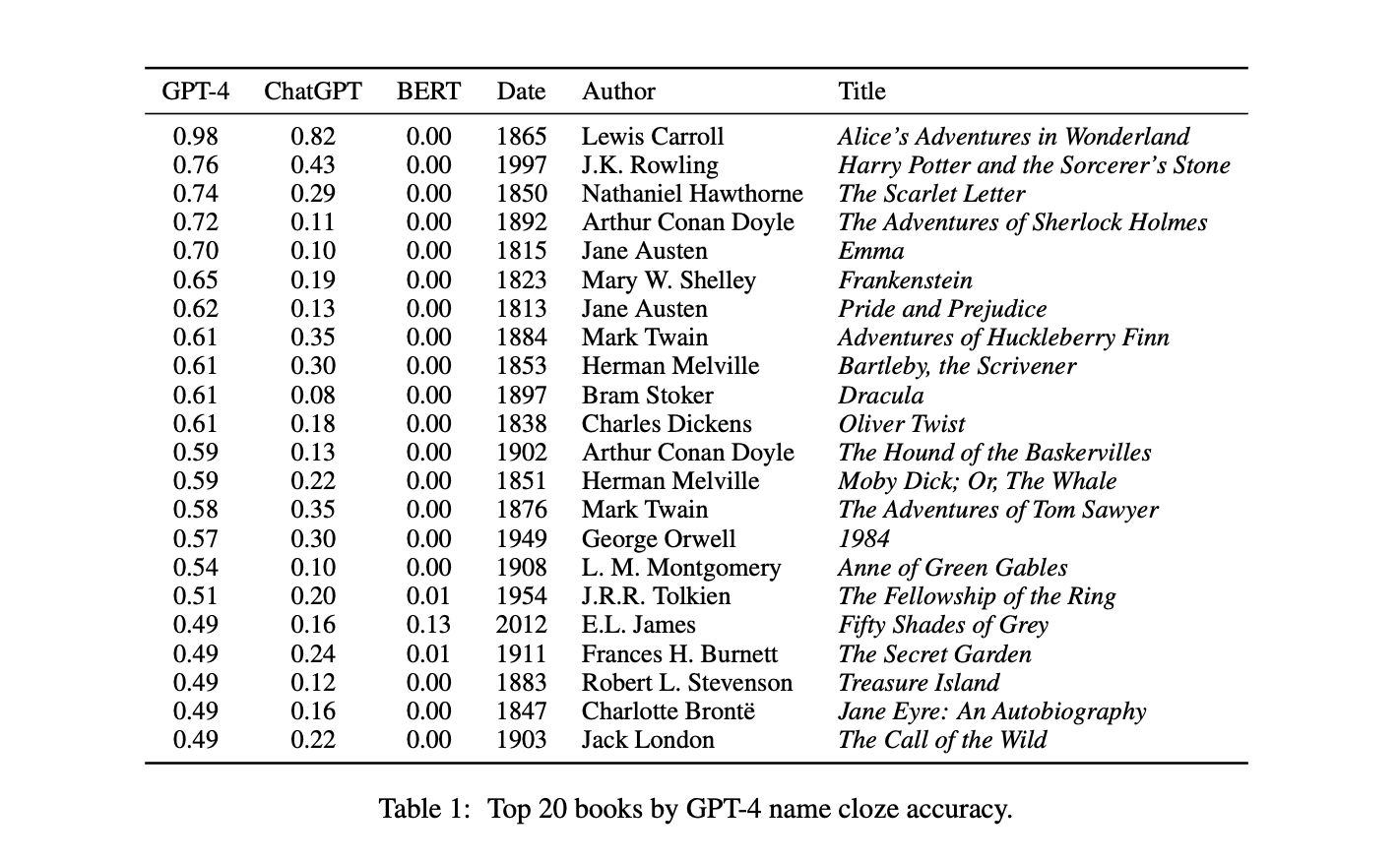

New research from the University of California, Berkeley sheds light on one slice of what large language models know: which books they have "read" and memorized. The study uncovers systematic biases in which texts these AI systems know most about.

Ines Almeida

10.08.23 08:03 AM

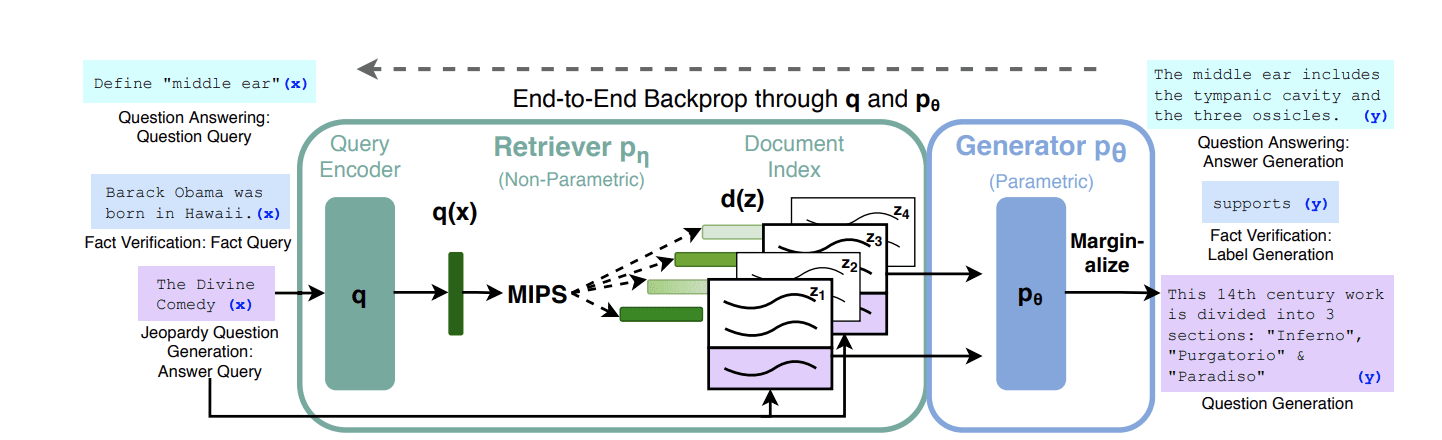

Retrieval-augmented generation (RAG) systems learn to retrieve and incorporate external knowledge when generating text.
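The core retrieve-then-generate loop can be sketched in a few lines. This is a simplified illustration under clear assumptions: it swaps in plain bag-of-words cosine similarity for the learned neural retriever that RAG systems actually train, uses a made-up three-document store, and stops at building the augmented prompt, leaving out the generator model. The helper names (`retrieve`, `build_prompt`) are hypothetical.

```python
import math
from collections import Counter
from typing import List


def bag_of_words(text: str) -> Counter:
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Counter returns 0 for missing words, so words absent from b contribute nothing.
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query (a stand-in for a learned retriever)."""
    q = bag_of_words(query)
    return sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str], k: int = 1) -> str:
    """Prepend retrieved passages so the generator can condition on external knowledge."""
    context = "\n".join(f"- {p}" for p in retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


docs = [
    "Paris is the capital of France",
    "The Nile is a river in Africa",
    "Python is a programming language",
]
query = "what is the capital of france"
prompt = build_prompt(query, docs, k=1)
print(prompt)
```

Keeping retrieval separate from generation is what lets the knowledge store be updated without retraining the generator, which is a large part of the appeal of this design.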

Ines Almeida

10.08.23 08:03 AM

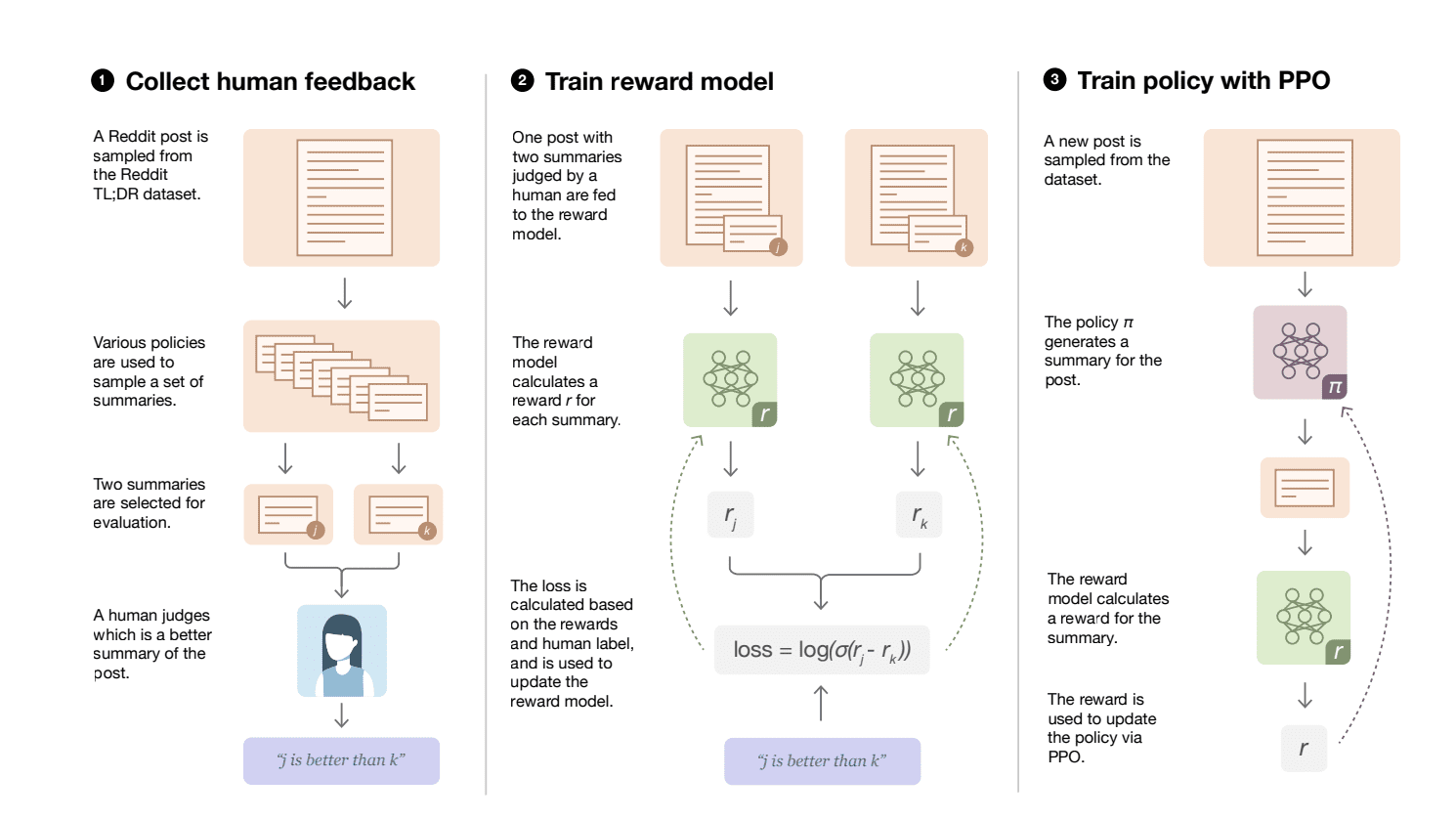

Researchers found that training a summarization model to optimize human preferences directly significantly boosted performance compared with training it only to imitate reference summaries.
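A common way to turn "human preferences" into a training signal is a reward model fitted to pairwise comparisons, using the loss $-\log \sigma(r_{\text{chosen}} - r_{\text{rejected}})$. The sketch below shows only that step, assuming a hypothetical linear reward over two made-up summary features; it is not the post's exact setup, and the follow-up step of optimizing the summarizer against the learned reward is omitted.

```python
import math
from typing import List, Sequence, Tuple


def preference_loss(r_chosen: float, r_rejected: float) -> float:
    """-log sigmoid(r_chosen - r_rejected): small when the human-preferred summary scores higher."""
    return math.log1p(math.exp(-(r_chosen - r_rejected)))


def reward(w: Sequence[float], x: Sequence[float]) -> float:
    # Hypothetical linear reward model over hand-made summary features.
    return sum(wi * xi for wi, xi in zip(w, x))


def train_reward_model(pairs: List[Tuple[List[float], List[float]]], dim: int,
                       lr: float = 0.5, epochs: int = 200) -> List[float]:
    w = [0.0] * dim
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # d/dw of -log sigmoid(margin) is -sigmoid(-margin) * (chosen - rejected),
            # so a gradient-descent step adds lr * sigmoid(-margin) * (chosen - rejected).
            g = 1.0 / (1.0 + math.exp(margin))
            w = [wi + lr * g * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w


# Made-up comparisons: (features of the summary a labeler preferred, features of the other one).
pairs = [
    ([1.0, 0.2], [0.0, 0.8]),
    ([0.9, 0.5], [0.2, 0.4]),
]
w = train_reward_model(pairs, dim=2)
print(w)
```

Unlike imitating a single reference summary, this objective only asks the model to rank the preferred output higher, which is closer to what human judges actually care about.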

Ines Almeida

10.08.23 08:02 AM

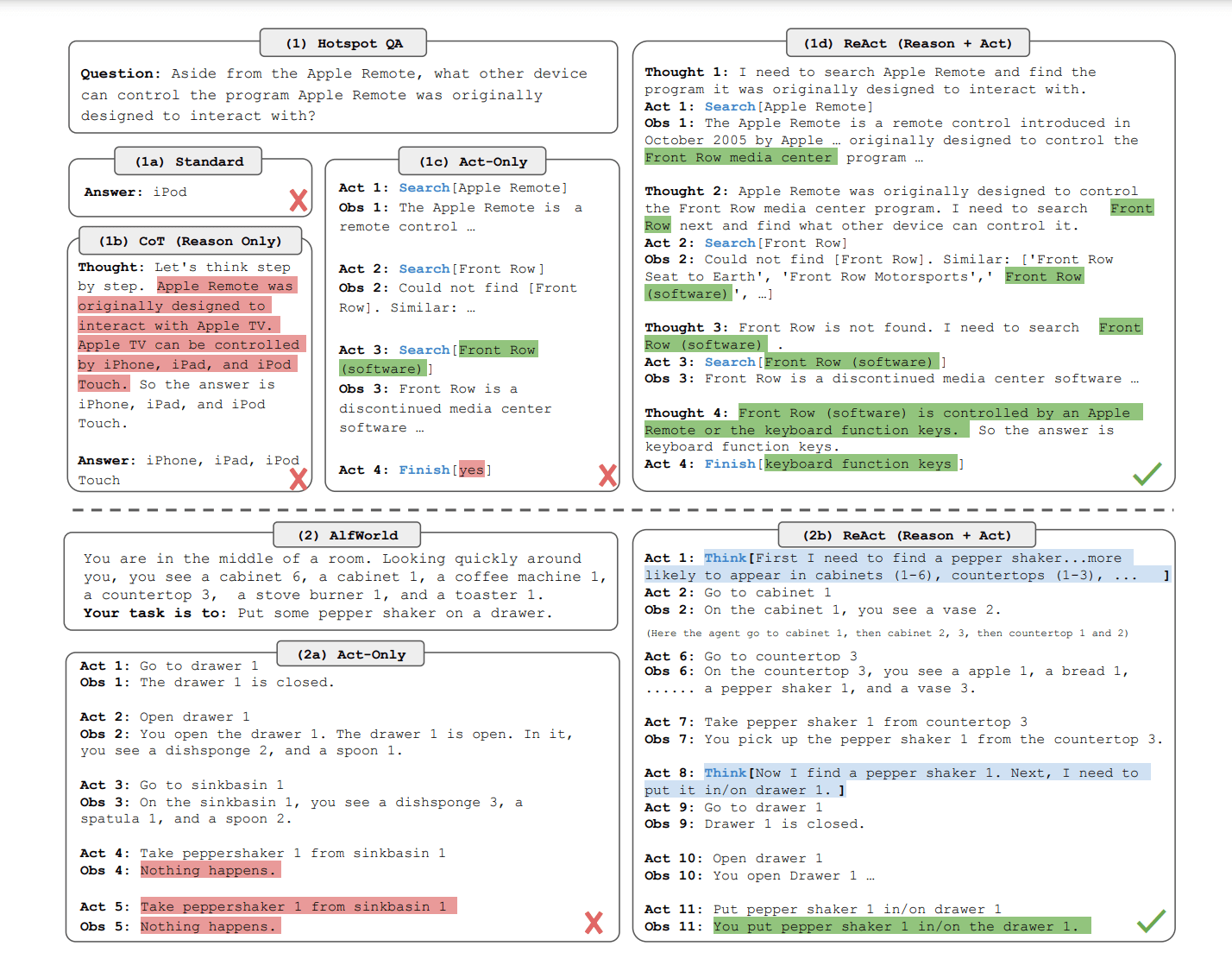

Large language models (LLMs) often struggle with reasoning and decision-making when tackling complex real-world problems. Researchers propose an approach called ReAct that interleaves reasoning steps with actions, such as looking up information, so the model can ground its reasoning and make fewer errors.
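The interleaving can be pictured as a loop of Thought, Action, and Observation steps. In this minimal sketch the LLM is replaced by a hypothetical `scripted_policy` that replays a fixed plan, and the only tool is a hypothetical `search` over a two-entry dictionary; in ReAct proper, a prompted language model produces each thought and action.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy tool: a lookup over a hard-coded "knowledge base".
KNOWLEDGE: Dict[str, str] = {
    "Eiffel Tower": "The Eiffel Tower is in Paris.",
    "Paris": "Paris is the capital of France.",
}


def search(entity: str) -> str:
    return KNOWLEDGE.get(entity, "No result.")


def scripted_policy(trace: List[str]) -> Tuple[str, str, str]:
    """Stand-in for the LLM: returns the next (thought, action, argument) given the trace."""
    plan = [
        ("I need to find where the Eiffel Tower is.", "search", "Eiffel Tower"),
        ("It is in Paris, so I should check which country Paris is in.", "search", "Paris"),
        ("Paris is in France, so I can answer.", "finish", "France"),
    ]
    actions_taken = sum(1 for line in trace if line.startswith("Action:"))
    return plan[actions_taken]


Policy = Callable[[List[str]], Tuple[str, str, str]]


def react(question: str, policy: Policy, tools: Dict[str, Callable[[str], str]],
          max_steps: int = 5) -> Tuple[Optional[str], List[str]]:
    trace = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(trace)        # reason about what to do next
        trace.append(f"Thought: {thought}")
        trace.append(f"Action: {action}[{arg}]")    # then act
        if action == "finish":
            return arg, trace
        trace.append(f"Observation: {tools[action](arg)}")  # feed the result back in
    return None, trace


answer, trace = react("Which country is the Eiffel Tower in?", scripted_policy, {"search": search})
print("\n".join(trace))
```

Because every observation is appended to the trace the policy sees, each new thought can build on facts retrieved in earlier steps rather than on the model's unaided memory.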

Ines Almeida

10.08.23 08:02 AM

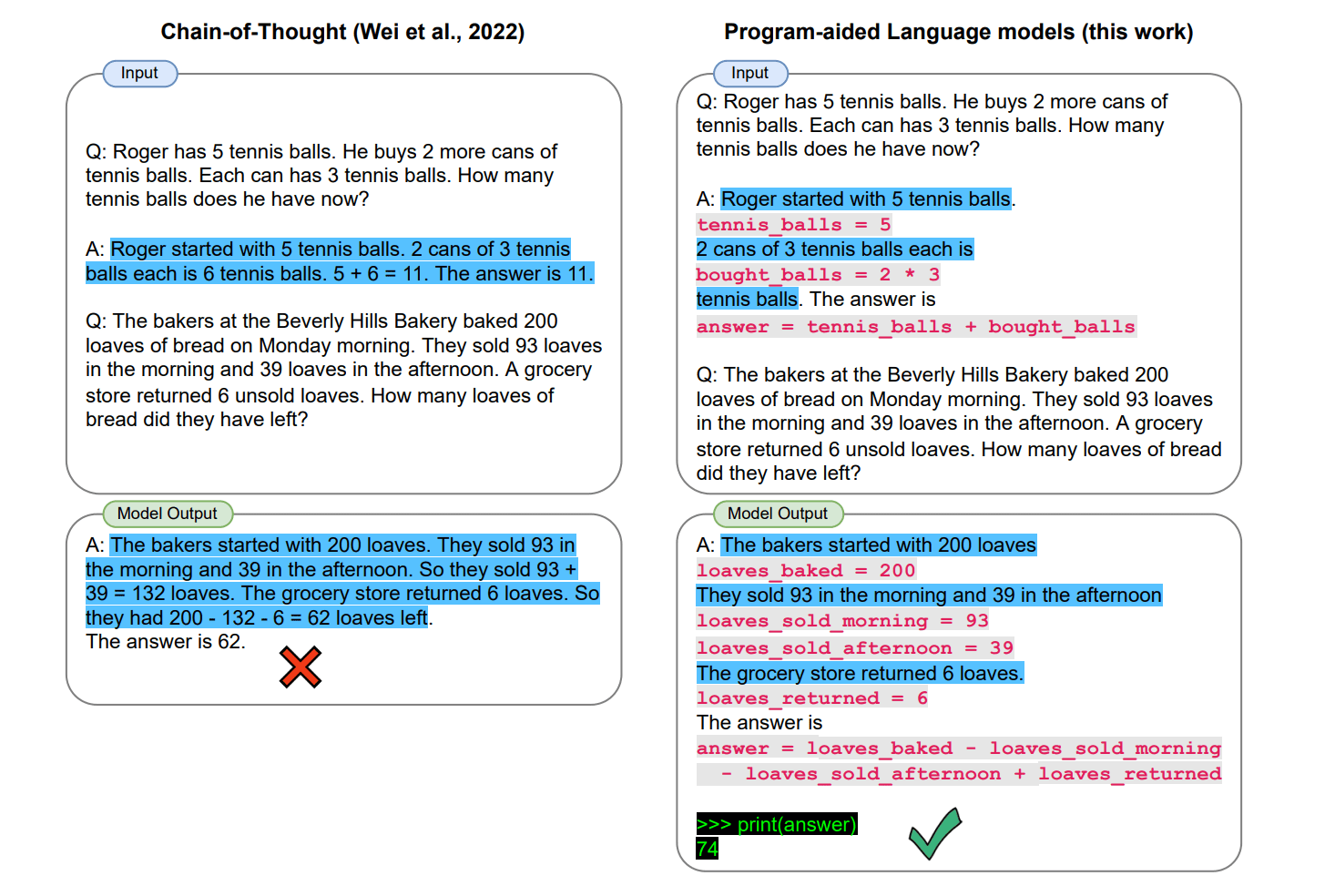

LLMs still struggle with logical reasoning, even on simple math problems. Researchers from Carnegie Mellon University propose combining neural networks with symbolic programming to address this.
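One way such a neural-symbolic split can work, sketched here under assumptions rather than taken from the post: the language model translates a word problem into a short program, and a Python interpreter, not the model, carries out the arithmetic. The `fake_llm_program` function is a hypothetical stand-in that returns a fixed program instead of calling a real model.

```python
from typing import Callable, Dict


def fake_llm_program(question: str) -> str:
    # Hypothetical stand-in for a language model that turns the word problem into Python.
    return (
        "apples_start = 23\n"
        "apples_used = 20\n"
        "apples_bought = 6\n"
        "answer = apples_start - apples_used + apples_bought\n"
    )


def solve_with_interpreter(question: str,
                           generate: Callable[[str], str] = fake_llm_program) -> int:
    program = generate(question)        # neural step: text -> program
    namespace: Dict[str, int] = {}
    # Symbolic step: the interpreter executes the program exactly.
    # Emptying __builtins__ is NOT a real sandbox; don't exec untrusted model output like this.
    exec(program, {"__builtins__": {}}, namespace)
    return namespace["answer"]


question = "The cafeteria had 23 apples. They used 20 and bought 6 more. How many apples do they have?"
print(solve_with_interpreter(question))  # 9
```

The model only has to get the structure of the problem right; the exact computation is delegated to a tool that cannot make arithmetic slips.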

Ines Almeida

10.08.23 08:01 AM

Machine translation has improved immensely thanks to AI, but training a single model on many languages remains tricky because the languages can interfere with one another. Researchers from Tel Aviv University and Meta studied this challenge and, through systematic experiments, identified what causes the most interference.

Ines Almeida

10.08.23 08:00 AM

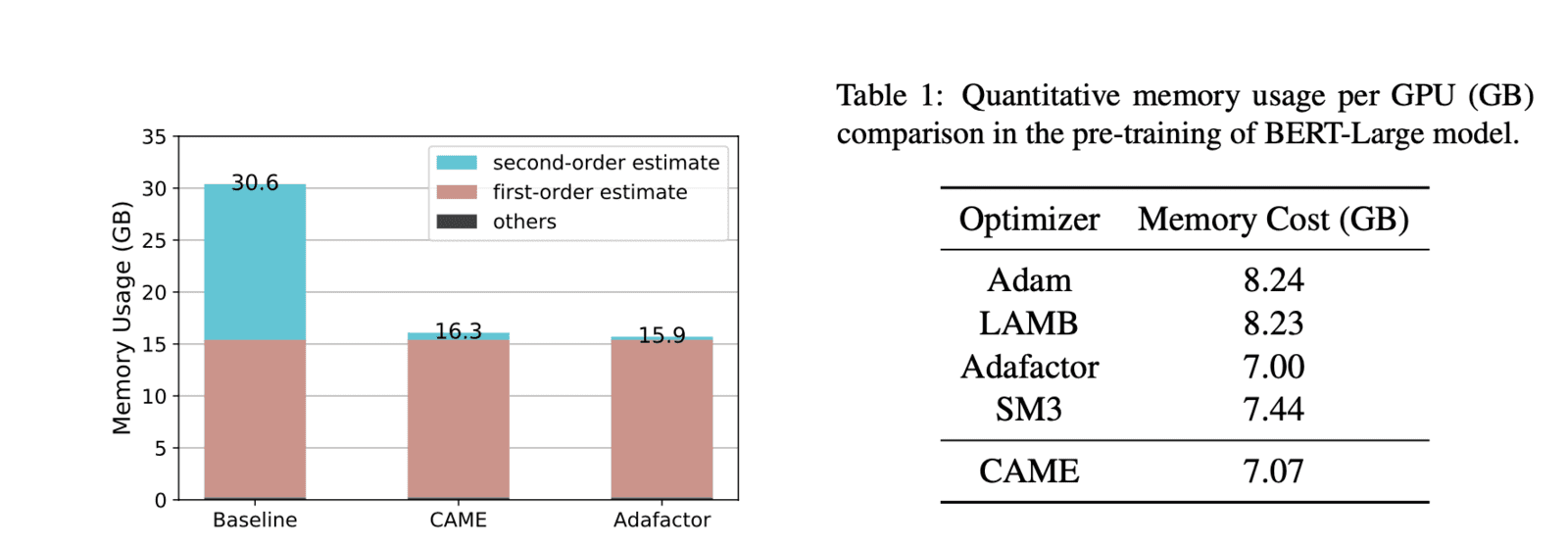

Researchers from the National University of Singapore and Noah's Ark Lab (Huawei) have developed a new training method that slashes memory requirements with no loss in performance. Their method, called CAME, matches state-of-the-art results when training models such as BERT while using over 40% less memory.

Ines Almeida

10.08.23 08:00 AM

Backpack models have an internal structure that is more interpretable and controllable than that of existing models such as BERT and GPT-3.

Ines Almeida

10.08.23 07:59 AM