Blog

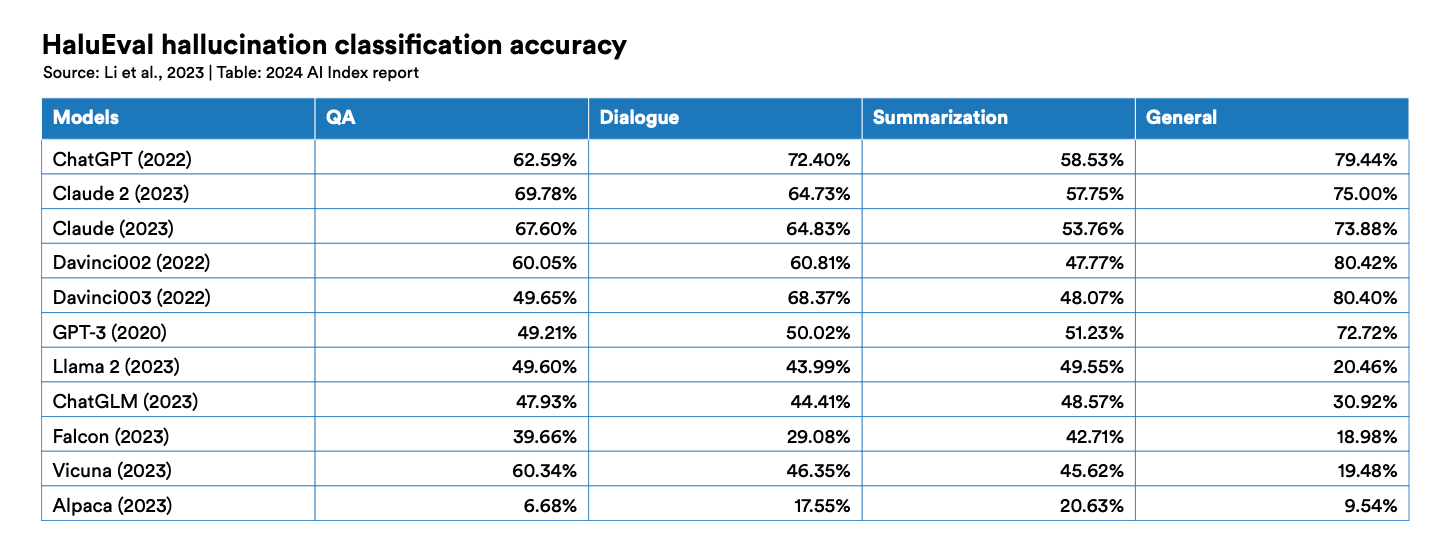

In this article, we'll dive into the key findings of the 2024 AI Index Report, focusing on benchmarks for truthfulness, reasoning, and agent-based systems, and explore their implications for businesses.

Ines Almeida

29.04.24 10:25 AM

The 2024 HAI AI Index Report reveals a rapidly evolving AI landscape characterized by rising training costs, potential data constraints, the dominance of foundation models, and a shift towards open-source AI.

Ines Almeida

29.04.24 09:25 AM

A new study from Harvard University reveals how LLMs can be manipulated to boost a product's visibility and ranking in recommendations.

Ines Almeida

15.04.24 02:00 PM

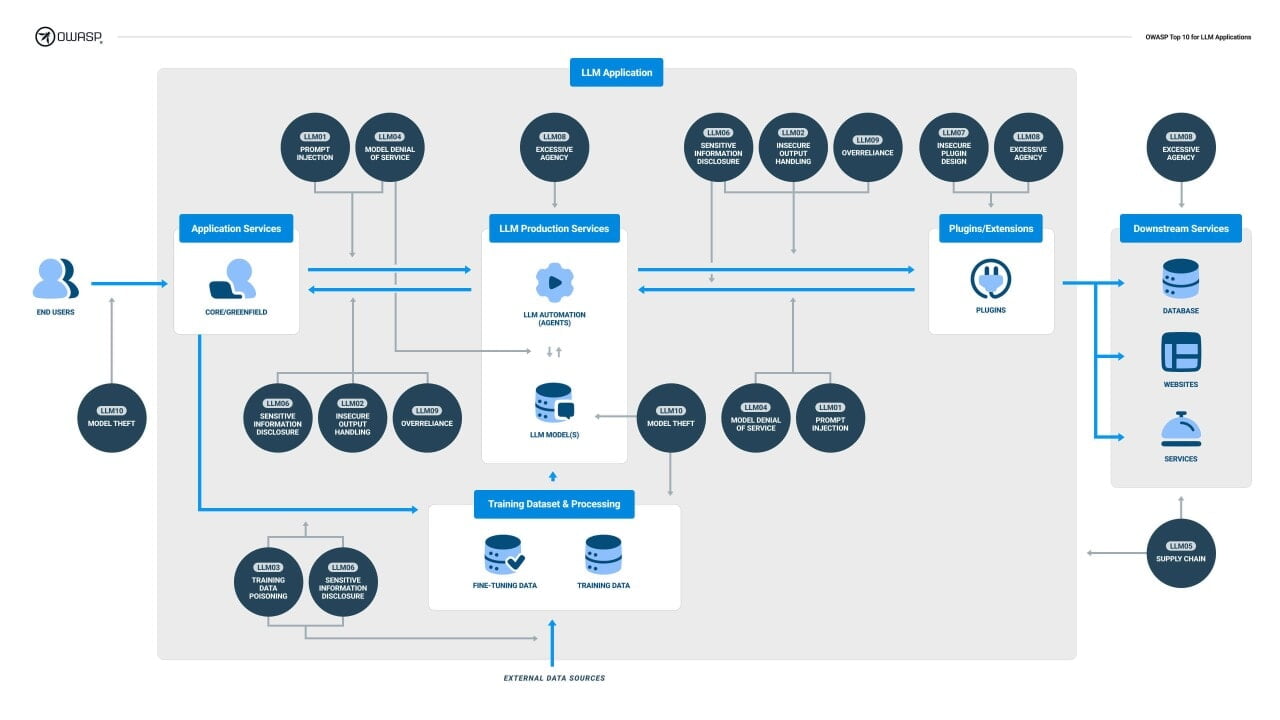

Recently, the Open Web Application Security Project (OWASP), a leading authority on cybersecurity, released its list of the Top 10 security risks for LLM applications. Here is what every executive should know about these critical LLM vulnerabilities.

Ines Almeida

04.04.24 04:21 PM

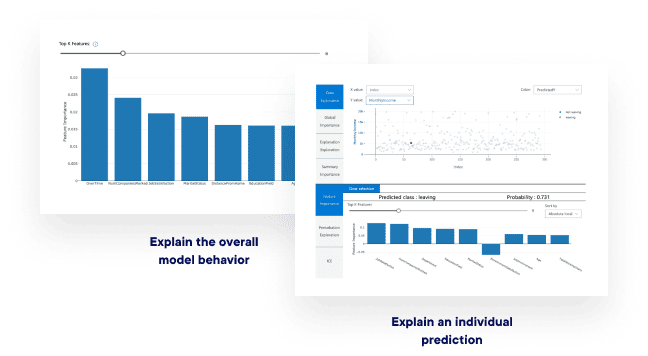

InterpretML is a valuable tool for unlocking the power of interpretable AI in traditional machine learning models. While it may have limitations when it comes to directly interpreting LLMs, the principles of interpretability and transparency remain crucial in the age of generative AI.

Ines Almeida

04.04.24 12:45 PM

It is crucial for business leaders to understand the limitations and potential pitfalls of current approaches to measuring AI capabilities.

Ines Almeida

03.04.24 10:42 AM

Among the myriad areas AI is transforming, innovation management stands out as a domain ripe for disruption. Innovation management, the art and science of bringing new and creative ideas to life, is critical for any organization aiming to maintain a competitive edge in the digital age.

Ines Almeida

22.02.24 03:45 PM

Crafting a generative AI transformation roadmap is about combining strategic foresight, ethical responsibility, and a commitment to continuous learning and adaptation.

Ines Almeida

30.01.24 09:09 PM

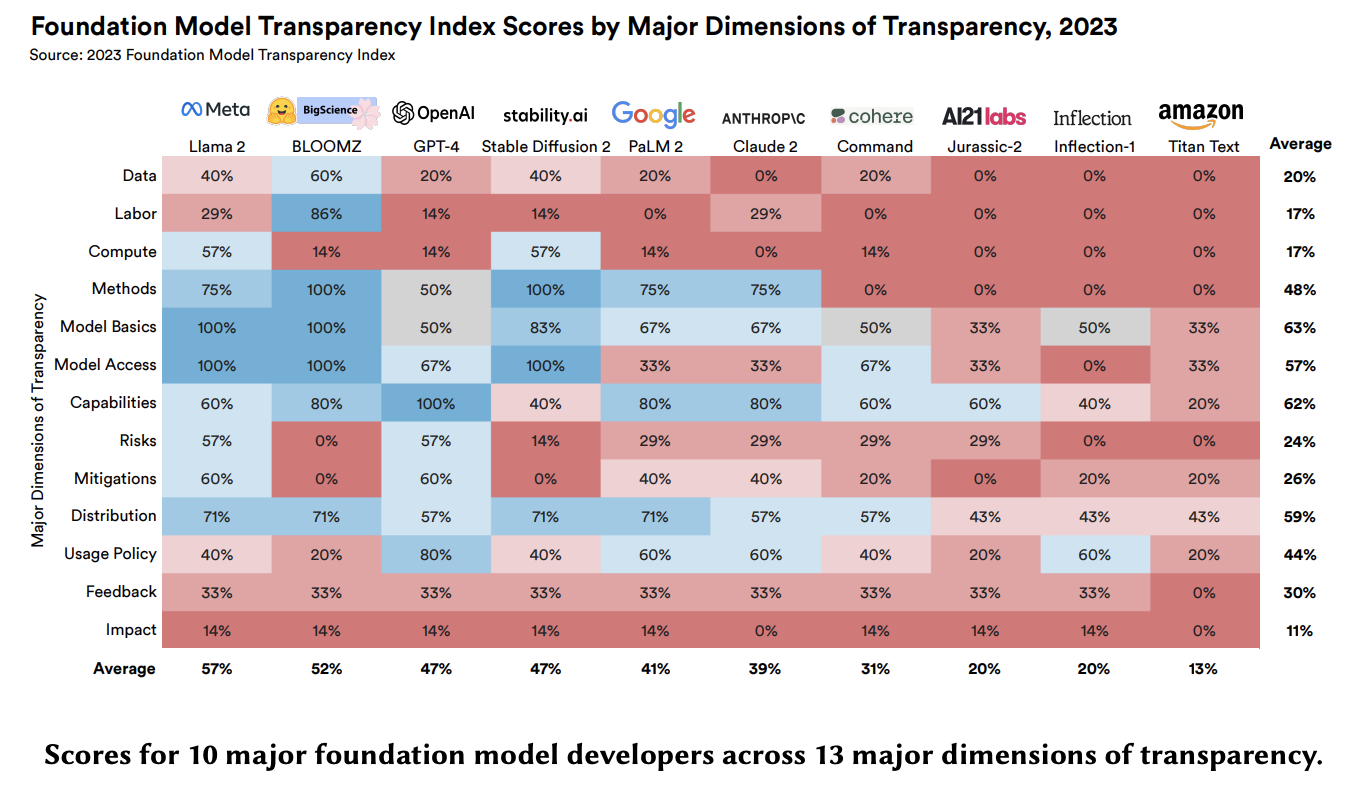

A recent critique calls into question a prominent AI transparency benchmark, illustrating the challenges in evaluating something as complex as transparency.

Ines Almeida

01.11.23 12:07 PM

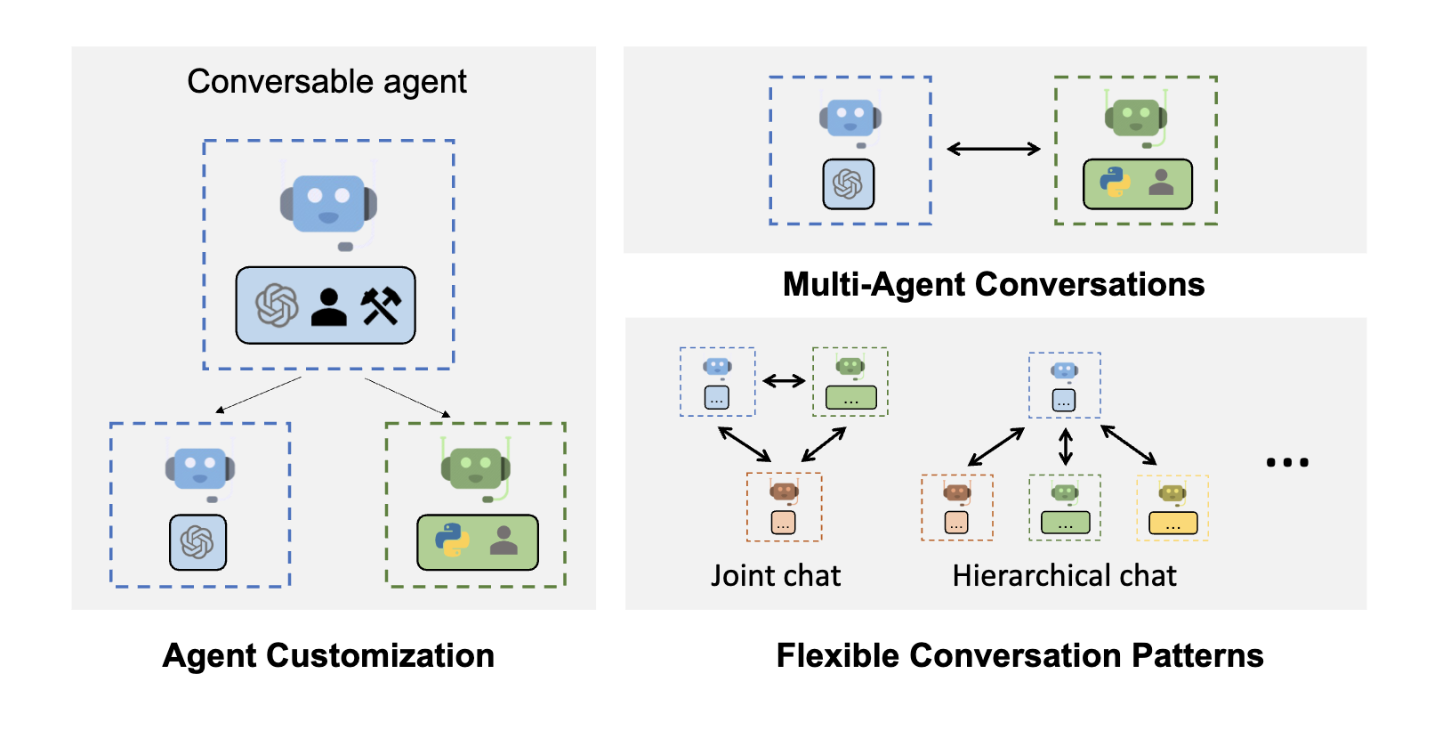

To accelerate the development of advanced conversational AI applications, Microsoft recently introduced AutoGen, an open-source Python library that streamlines the orchestration of multi-agent conversations.

Ines Almeida

24.10.23 02:13 PM

MemGPT applies operating-system principles such as virtual memory and process management to unlock more powerful applications of LLMs, all while staying within their inherent memory limits.

Ines Almeida

24.10.23 12:02 PM

How exactly should business leaders navigate the complex intersection between AI creation and existing copyright laws? A new research paper by legal scholar Dr Andres Guadamuz provides an enlightening analysis of this murky terrain.

Ines Almeida

15.09.23 09:33 AM

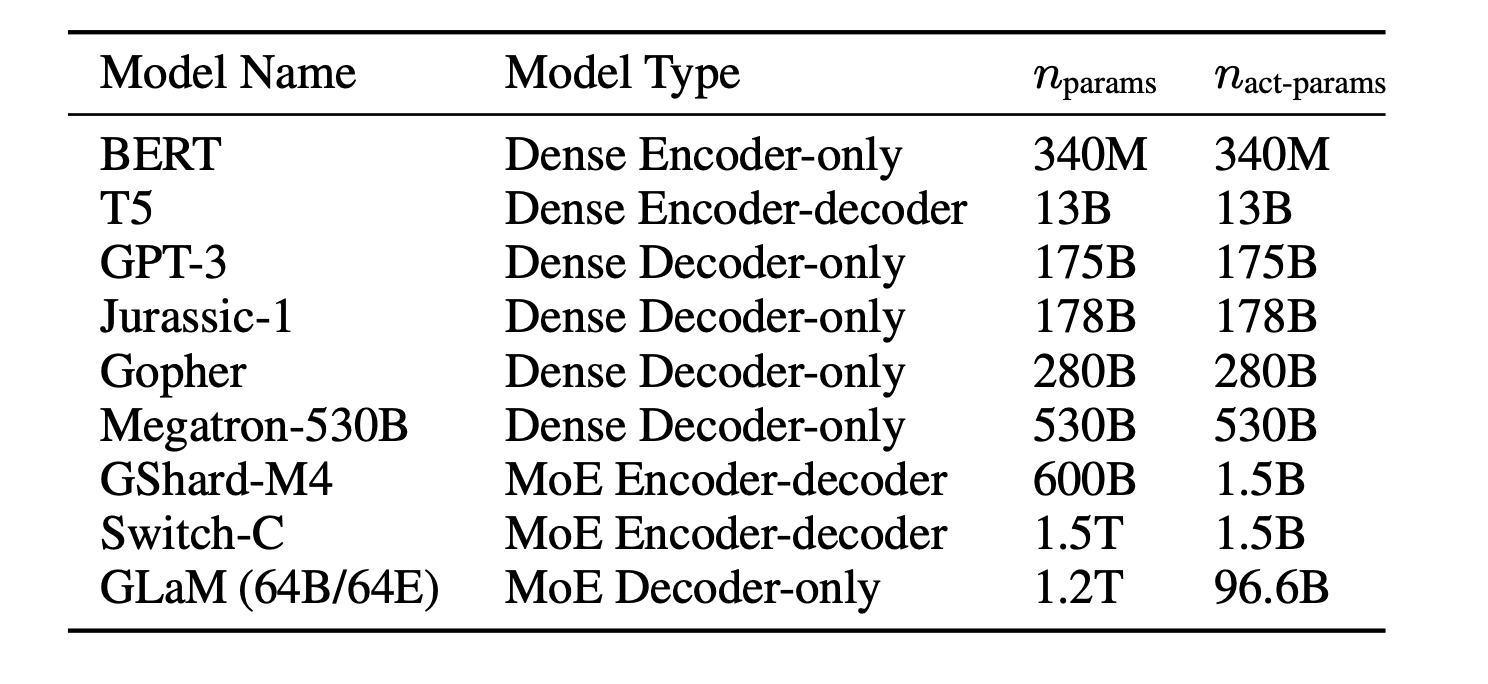

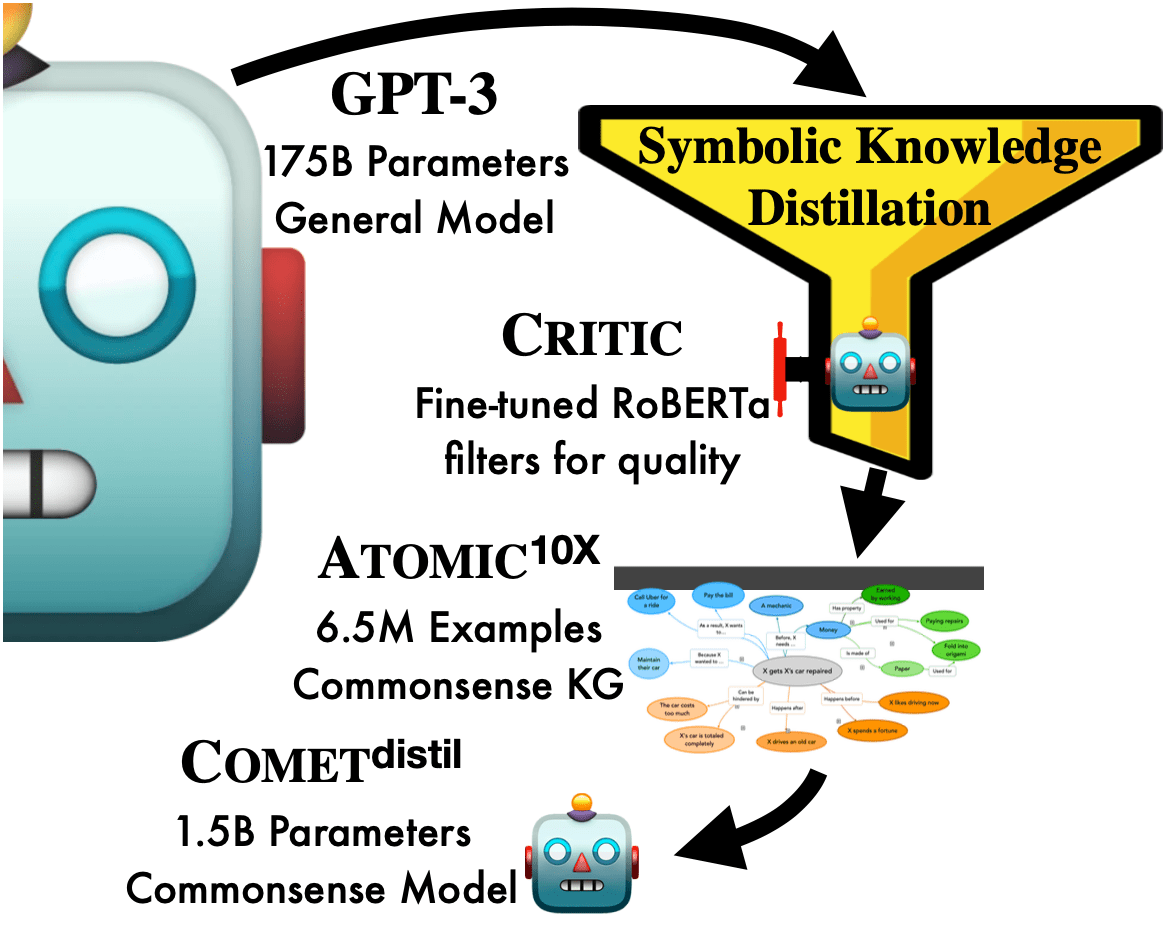

Rumors are swirling that GPT-4 may use an advanced technique called Mixture of Experts (MoE) to reach over 1 trillion parameters. This offers an opportunity to demystify MoE.

Ines Almeida

17.08.23 02:25 PM

Ines Almeida

16.08.23 11:38 AM

AI experts Alexandra Luccioni and Anna Rogers take a critical look at LLMs, analyzing common claims and assumptions while identifying issues and proposing ways forward.

Ines Almeida

16.08.23 09:04 AM

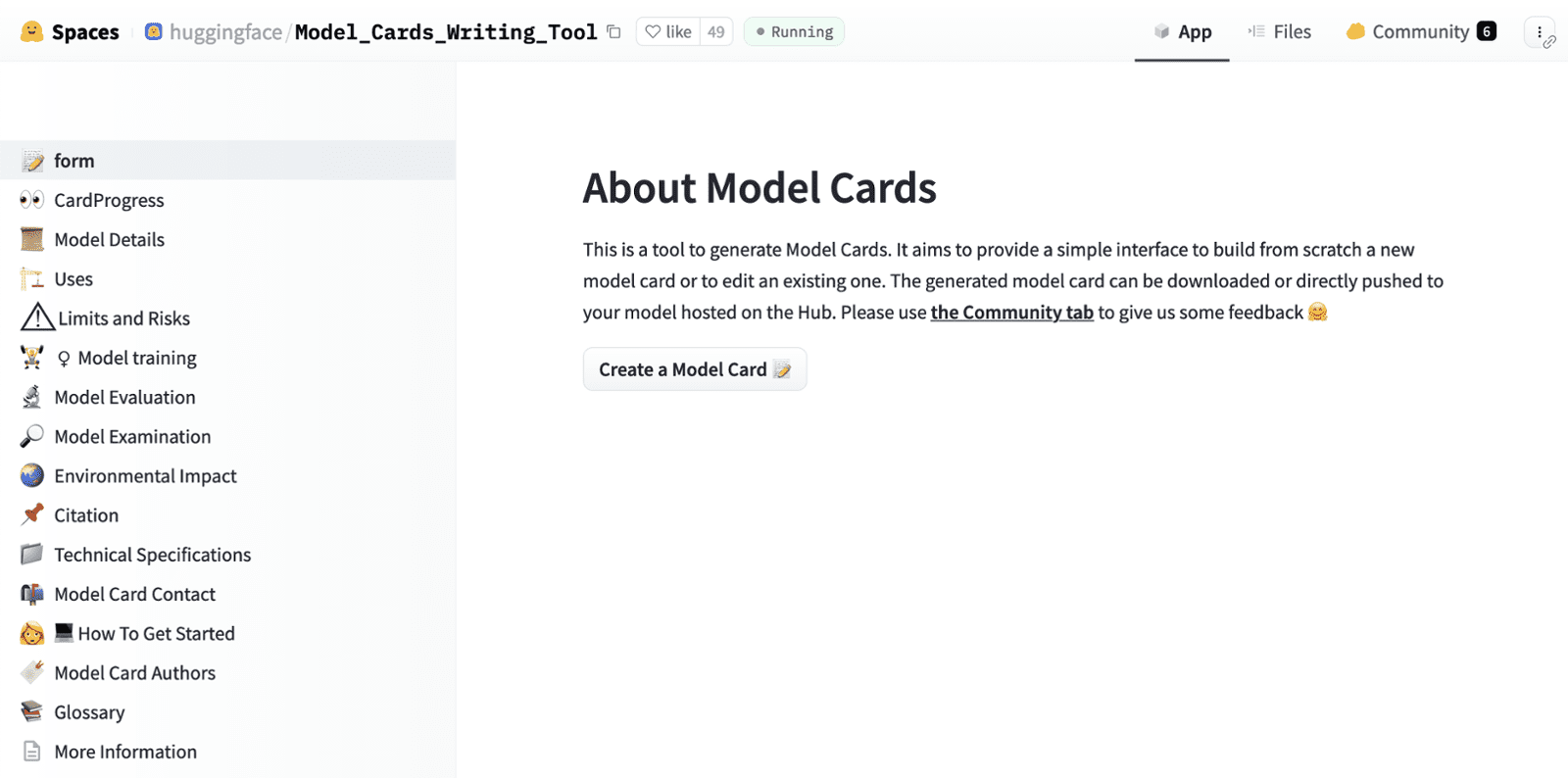

In 2019, a research paper proposed "model cards" as a way to increase transparency into AI systems and mitigate their potential harms.

Ines Almeida

13.08.23 10:39 PM

A thought-provoking paper from computer scientists raises important concerns about the AI community's pursuit of ever-larger language models.

Ines Almeida

13.08.23 09:46 PM